Publications

[Complete list of all entries in BibTeX format]

The copyright for most of the above papers has been transferred to a publisher. The ACM and IEEE grant permission to make a digital copy of the works on the authors' institutional repository. See also any copyright notices on the individual papers for details.

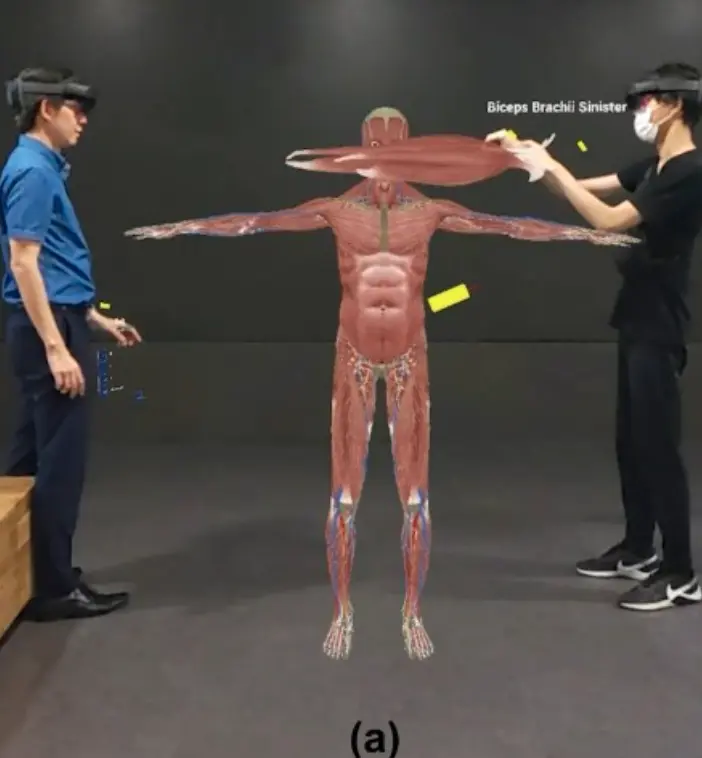

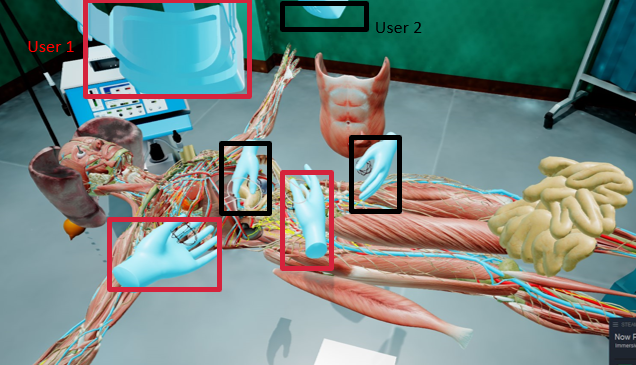

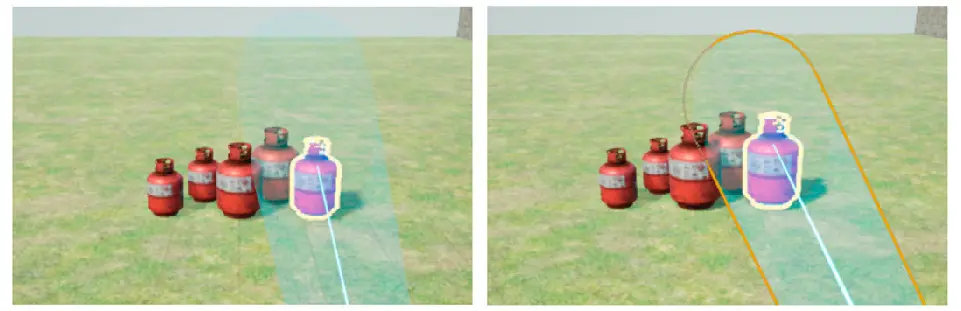

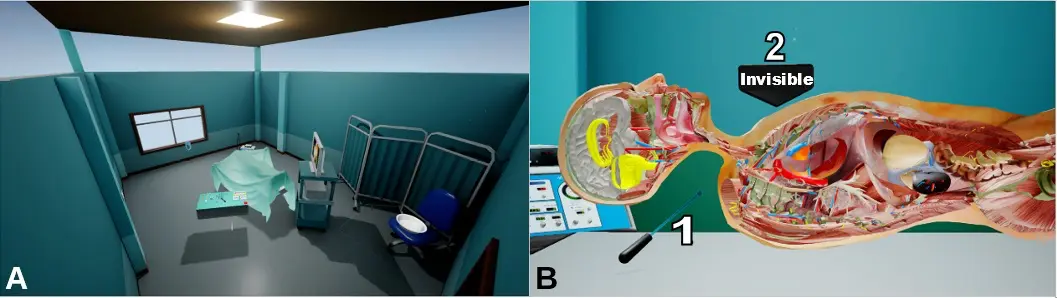

Mixed Reality for Group-Based, Integrated Instruction of Anatomy, Pathology, and Physical Examination

Mores Prachyabrued, Metasit Getrak, Peter Haddawy, Paphon Sa-Ngasoongsong and Gabriel Zachmann

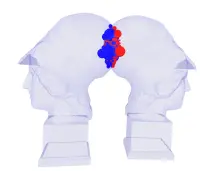

Anatomy is fundamental to medical education and clinical practice. Traditional instruction involves 2D atlases, dissections, and plastic models. Translating 2D images into a 3D spatial map is difficult. Dissections suffer from the cost of cadavers, formalin exposure, and emotional distress. Plastic models lack sufficient details and anatomical variation. Mixed reality (MR) addresses these issues by offering a rich, 3D environment where students can affordably and safely explore a virtual body. Anatomy instruction is typically delivered in small groups to promote active learning and knowledge sharing. Using MR for group study simplifies the body tracking burden because participants meet face-to-face, allowing both verbal and nonverbal communication while enabling them to safely move through the space. Medical curricula have integrated anatomy with clinical sciences to highlight its relevance. In clinical practice, students must link anatomy with pathology and diagnostic methods. We present a novel MR system that facilitates group‑based, integrated instruction of anatomy, pathology, and physical examination. Feedback shows the system boosts learning, yet performance and learning curve need improvement.

Published in:

IEEE VR 2026 Conference on Virtual Reality and 3D User Interfaces, Daegu, Korea, March 21 - 25, 2026.

Files:

Poster

Alchemistische Morphologien – Multimodale Analysen

Julian Jachmann, Ines Röckl, Gabriel Zachmann and Thomas Hudcovic

The goal is an online search engine that can match an uploaded image featuring rocaille ornamentation to similar prints.

Published in:

DHd 2026, annual conference of the association Digital Humanities im deutschsprachigen Raum, Vienna, Austria, February 23 - 27, 2026.

Files:

Poster

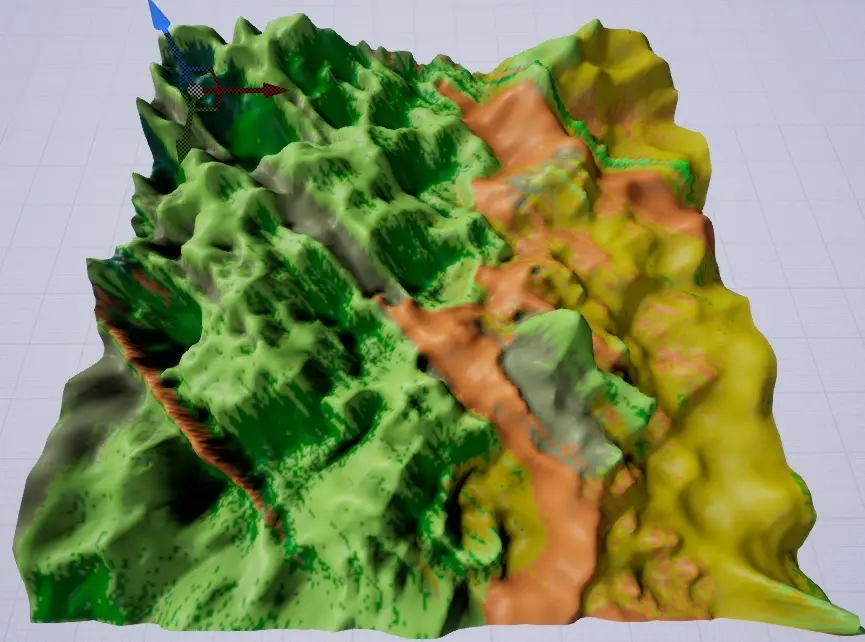

Earthbender: An Interactive System for Stylistic Heightmap Generation using a Guided Diffusion Model

Danial Barazandeh, Gabriel Zachmann

Games, 3D simulations, and cinematic pipelines depend on realistic 3D terrain for immersion. However, creating detailed 3D terrain is labour-intensive: artists sculpt elevation, iterate on mountains, rivers, lakes, and must often repeat the entire workflow when the design changes. Recent generative approaches are attempting to address this challenge, but they primarily focus on a single landform (typically mountains) and overlook structural features, such as river networks, roads, or lakes. We propose a sketch‑conditioned diffusion framework that generates depth maps representing complete landscapes, including mountains, river networks, and lakes. Our method extends Stable Diffusion with a ControlNet branch that takes multiple channel inputs: Canny edges for overall structure, red for mountains, green for lakes, and blue as a carving tool for painting roads and rivers onto the heightmap. This approach addresses the technical challenges while prioritizing the artist’s creative control. Our interactive system, Earthbender, gives the artist fine-grained control over every detail in the heightmap, demonstrating a collaborative model where the generative AI acts as a powerful assistant to achieve an artistic vision, rather than replacing the artist’s creativity. Our experiments show that our ControlNet-based approach significantly outperforms traditional GANs in both data efficiency and output quality. Furthermore, we present an analysis demonstrating that the choice of loss function acts as a powerful artistic control, allowing the user to select between a sharp, detailed style and a softer, more organic output better suited for downstream game engine workflows.

Published in:

MIG 2025, Zurich, Switzerland, December 3-5, 2025

Files:

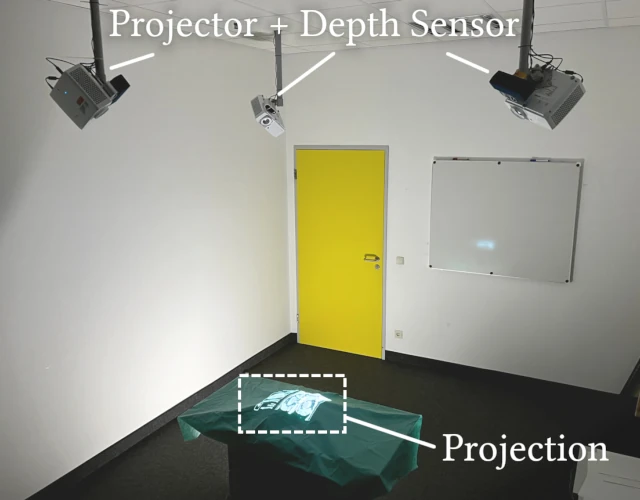

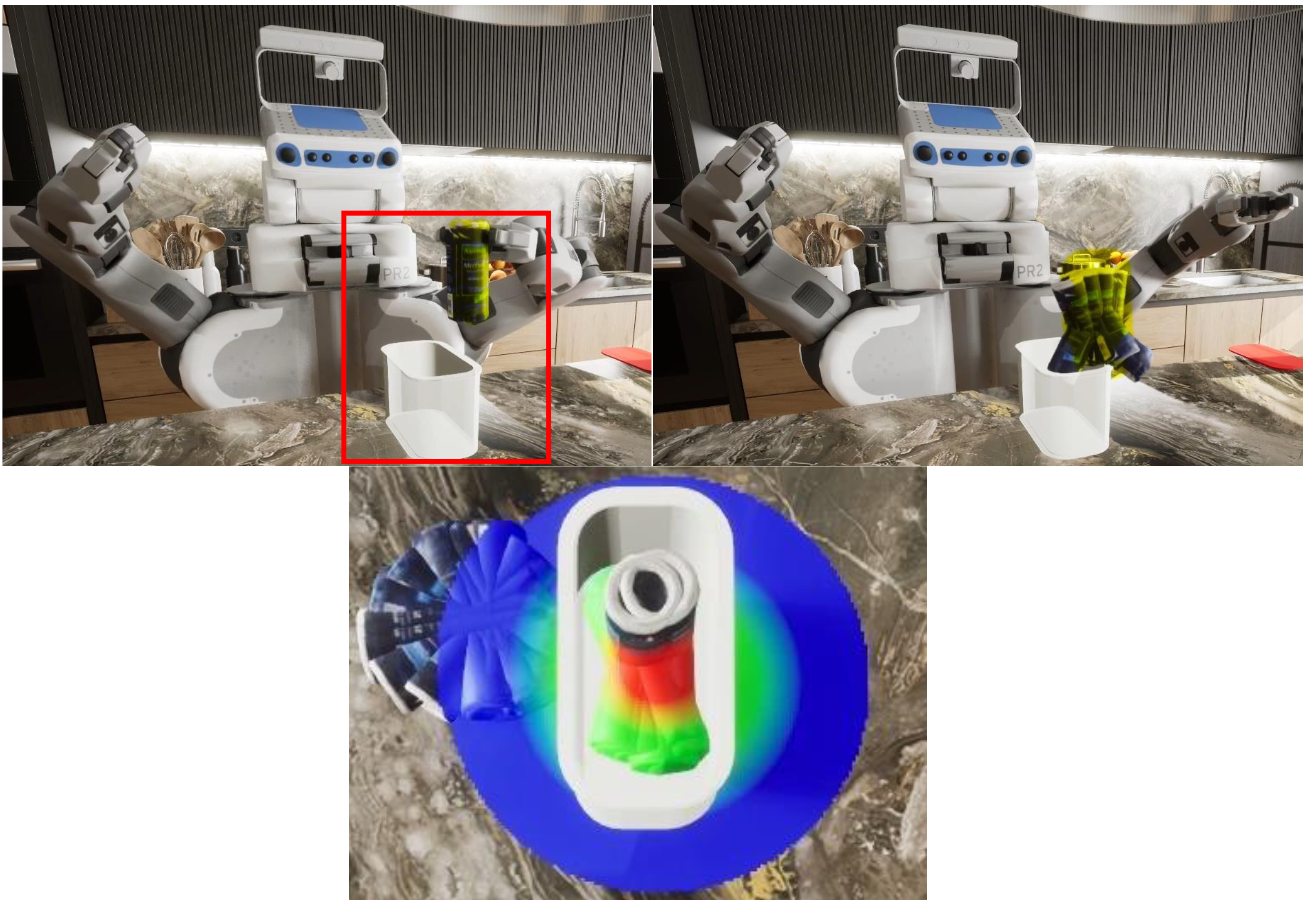

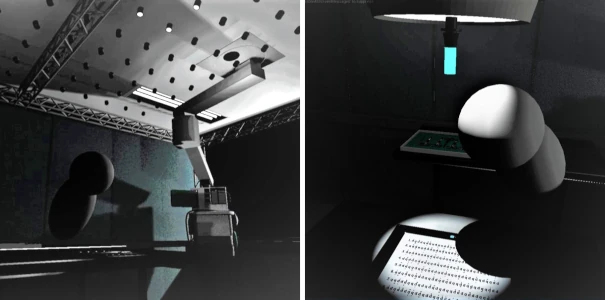

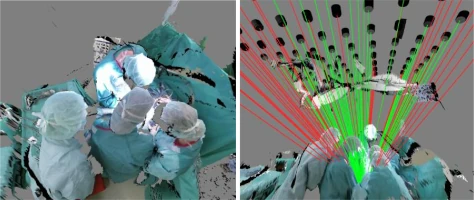

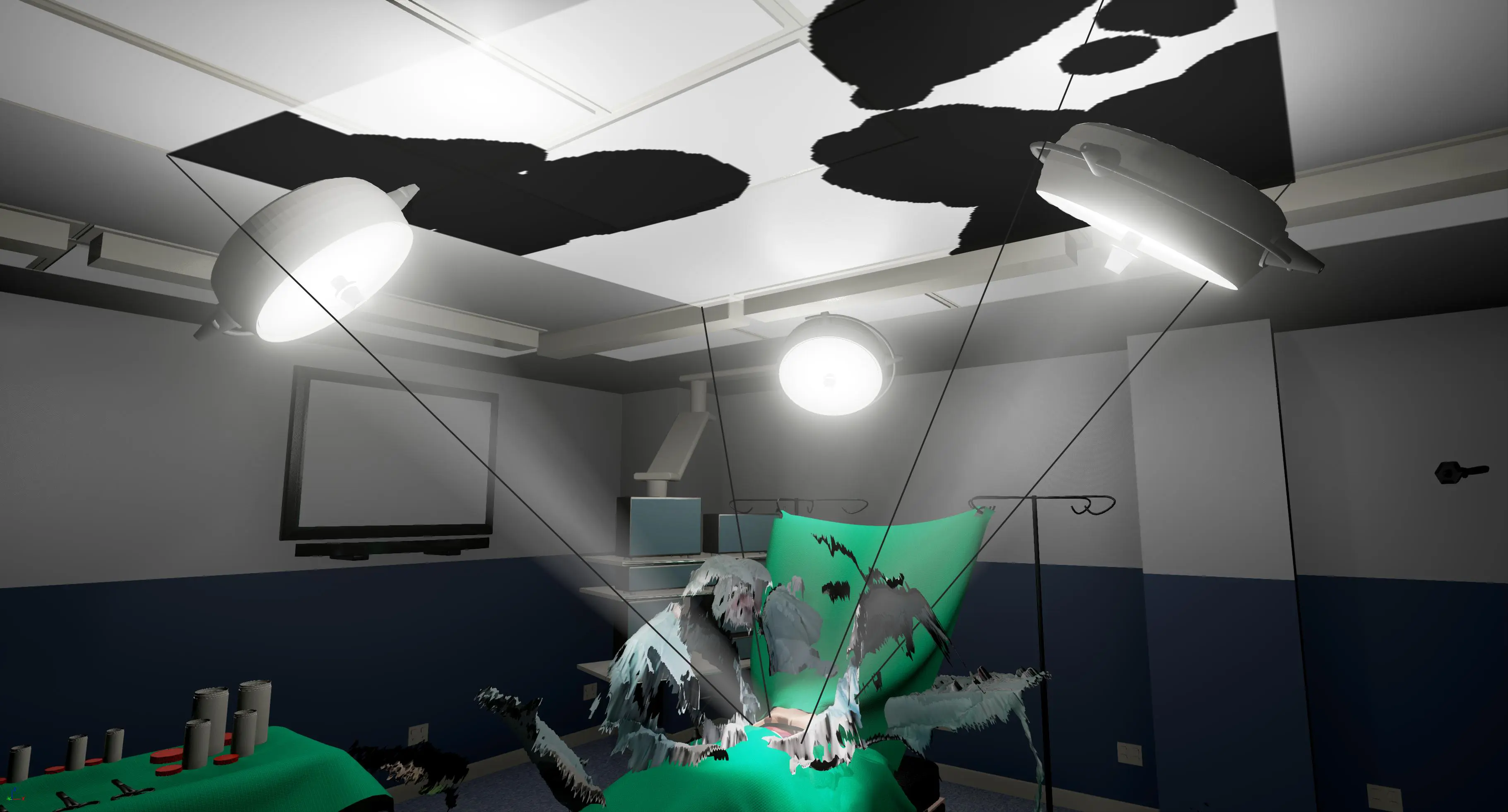

Shadow-Free Projection with Blur Mitigation on Dynamic, Deformable Surfaces

Andre Muehlenbrock, Yaroslav Purgin, Nicole Steinke, Verena Uslar, Dirk Weyhe, Rene Weller, Gabriel Zachmann

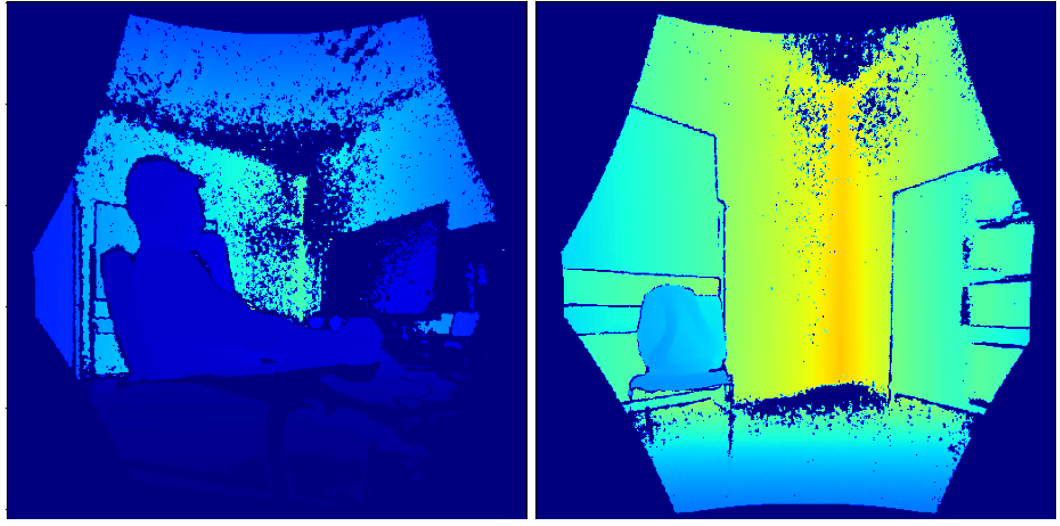

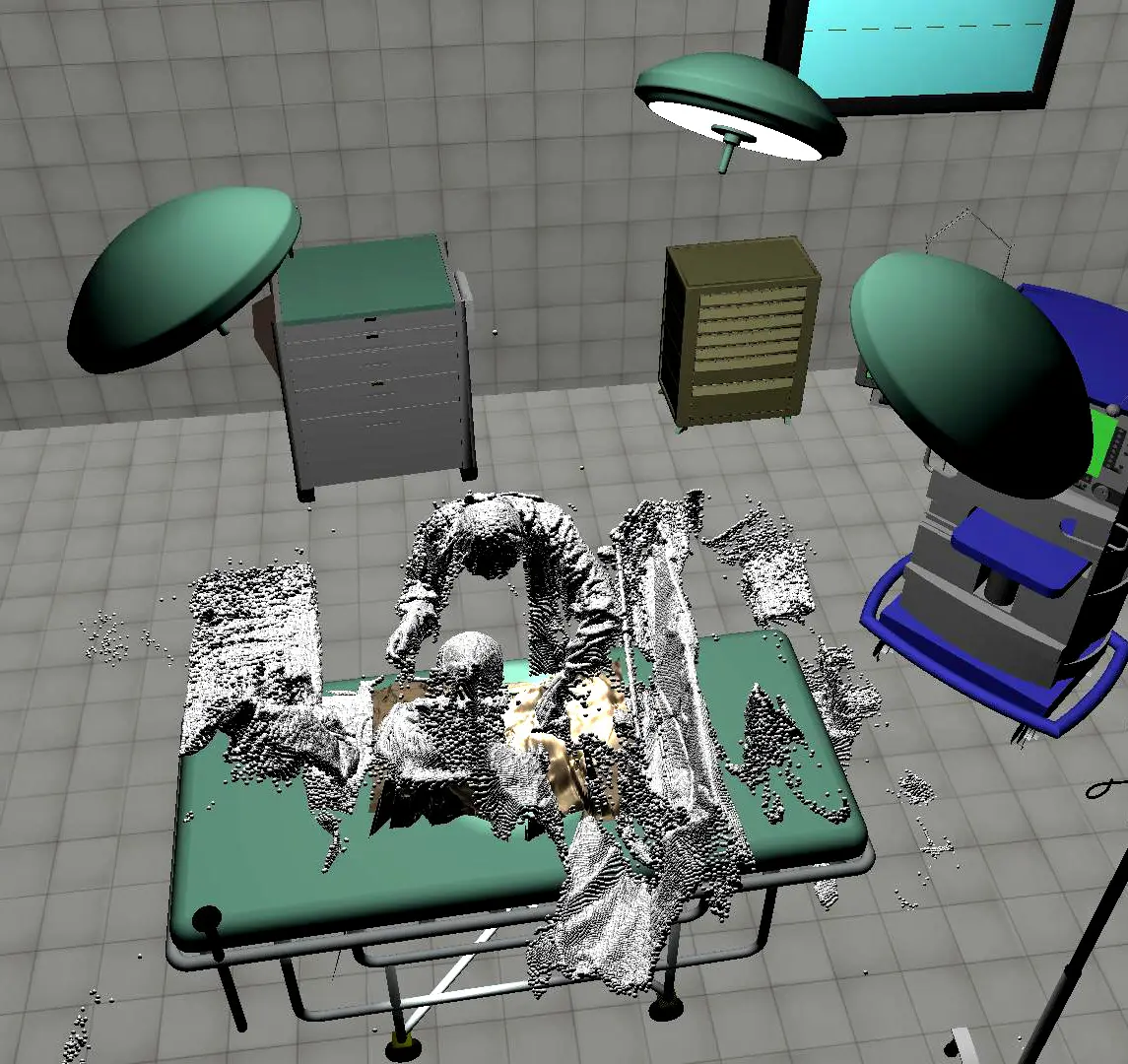

We present a real-time projection mapping system for visualizing information and displaying user interfaces on uneven, deformable surfaces in dynamic environments, where the surface gets partially and dynamically occluded. An important application area is the operating room, where this technology would allow for projection onto the surgical drapes. To achieve precise and adaptive geometric correction in setups with multiple projectors and overlapping, partially occluded projection regions, we adapt a point cloud rendering technique that accurately and efficiently reconstructs surface geometry in the projectors’ image space. This enables an overlap precision of 1.6 mm at a projection distance of 2 m, even on uneven surfaces. In addition, we propose two novel GPU-based blur mitigation methods that address blur caused by inevitable inaccuracies of the depth sensors and the overlapping projector images. A user study (n = 23) shows that our blur mitigation strategies significantly enhance perceived readability, reduce visual artifacts, and lower user workload compared to conventional multi-projector blending. Our system supports projection on arbitrary surfaces without requiring explicit segmentation, and is well suited to meet the demands of sensitive environments, including those with sterility constraints or limited display access.

Source Code: https://www.github.com/muehlenb/deformableprojection

Published in:

ACM VRST 2025, Montreal, Canada, November 12-14, 2025 (Honorable Mention)

Files:

Paper

Supplemental Material

Slides

Video

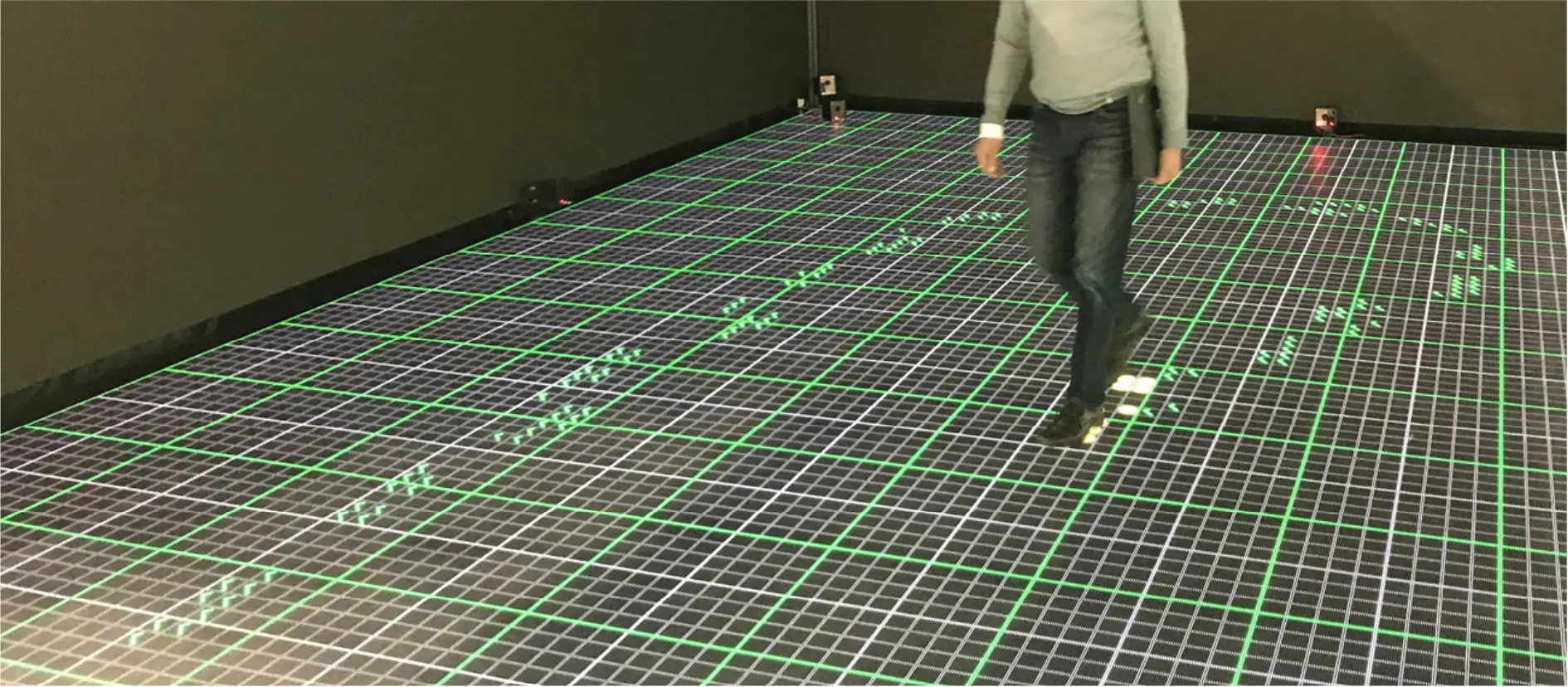

Balancing Speed and Visual Fidelity of Dynamic Point Cloud Rendering in VR

Andre Muehlenbrock, Rene Weller, Gabriel Zachmann

Efficient rendering of dynamic point clouds from multiple RGB-D cameras is essential for a wide range of VR/AR applications. In this work, we introduce and leverage two key parameters in a mesh-based rendering approach and conduct a systematic study of their impact on the trade-off between rendering speed and perceptual quality. We show that both parameters enable substantial performance improvements while causing only negligible visual degradation. Across four GPU generations and multiple deployment scenarios, continuous dynamic point clouds from seven Microsoft Azure Kinects can achieve binocular rendering at triple-digit frame rates, even on mid-range GPUs. Our results provide practical guidelines for balancing visual fidelity and efficiency in real-time VR point cloud rendering, demonstrating that mesh-based approaches are a scalable and versatile solution for applications ranging from consumer headsets to large-scale projection systems.

Source Code: https://www.github.com/muehlenb/blendpcr

Published in:

ICAT-EGVE 2025, Karlskrona, Sweden, December 03-05, 2025

Files:

Extended Reality for the Operating Room (XR4OR) (Dagstuhl Seminar 25062)

Peter Haddawy, Anja Hennemuth, Ron Kikinis, Gabriel Zachmann, Mario Lorenz, Anke Reinschlüssel and all authors of the abstracts in this report

This report documents the program and the outcomes of Dagstuhl Seminar 25062 "Extended Reality for the Operating Room (XR4OR)".

Published in:

Dagstuhl, October, 2025.

Files:

Report

Links:

Dagstuhl Seminar 25062

Dagstuhl Report on Seminar 25062

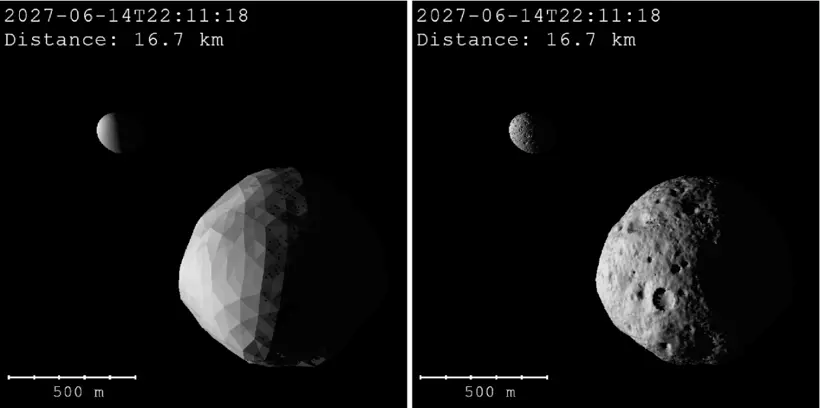

Guided Inverse Gravity Modeling for Asteroids using Neural Networks

Jan-Frederik Stock, Hermann Meißenhelter, Thomas Hudcovic and Gabriel Zachmann

This work is about inverse gravity modeling, which means calculating the internal mass distribution of a body from its gravitational field. Studying the mass distribution can give valuable insight into the history of a body and can help build better models of small bodies. The goal of this work was to adapt a machine-learning-based inverse gravity modeling method so that the mass can be predetermined in a certain area. This is useful because additional information about the mass distribution might be available from other sources. The inversion method should respect the defined mass while calculating the remaining mass from the gravitational field. The main results of this work are:

- a dataset consisting of mass distributions and corresponding gravitational fields to train CNN based inversion methods

- a customized loss function for the GeodesyNet [2], which enables the specification of mass/density for a certain area

Published in:

GI VR/AR 2025, Chemnitz, Germany, September 16 - 17, 2025.

Files:

Poster

Paper

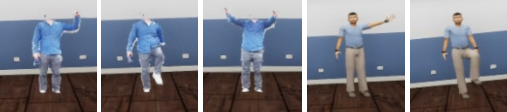

Towards a Modular XR Platform for Personalized Upper Limb Rehab

Sambhav Manohar, Mohit Madhu, Chaitanya Anand, Abhijit Patil, Gabriel Zachmann, Himangshu Sarma

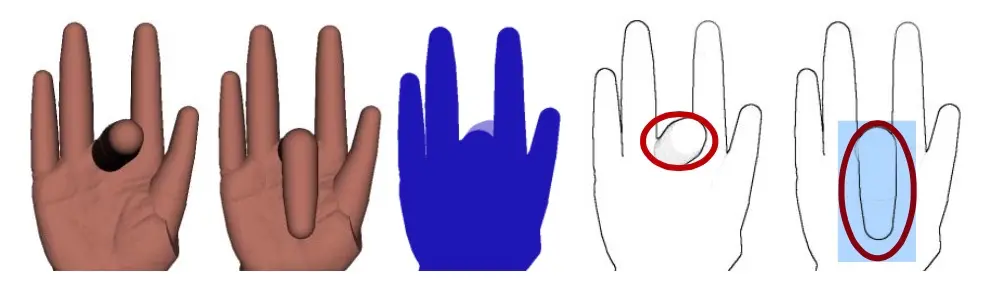

A modular, clinically informed XR-based rehabilitation platform is presented to support customizable therapy programs for upper limb conditions. Designed in collaboration with rehabilitation physicians, the system enables medical professionals to create, adapt, and deploy personalized therapy routines while monitoring patient progress remotely. The platform incorporates gesture-based exercises and personalized progression through two core mechanisms: (1) a tolerance adaptation algorithm that dynamically adjusts evaluation difficulty based on user-specific parameters, including age, injury severity, and demographic profile, and (2) a weighted gesture scoring model that prioritizes the motion components most relevant to the therapeutic objectives of each exercise. Modular gameplay allows reconfiguration according to clinical prescriptions for various conditions, including Carpal Tunnel Syndrome, post-stroke upper limb recovery, and rotator cuff rehabilitation. All exercise-to-gesture mappings are derived from validated clinical documentation to ensure medical accuracy. This system establishes a foundation for a general-purpose, adaptable XR rehabilitation framework tailored to individual patient needs and therapeutic outcomes.

Published in:

ISMAR 2025, Daejeon, South Korea, October 8 - 12, 2025.

Files:

Paper

Virtual reality simulation for learning minimally invasive endodontics: a randomized controlled trial

Chalinee Srakoopun, Siriwan Suebnukarn, Peter Haddawy, Maximilian Kaluschke, Rene Weller, Myat Su Yin, Panuroot Aguilar, Panupat Phumpatrakom, Kriangkrai Pinchamnankool, Kamon Budsaba and Gabriel Zachmann

Learning minimally invasive endodontic techniques presents unique challenges, requiring precise tooth structure preservation and strong spatial awareness. This study evaluated a clinically realistic virtual reality (VR) simulator, featuring eye-tracking feedback and automated outcome scoring, as an innovative tool to support student learning in minimally invasive endodontics.

Published in:

Springer Nature, October, 2025.

Files:

Paper

Links:

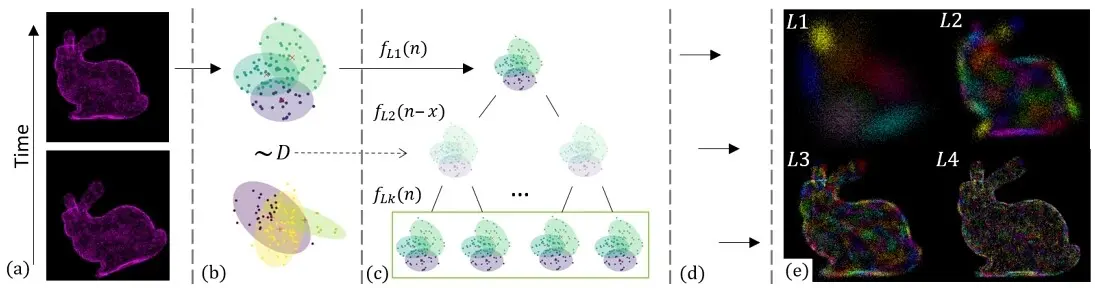

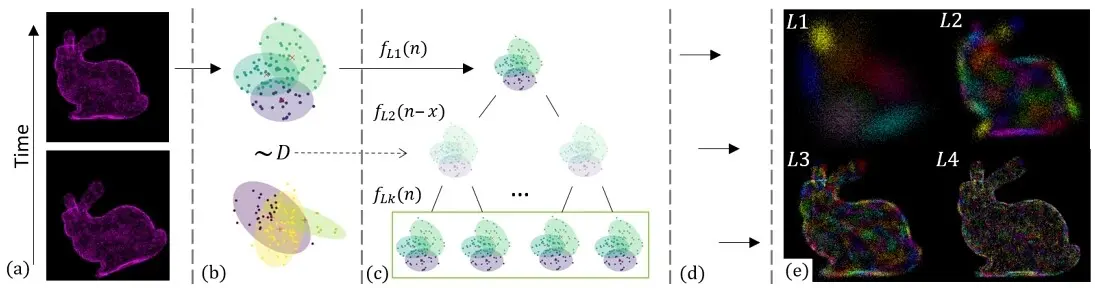

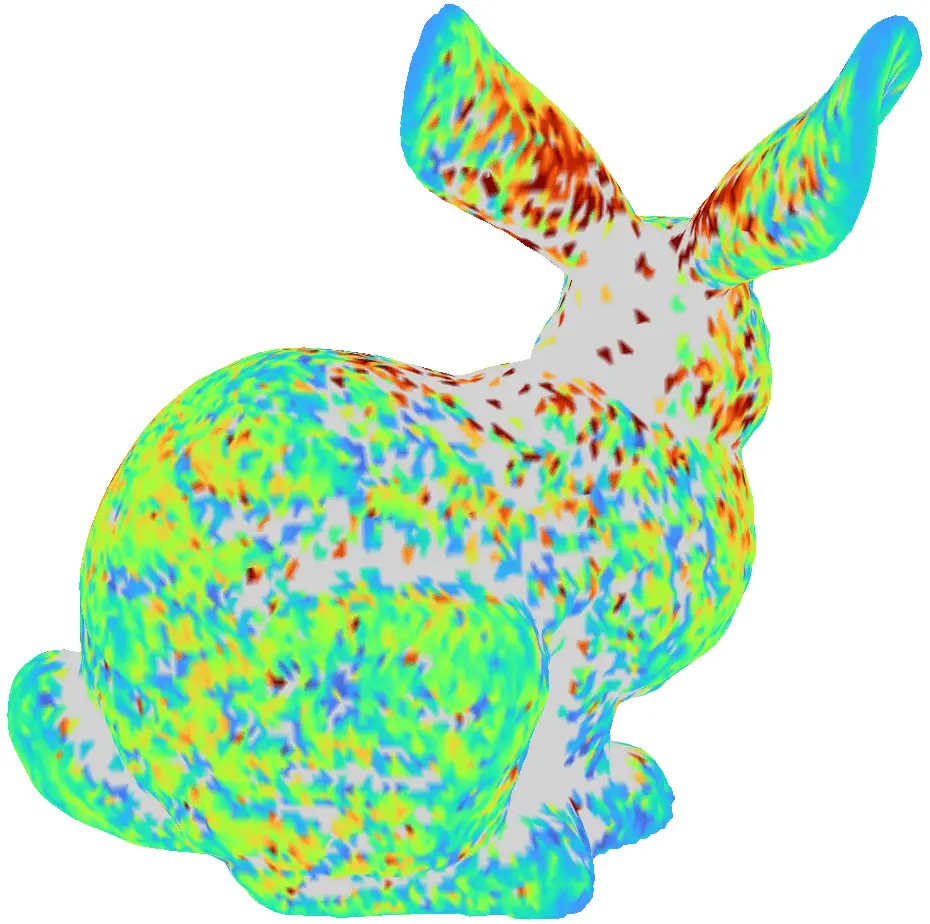

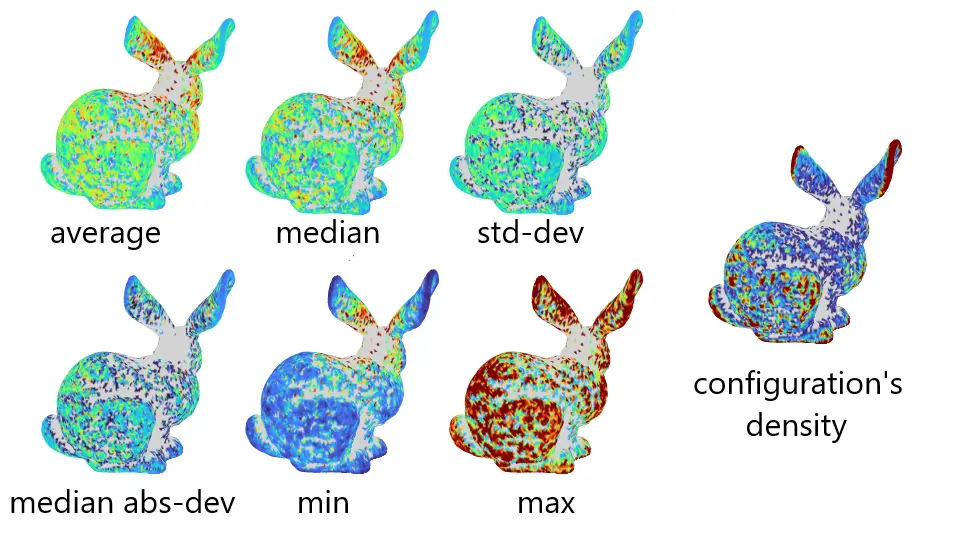

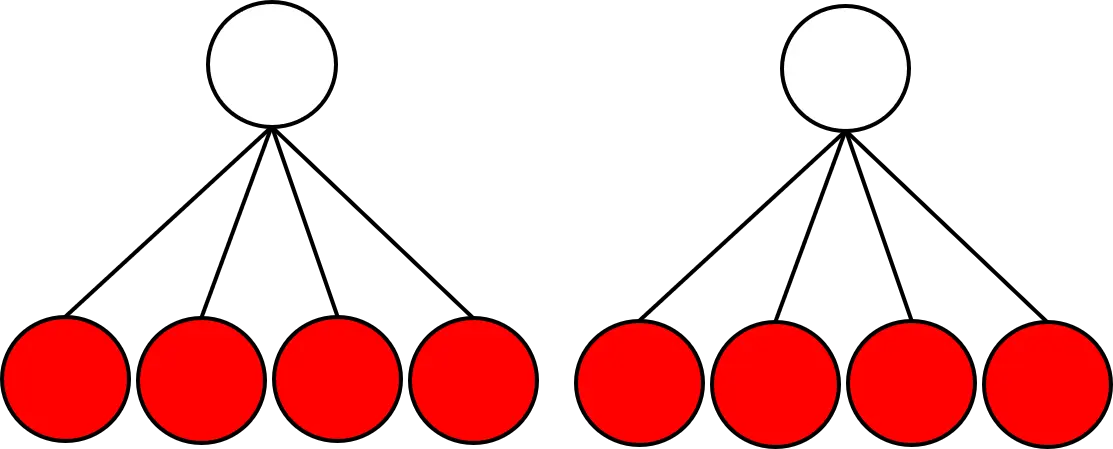

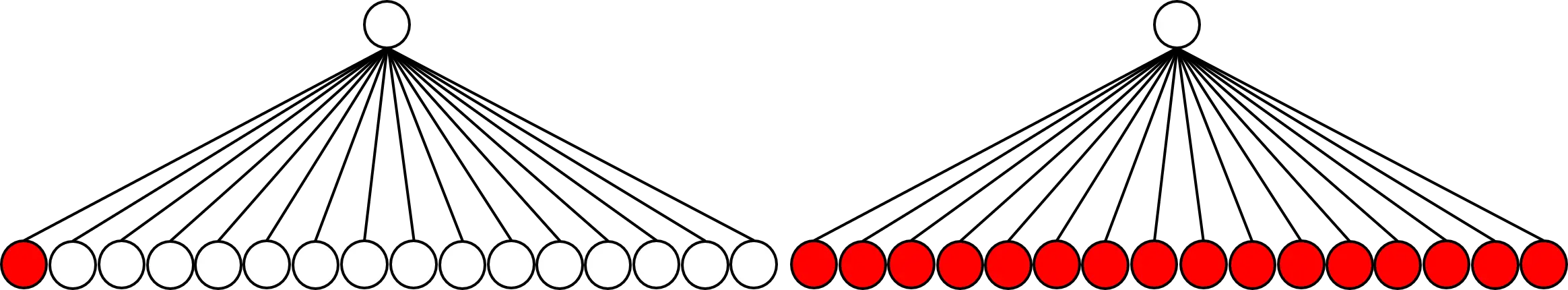

Point Cloud Streaming using Temporal Hierarchical GMMs

Roland Fischer, Tobias Gels, Haya Almaree, Gabriel Zachmann

Efficient processing and accurate representation of point clouds are crucial for many tasks, such as real-time 3D scene and avatar reconstruction. Especially for web/cloud-based streaming and telepresence, minimizing time, size, and bandwidth becomes paramount. We propose a novel approach for compact point cloud representation and efficient real-time streaming using a generative model consisting of a hierarchy of overlapping Gaussian Mixture Models (GMMs). Our level-wise construction scheme allows for dynamic construction and rendering of LODs, progressive transmission, and bandwidth- and computing power-adaptive transmission. Utilizing temporal coherence in sequential input, we reduce construction time significantly. Together with our highly optimized and parallelized CUDA implementation, we achieve real-time speeds with high-fidelity reconstructions. Moreover, we achieve significantly higher compression factors, up to 59 %, than previous work with only slightly lower accuracy.

Published in:

Web3D '25: The 30th International Conference on 3D Web Technology, Siena, Italy, September 9 - 10, 2025.

Files:

Paper

Movie

Slides

Links:

Virtual Reality and Mixed Reality - 22nd EuroXR International Conference, EuroXR 2025

Despina Michael-Grigoriou, Gabriel Zachmann, Regis Kopper, Sang Ho Yoon, Stefanie Zollmann, Patrick Bourdot

22nd EuroXR International Conference, EuroXR 2025, Winterthur, Switzerland, September 3–5, 2025, Proceedings

This book constitutes the refereed proceedings of the 22nd International Conference on Virtual Reality and Mixed Reality, EuroXR 2025, held in Winterthur, Switzerland, during September 3–5, 2025.

The 17 full papers presented were carefully reviewed and selected from 51 submissions. The papers are grouped into the following topics: Rendering and streaming; content synthesis and creation; human factors and perception; and interaction techniques.

Published in:

22nd EuroXR International Conference, EuroXR 2025, Winterthur, Switzerland, September 3–5, 2025, Proceedings.

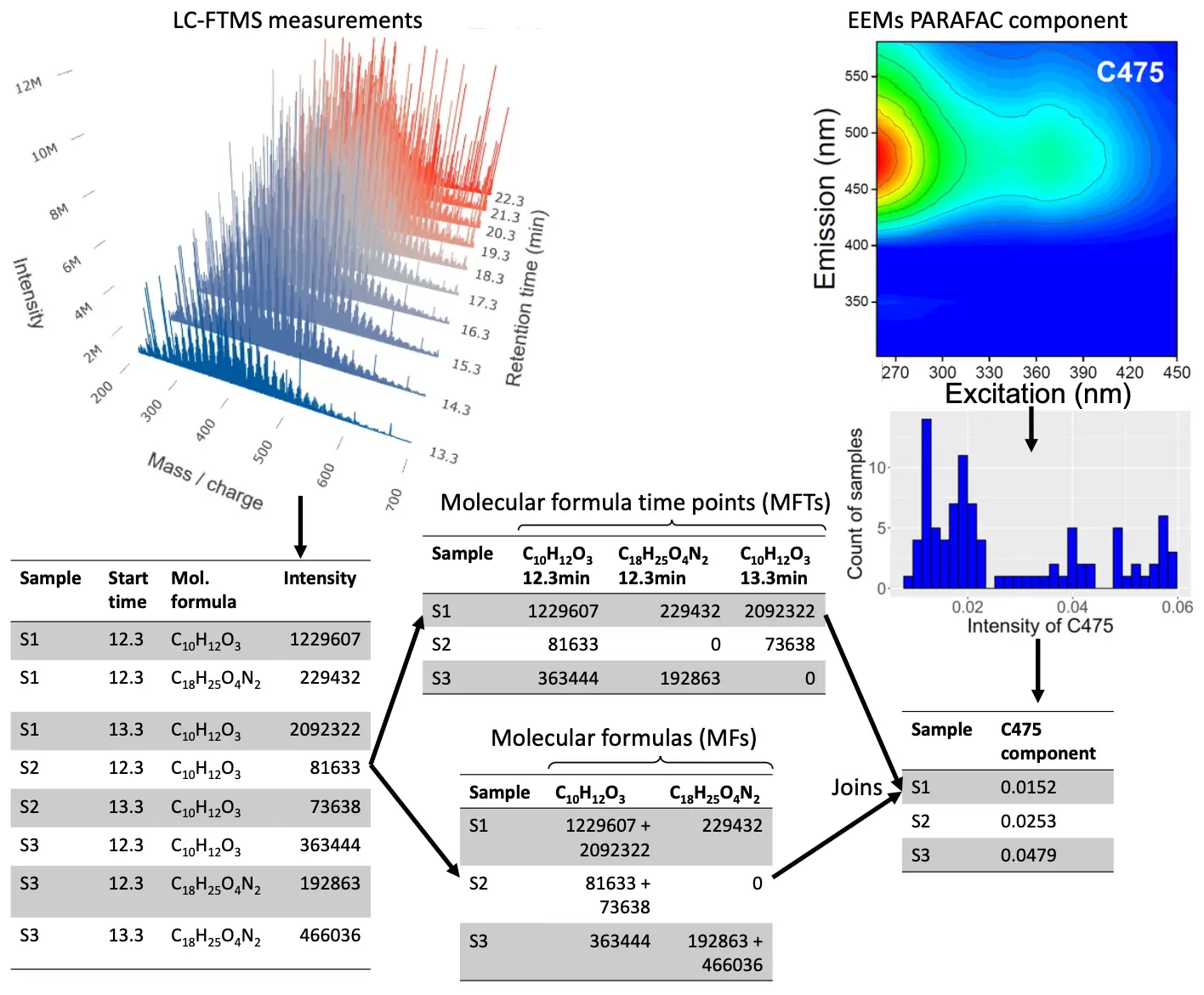

Optimizing machine learning-based prediction of terrestrial dissolved organic matter in the ocean using fluorescence and LC-FTMS data

Marlo Bareth, Boris P. Koch, Gabriel Zachmann, Xianyu Kon, Oliver J. Lechtenfeld and Sebastian Maneth

Marine dissolved organic matter (DOM) is an extremely complex mixture of organic compounds that plays a crucial role in the global carbon cycle. In the Arctic, climate change is accelerating the release of terrestrial organic carbon. Since chemical information is the only way to track DOM sources and fate, it is essential to improve analytical and data science approaches to assess DOM composition. Here, we compare random forest (RF), support vector machines, and generalized linear models (GLM) to predict a fluorescence-derived proxy for terrestrial DOM based on molecular formula data from liquid chromatography coupled with Fourier transform mass spectrometry (LC-FTMS). We systematically evaluate different data preprocessing, normalization, and ML techniques to optimize prediction accuracy and computational efficiency. Our results show that a GLM with sum normalization provides the most accurate and efficient predictions, achieving a normalized root mean square error (NRMSE) of 5.7%, close to the precision of the fluorescence measurement. The prediction based on RF regression was slightly less accurate and required significantly more computation time compared to GLM, but was most robust against data preprocessing and independent of linear correlations. Feature selection significantly improved the performance of all models, with robust predictions obtained using only ca. 2,000 of the ca. 70,000 molecular features per sample. Additionally, we assessed the impact of chromatographic retention time on prediction accuracy and explored the key molecular features contributing to terrestrial DOM signatures using Shapley values and permutation importance (for RFs). Our study is a blueprint for the application of ML to enhance the analysis of high-resolution mass spectrometry data, offering a scalable approach for predicting information important for the understanding of marine DOM chemistry.

Published in:

Files:

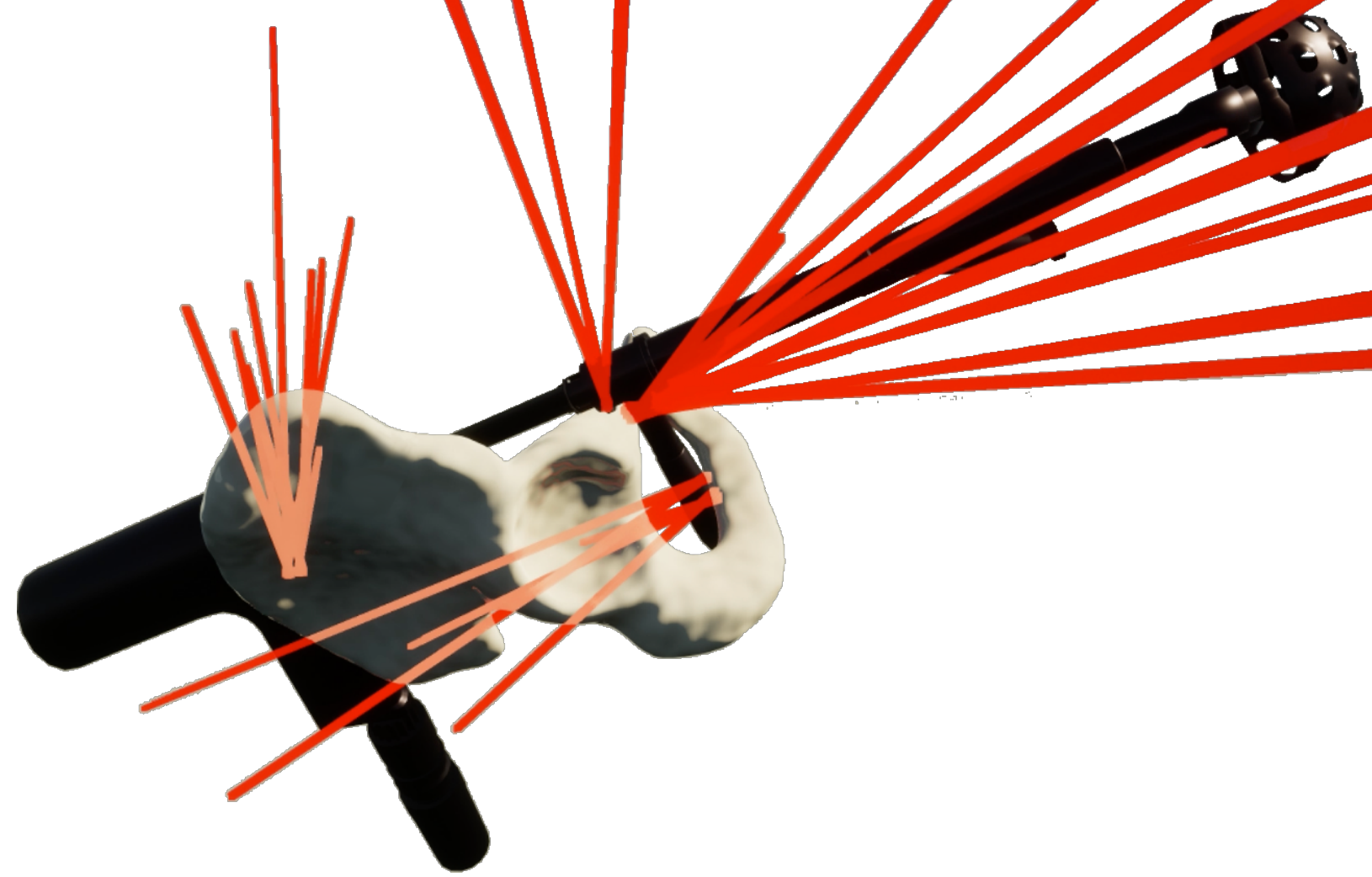

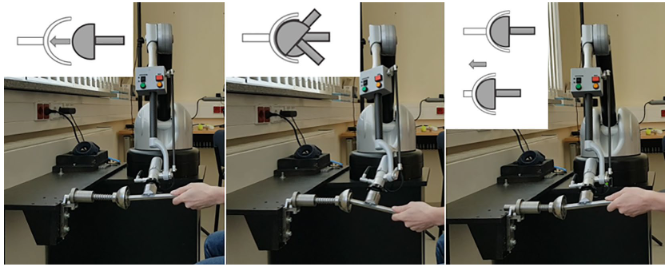

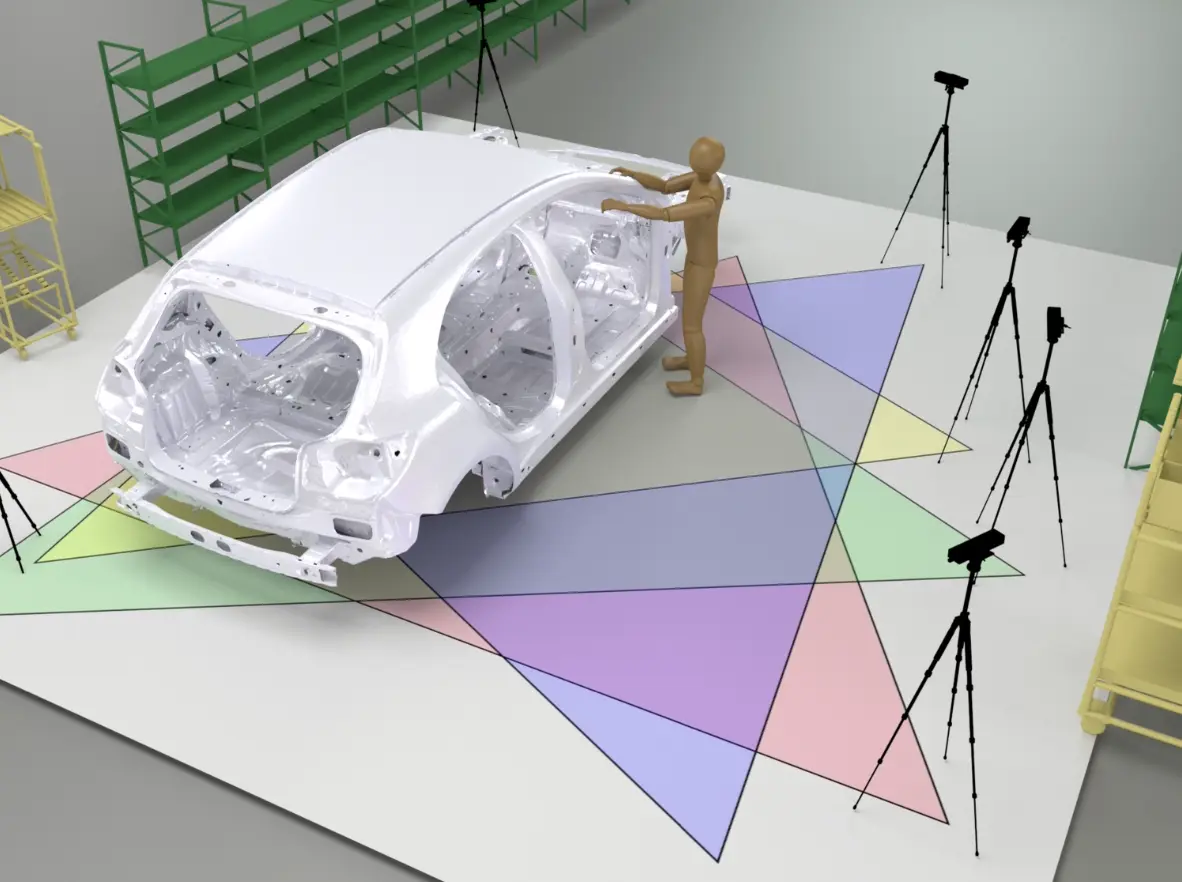

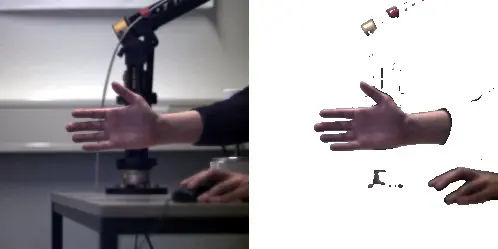

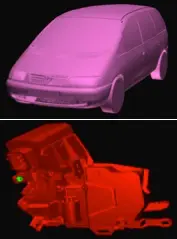

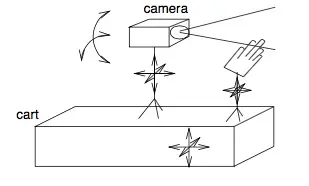

Recurrent Multi-view 6DoF Pose Estimation for Marker-less Surgical Tool Tracking

Niklas Agethen, Janis Rosskamp, Tom L. Koller, Jan Klein and Gabriel Zachmann

Purpose: Marker-based tracking of surgical instruments facilitates surgical navigation systems with high precision, but requires time-consuming preparation and is prone to stains or occluded markers. Deep learning promises marker-less tracking based solely on RGB videos to address these challenges. In this paper, object pose estimation is applied to surgical instrument tracking using a novel deep learning architecture. Methods: We combine pose estimation from multiple views with recurrent neural networks to better exploit temporal coherence for improved tracking. We also investigate the performance under conditions where the instrument is obscured. We enhance an existing pose (distribution) estimation pipeline by a spatio-temporal feature extractor that allows for feature incorporation along an entire sequence of frames. Results: On a synthetic dataset we achieve a mean tip error below 1.0 mm and an angle error below 0.2° using a four-camera setup. On a real dataset with four cameras we achieve an error below 3.0 mm. Under limited instrument visibility our recurrent approach can predict the tip position approximately 3 mm more precisely than the non-recurrent approach. Conclusion: Our findings on a synthetic dataset of surgical instruments demonstrate that deep-learning-based tracking using multiple cameras simultaneously can be competitive with marker-based systems. Additionally, the temporal information obtained through the architecture’s recurrent nature is advantageous when the instrument is occluded. The synthesis of multi-view and recurrence has thus been shown to enhance the reliability and usability of high-precision surgical pose estimation.

Published in:

International Journal of Computer Assisted Radiology and Surgery, 2025.

Files:

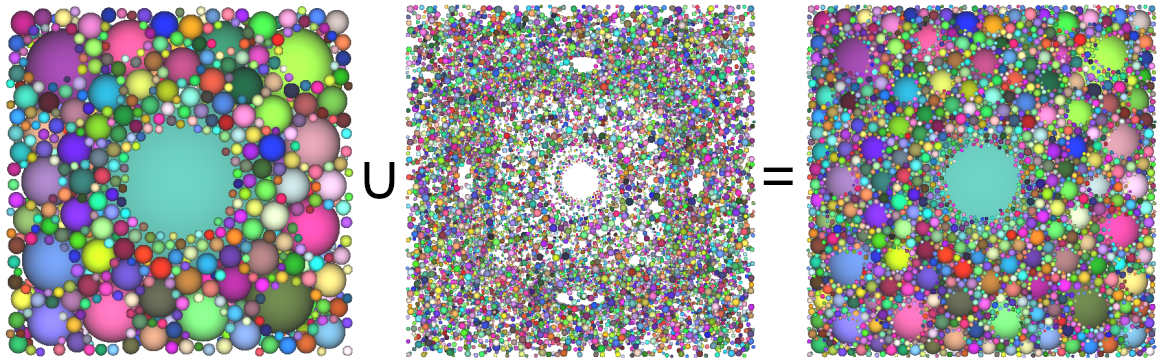

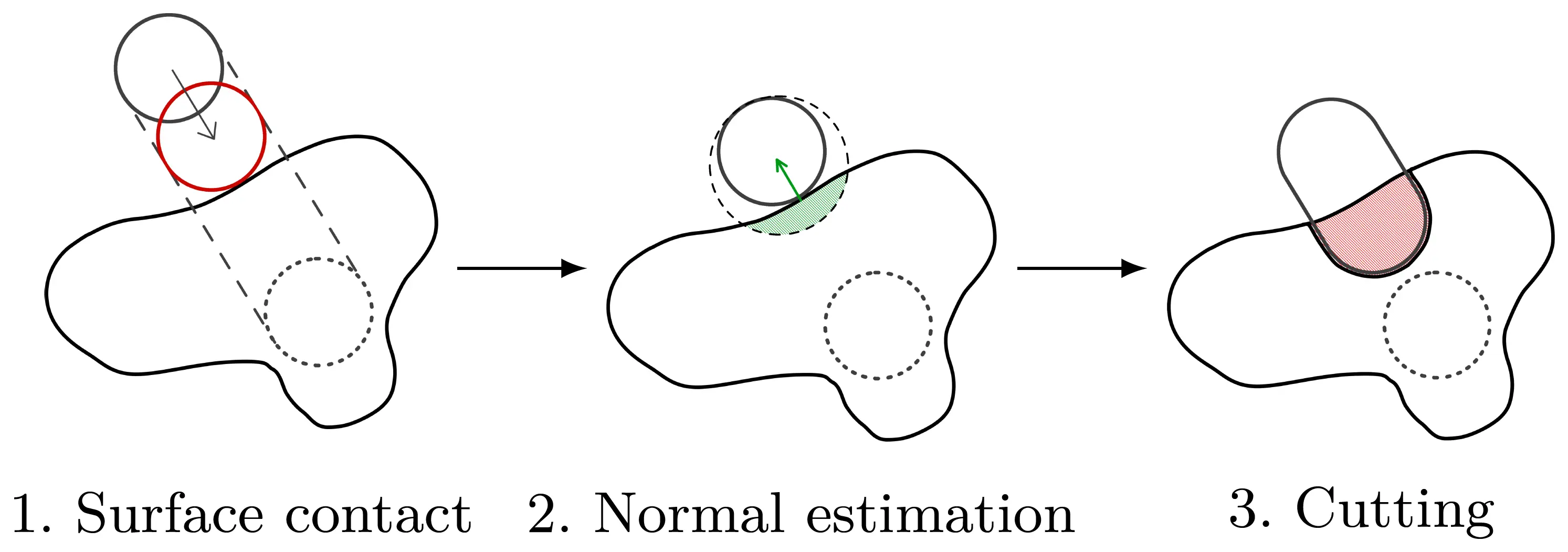

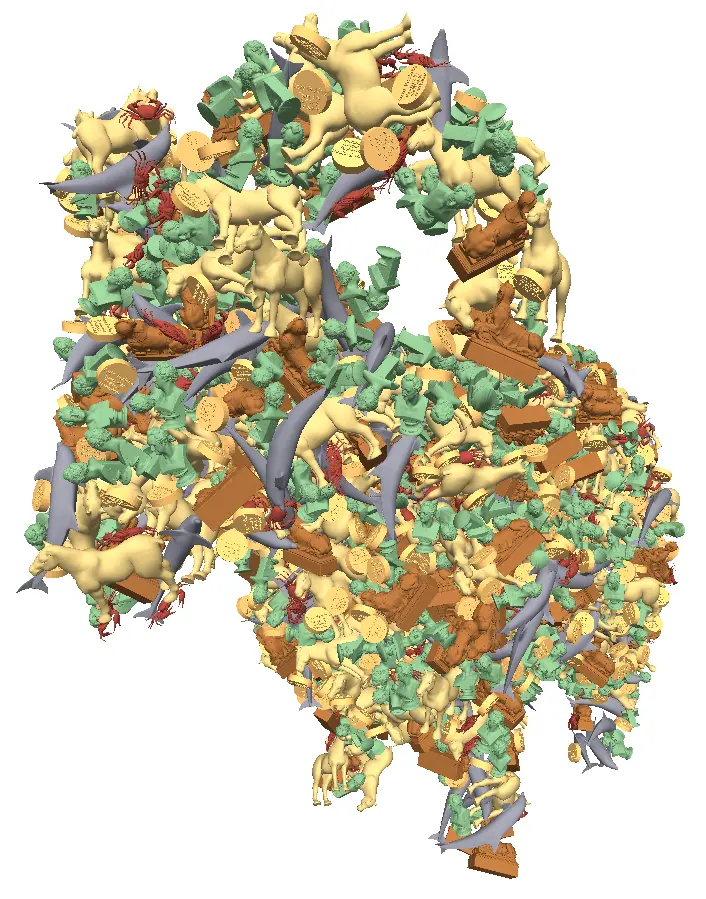

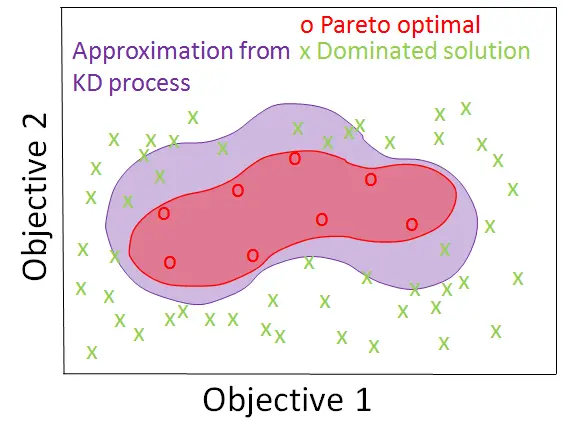

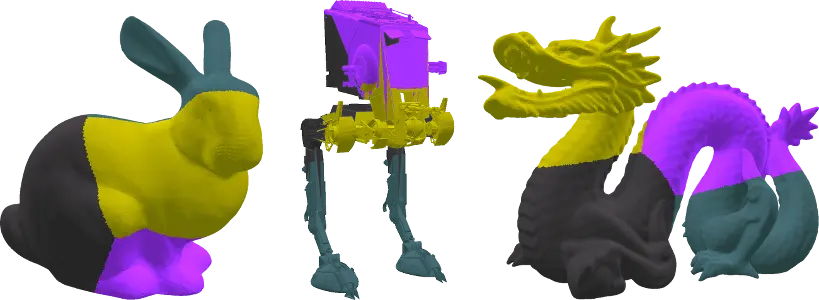

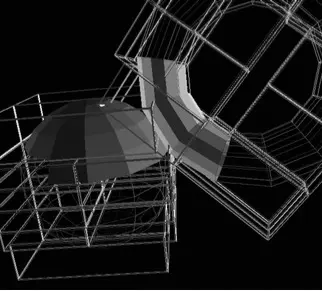

Multi-Objective Packing of 3D Objects into Arbitrary Containers

Hermann Meißenhelter, René Weller and Gabriel Zachmann

We focus on the relatively underexplored task of packing a set of arbitrary 3D objects—drawn from a predefined distribution—into a single arbitrary 3D container. We simultaneously optimize two potentially conflicting objectives: maximizing the packed volume and maintaining sufficient spacing among objects of the same type to prevent clustering. We present an algorithm to compute solutions to this challenging problem heuristically. Our approach is a flexible two-tier pipeline that computes and refines an initial arrangement. Our results confirm that this approach achieves dense packings across various objects and container shapes.

Published in:

Eurographics 2025 - Short Papers (EG Digital Library)

Files:

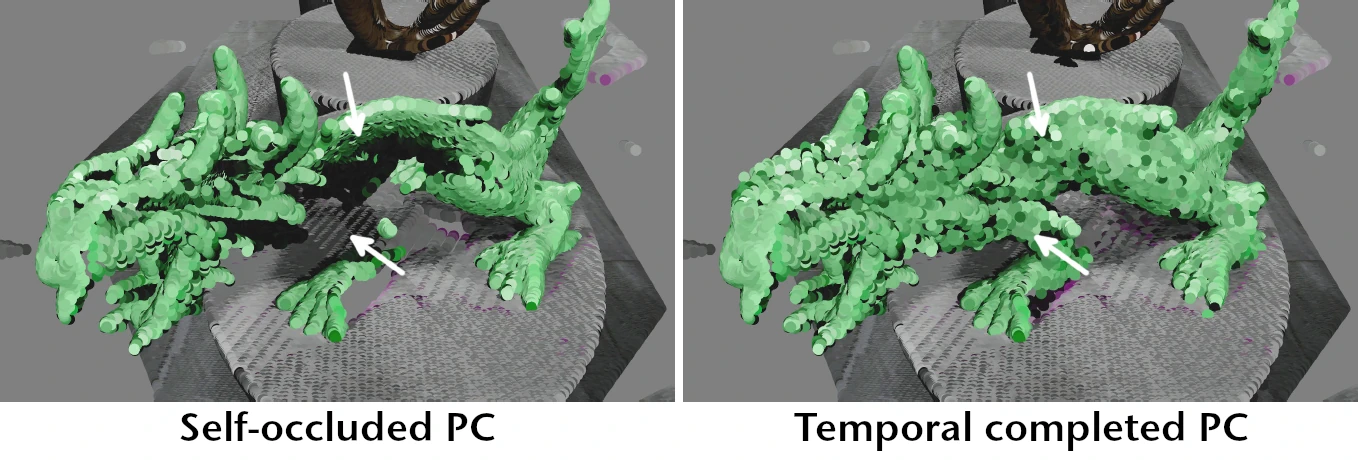

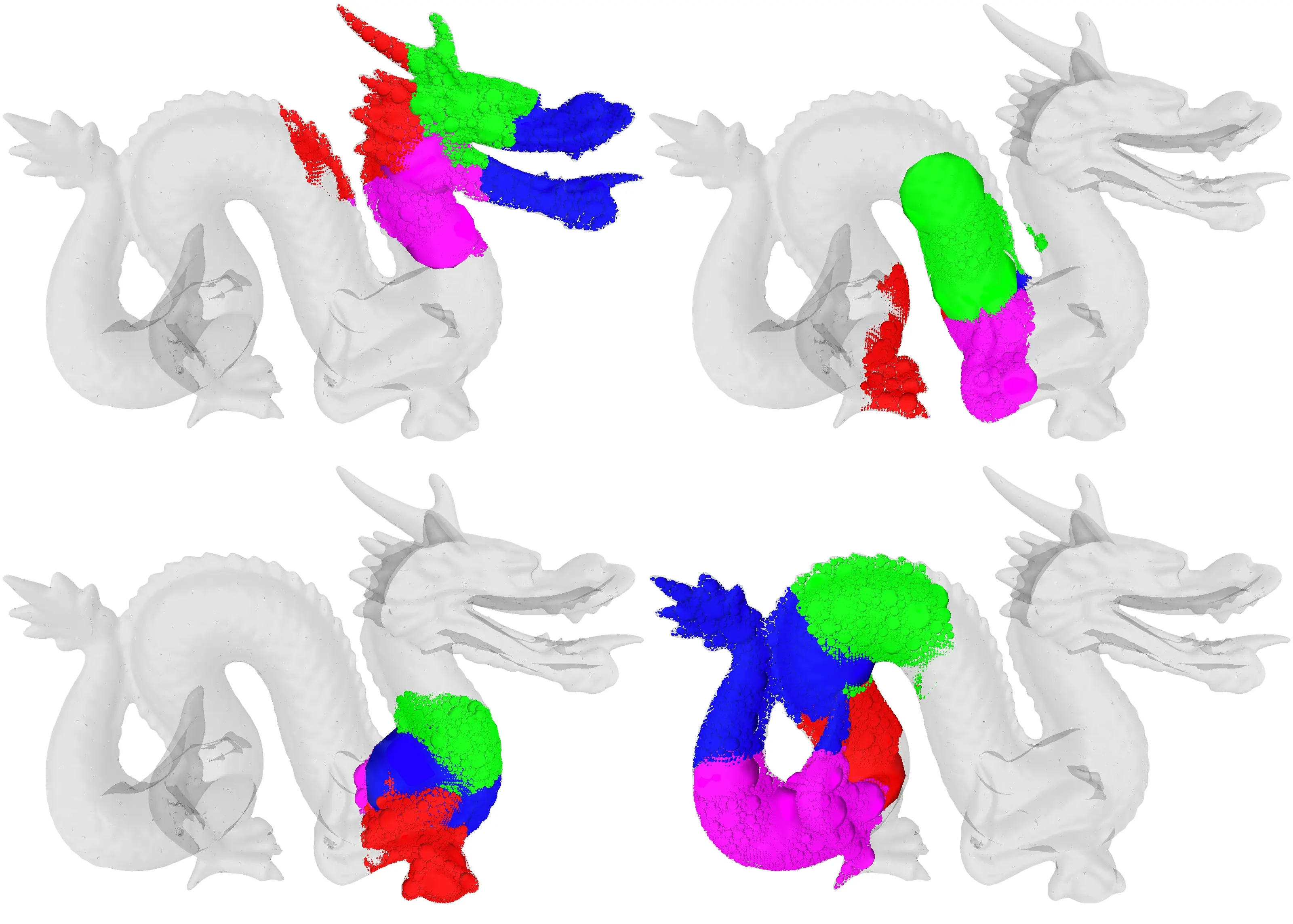

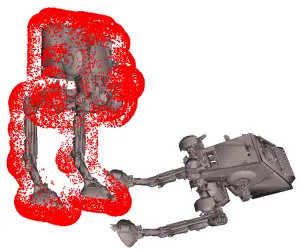

TemPCC: Completing Temporal Occlusions in Large Dynamic Point Clouds captured by Multiple RGB-D Cameras

Andre Mühlenbrock, René Weller and Gabriel Zachmann

We present TemPCC, an approach to complete temporal occlusions in large dynamic point clouds. Our method manages a point set over time, integrates new observations into this set, and predicts the motion of occluded points based on the flow of surrounding visible ones. Unlike existing methods, our approach efficiently handles arbitrarily large point sets with linear complexity, does not reconstruct a canonical representation, and considers only local features. Our tests, performed on an Nvidia GeForce RTX 4090, demonstrate that our approach can complete a frame with 30,000 points in under 30 ms, while, in general, being able to handle point sets exceeding 1,000,000 points. This scalability enables the mitigation of temporal occlusions across entire scenes captured by multi-RGB-D camera setups. Our initial results demonstrate that self-occlusions are effectively completed and successfully generalized to unknown scenes despite limited training data.

Source Code: https://www.github.com/muehlenb/tempcc

Published in:

Eurographics 2025 - Short Papers (EG Digital Library)

Files:

Paper

Supplementary Material

Slides

Video

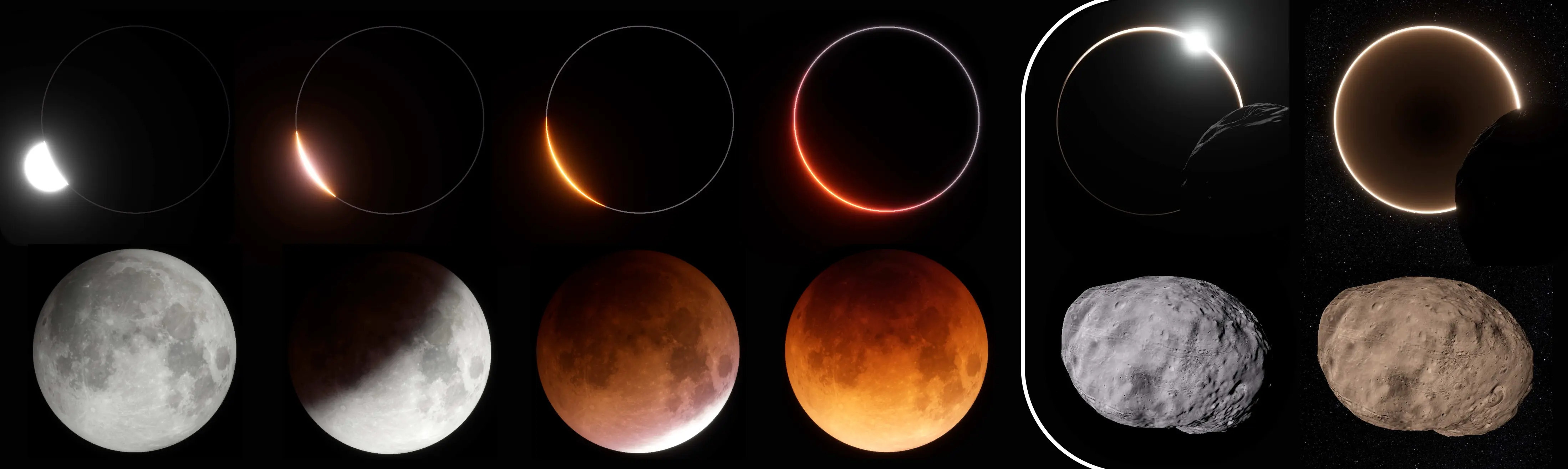

Physically Based Real-Time Rendering of Eclipses

Simon Schneegans, J. Gilg, V. Ahlers, Gabriel Zachmann, Andreas Gerndt

We present a novel approach for simulating eclipses, incorporating effects of light scattering and refraction in the occluder’s atmosphere. Our approach not only simulates the eclipse shadow, but also allows for watching the Sun being eclipsed by the occluder. The latter is a spectacular sight which has never been seen by human eyes: For an observer on the lunar surface, the atmosphere around Earth turns into a glowing red ring as sunlight is refracted around the planet. To simulate this, we add three key contributions: First, we extend the Bruneton atmosphere model to simulate refraction. This allows light rays to be bent into the shadow cone. Refraction also adds realism to the atmosphere as it deforms and displaces the Sun during sunrise and sunset. Second, we show how to precompute the eclipse shadow using this extended atmosphere model. Third, we show how to efficiently visualize the glowing atmosphere ring around the occluder. Our approach produces visually accurate results suited for scientific visualizations, science communication, and video games. It is not limited to the Earth-Moon system, but can also be used to simulate the shadow of Mars and potentially other bodies. We demonstrate the physical soundness of our approach by comparing the results to reference data. Because no data is available for eclipses beyond the Earth-Moon system, we predict what an eclipse on a Martian moon will look like. Our implementation is available under the terms of the MIT license.

Published in:

Computer Graphics Forum (Eurographics), 2025

Journal article

Files:

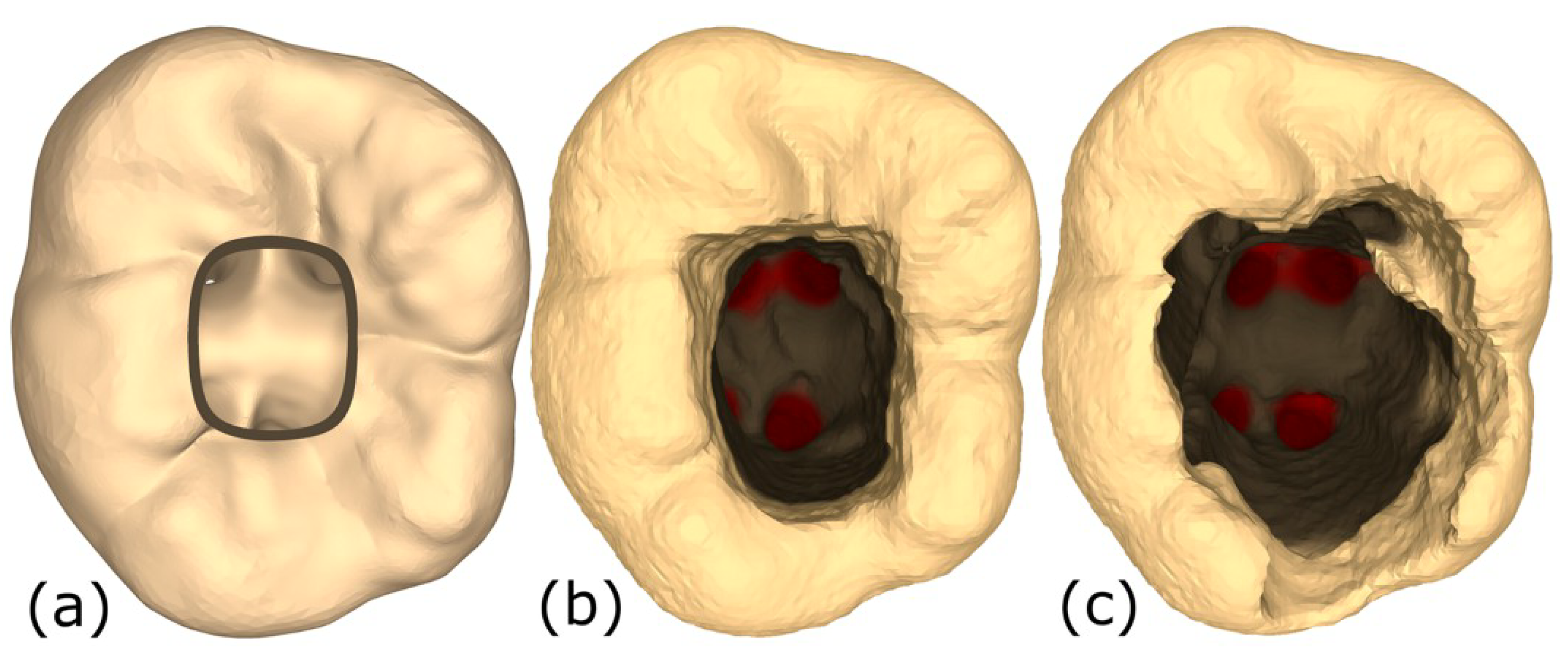

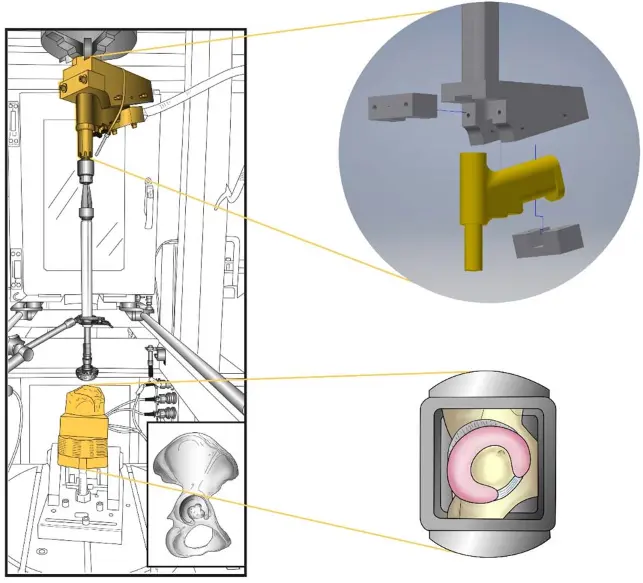

HIPS – A Surgical Virtual Reality Training System for Total Hip Arthroplasty (THA) with Realistic Force Feedback

Mario Lorenz, Maximilian Kaluschke, Annegret Melzer, Nina Pillen, Magdalena Sanrow, Andrea Hoffmann, Dennis Schmidt, André Dettmann, Angelika C. Bullinger, Jérôme Perret, Gabriel Zachmann

Virtual reality training simulations to acquire surgical skills are important for increasing patient safety and saving valuable resources, e.g., cadavers, supervision, and operating room time. However, as surgery is a craft, simulators must not only provide a high degree of visual realism, but especially realistic haptic behavior. While such simulators exist for surgeries like laparoscopy or arthroscopy, other surgical fields, especially where large forces need to be exerted, like total hip arthroplasty (THA; implantation of a hip joint prosthesis), lack realistic VR training simulations. In this paper we present for the first time a novel VR training simulation for the five steps of THA (from femur head resection to stem implantation) with realistic haptic feedback. To achieve this, a novel haptic hammering device, an upgraded version of the Virtuose 6D haptic device from Haption, and novel algorithms for collision detection, haptic rendering, and material removal are introduced. In a study with 17 surgeons of diverse experience levels, we confirmed the realism, usefulness, and usability of our novel methods.

Published in:

IEEE Transactions on Visualization and Computer Graphics (2025 IEEE Conference on Virtual Reality and 3D User Interfaces, Saint-Malo, France, March 8-12, 2025) (Honorable Mention)

Files:

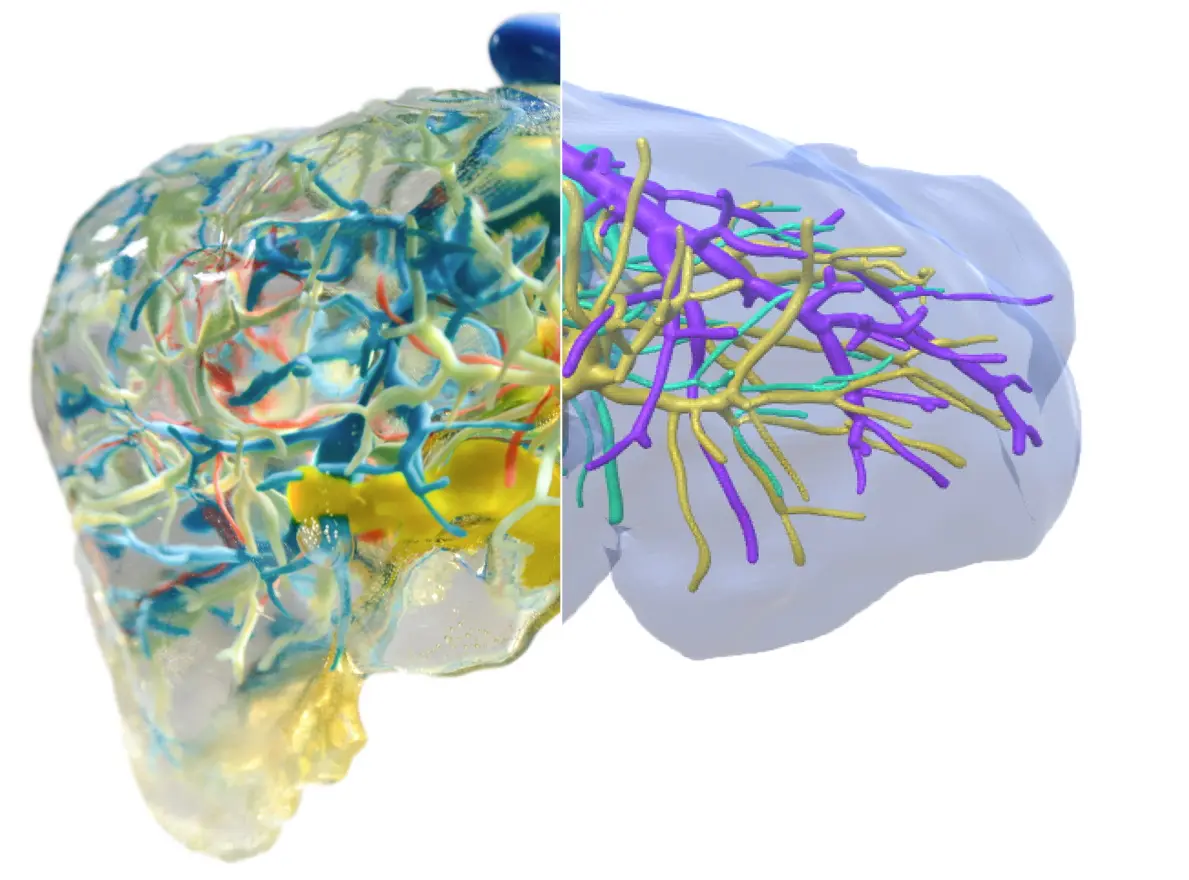

Von der Bildgebung zur Interaktion mit 3D-Modellen: technische Aspekte

Andrea Schenk, Alexander Kluge, Sirko Pelzl, Gabriel Zachmann, Rainer Malaka

Augmented and virtual reality (AR and VR, respectively) are already being used or trialed in several medical fields. However, widespread adoption is still hindered by definitions of terms that are inconsistent and, for people who are not familiar with current developments, often confusing. In addition, the technical foundations and requirements for their use are often not well enough known. This review article therefore aims to explain the most important terms and to present the current state of the technology, covering the range from the requirements of medical imaging, through 3D models and the various kinds of visualization, to the possibilities for interaction in VR and AR. This is intended to help developers and users speak a common language in the future and to fully exploit the potential of digital assistive technologies.

Published in:

Die Chirurgie, 12/2024

Journal article

Table of contents of the journal.

Files:

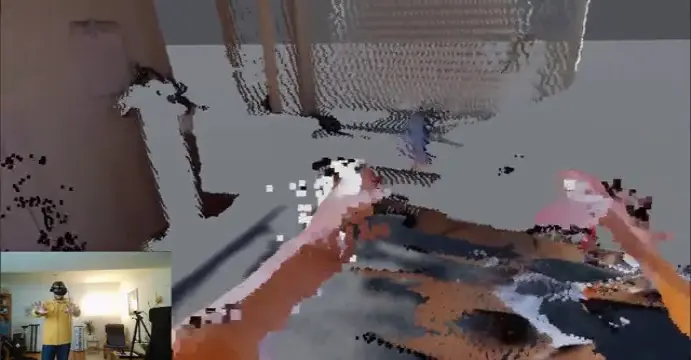

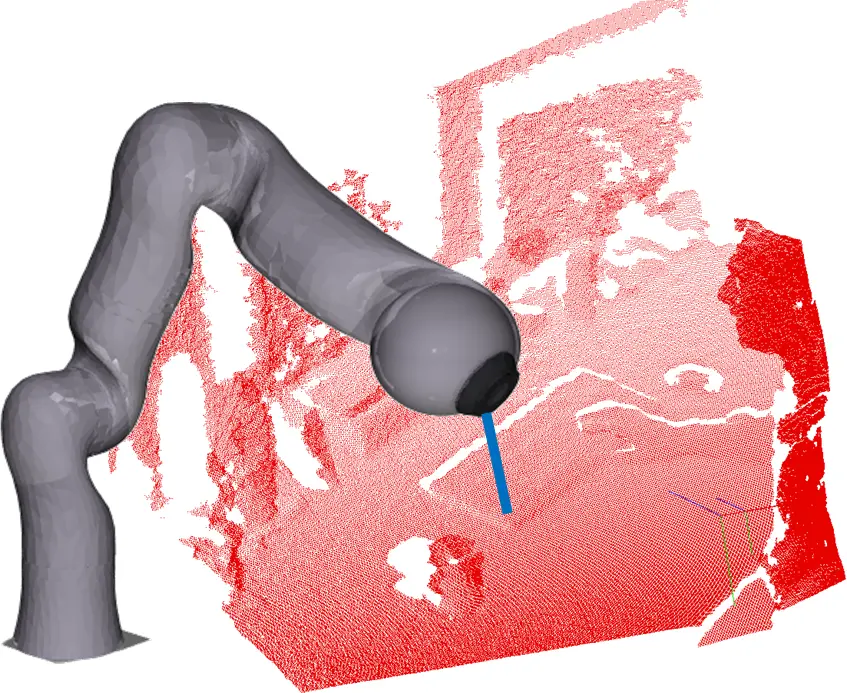

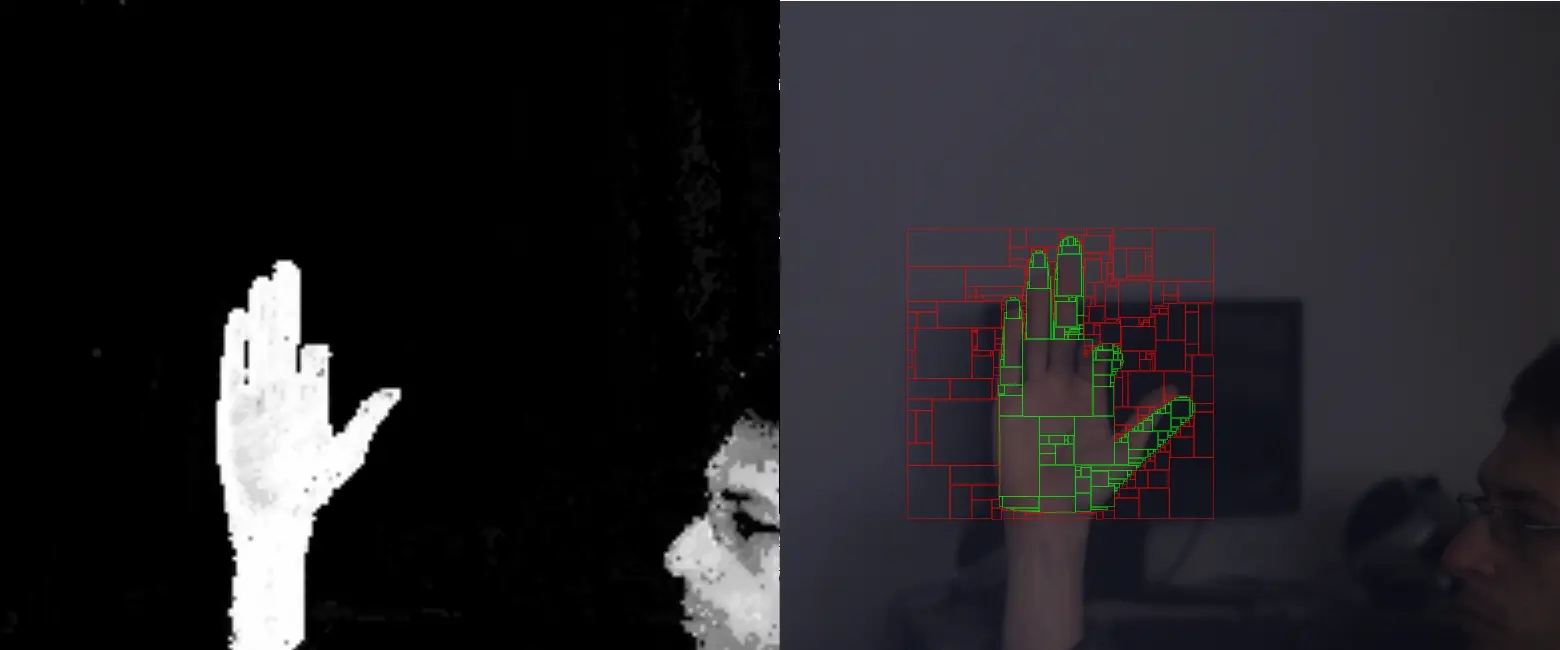

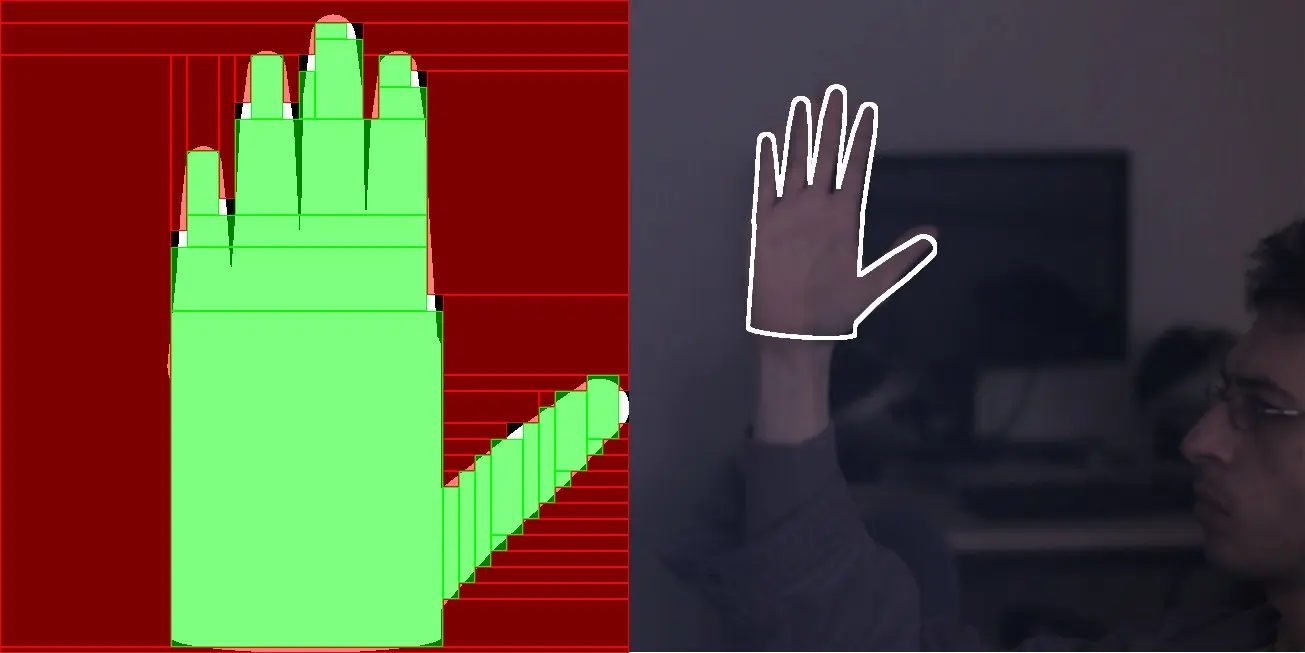

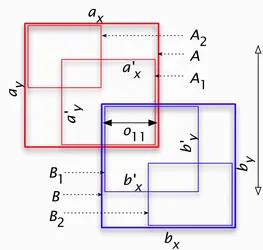

BlendPCR: Seamless and Efficient Rendering of Dynamic Point Clouds captured by Multiple RGB-D Cameras

Andre Mühlenbrock, Rene Weller, Gabriel Zachmann

Traditional techniques for rendering continuous surfaces from dynamic, noisy point clouds using multi-camera setups often suffer from disruptive artifacts in overlapping areas, similar to z-fighting. We introduce BlendPCR, an advanced rendering technique that effectively addresses these artifacts through a dual approach of point cloud processing and screen space blending. Additionally, we present a UV coordinate encoding scheme to enable high-resolution texture mapping via standard camera SDKs. We demonstrate that our approach offers superior visual rendering quality over traditional splat and mesh-based methods and exhibits no artifacts in those overlapping areas, which still occur in leading-edge NeRF and Gaussian Splat based approaches like Pointersect and P2ENet. In practical tests with seven Microsoft Azure Kinects, processing, including uploading the point clouds to GPU, requires only 13.8 ms (when using one color per point) or 29.2 ms (using high-resolution color textures), and rendering at a resolution of 3580 x 2066 takes just 3.2 ms, proving its suitability for real-time VR applications.

Source Code: https://www.github.com/muehlenb/blendpcr

Published in:

ICAT-EGVE 2024, Tsukuba, Japan, December 01-03, 2024 (Best Paper Award)

Files:

Paper

Supplementary Material

Slides

Video

Virtual Reality and Mixed Reality - 21st EuroXR International Conference, EuroXR 2024

Arcadio Reyes-Lecuona, Gabriel Zachmann, Monica Bordegoni, Jian Chen, Giannis Karaseitanidis, Alain Pagani, Patrick Bourdot

21st EuroXR International Conference, EuroXR 2024, Athens, Greece, November 27–29, 2024, Proceedings

This book constitutes the refereed proceedings of the 21st International Conference on Virtual Reality and Mixed Reality, EuroXR 2024, held in Athens, Greece, during November 27–29, 2024. The 14 full papers presented together with 1 short paper were carefully reviewed and selected from 47 submissions. The papers are grouped into the following topics: Designing Experiences, Human Factors, Rendering and Visualization, Interaction Techniques, and Education and Training. EuroXR aims to foster engagement between European industries, academia, and the public sector, to promote the development and deployment of XR techniques in new and emerging, but also in existing fields.

Published in:

21st EuroXR International Conference, EuroXR 2024, Athens, Greece, November 27–29, 2024, Proceedings.

Embodiment in Virtual Environments - Analyzing the Effects of Latency and Avatar Representation

Niklas Bockelmann, Roland Fischer, Gabriel Zachmann

Having realistic and expressive avatars is crucial for VR applications, as they lead to a stronger sense of embodiment and presence. However, they also lead to increased system latency, which can cause several negative effects. We conducted a user study to investigate the (interaction) effects of avatar representation/quality and latency on embodiment, task efficiency, and cybersickness in VR. Specifically, we compared a high-quality, personalized point cloud avatar with a lower quality pre-modeled mesh avatar and latency settings between 150 and 300 ms. We found that the avatar quality had a greater effect on all components of embodiment than latency, and that the perception of the latter was influenced by the avatar representation. High-quality avatars were consistently and significantly rated superior and led to a less severe perception of latency. In contrast, avatar type and latency level had little effect on task efficiency and no notable one on cybersickness.

Published in:

International Conference on Cyberworlds (CW24), Kofu, Yamanashi, Japan, October 29-31, 2024

Files:

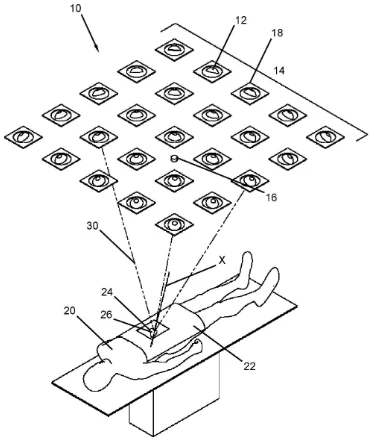

A Novel, Autonomous, Module-Based Surgical Lighting System

Andre Mühlenbrock, Hendrik Huscher, Verena Uslar, Timur Cetin, Rene Weller, Dirk Weyhe, Gabriel Zachmann

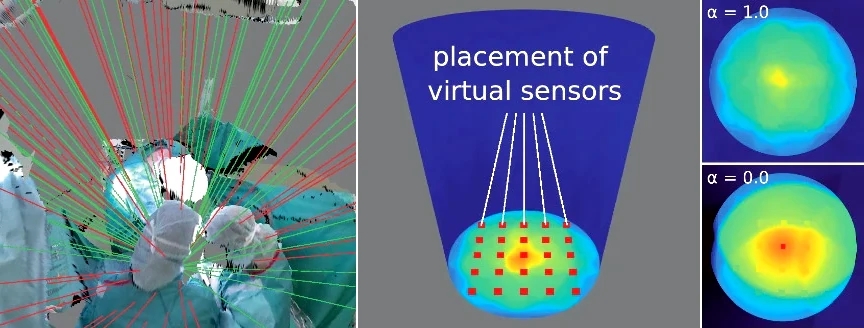

Optimal illumination of the surgical site is crucial for successful surgeries. Current lighting systems, however, suffer from significant drawbacks, particularly shadows cast by surgeons and operating room personnel. We introduce an innovative, module-based lighting system that actively prevents shadows using an array of swiveling, ceiling-mounted light modules. The intensity and orientation of these modules are autonomously controlled by novel algorithms utilizing multiple depth sensors mounted above the operating table. This paper presents our complete system, detailing the algorithms for autonomous control and the initial optimization of the light module setup. Unlike prior work that was largely conceptual and based on simulations, this study introduces a real prototype featuring 56 light modules and three depth sensors. We evaluate this prototype through measurements, semi-structured interviews (n=4), and an extensive quantitative user study (n=11). The evaluation focuses on illumination quality, shadow elimination, and suitability for open surgeries compared to conventional OR lights. Our results demonstrate that the novel lighting system and optimization algorithms outperform conventional OR lights for abdominal surgeries, according to both objective measures and subjective ratings by surgeons.

Published in:

ACM Transactions on Computing for Healthcare, Volume 6, Issue 1, January 2025.

Files:

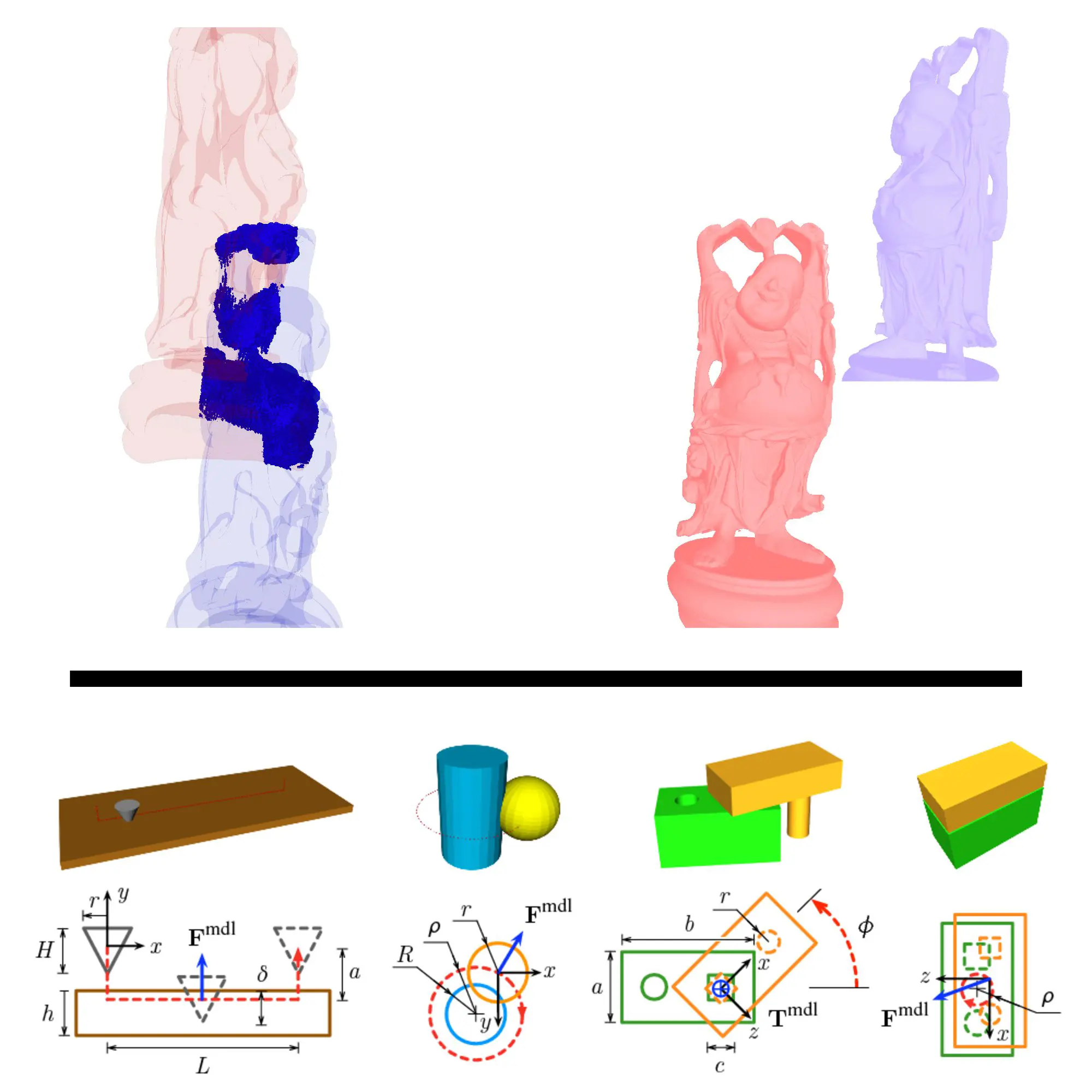

Uncertain Physics for Robot Simulation in a Game Engine

Hermann Meißenhelter, Rene Weller, Gabriel Zachmann

Physics simulations are crucial for domains like animation and robotics, yet they are limited to deterministic simulations with precise knowledge of initial conditions. We introduce a surrogate model for simulating rigid bodies with positional uncertainty (Gaussian) and use a non-uniform sphere hierarchy for object approximation. Our model outperforms traditional sampling-based methods by several orders of magnitude in efficiency while achieving similar outcomes.

Published in:

40th Anniversary of the IEEE Conference on Robotics and Automation (ICRA@40), Rotterdam, Netherlands, September 23-26, 2024

Files:

Extended Abstract

Poster

Video

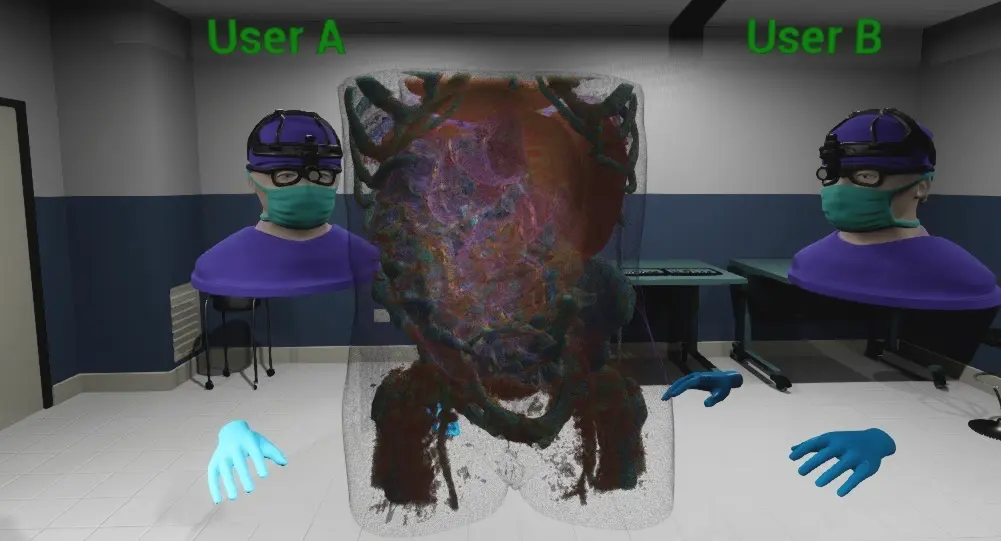

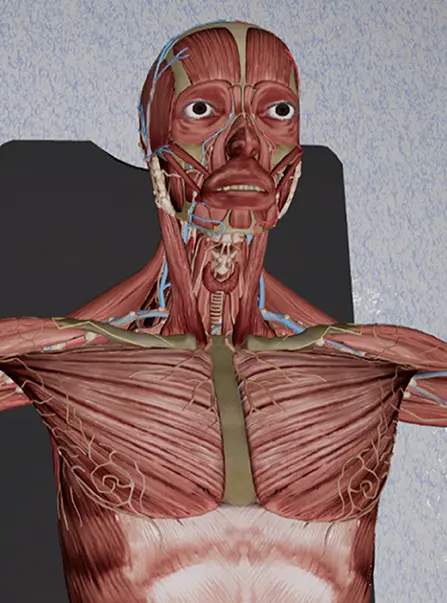

Enhancing Anatomy Learning Through Collaborative VR? An Advanced Investigation

Haya Almaree, Roland Fischer, Rene Weller, Verena Uslar, Dirk Weyhe, Gabriel Zachmann

Common techniques for anatomy education in medicine include lectures and cadaver dissection, as well as the use of replicas. However, recent advances in virtual reality (VR) technology have led to the development of specialized VR tools for teaching, training, and other purposes. The use of VR technology has the potential to greatly enhance the learning experience for students. These tools offer highly interactive and engaging learning environments that allow students to inspect and interact with virtual 3D anatomical structures repeatedly, intuitively, and immersively. Additionally, multi-user VR environments can facilitate collaborative learning, which has the potential to enhance the learning experience even further. However, the effectiveness of collaborative learning in VR has not been adequately explored. Therefore, we conducted two user studies, each with n = 33 participants, to evaluate the effectiveness of virtual collaboration in the context of anatomy learning, and compared it to individual learning. For our two studies, we developed a multi-user VR anatomy learning application using UE4. Our results demonstrate that our VR Anatomy Atlas offers an engaging and effective learning experience for anatomy, both individually and collaboratively. However, we did not find any significant advantages of collaborative learning in terms of learning effectiveness or motivation, despite the multi-user group spending more time in the learning environment. In fact, motivation tended to be slightly lower. Although the usability was rather high for the single-user condition, it tended to be lower for the multi-user group in one of the two studies, which may have had a slightly negative effect. However, in the second study, the usability scores were similarly high for both groups. The absence of advantages for collaborative learning may be due to the more complex environment and higher cognitive load. In consequence, more research into collaborative VR learning is needed to determine the relevant factors promoting collaborative learning in VR and the settings in which individual or collaborative learning in VR is more effective, respectively.

Published in:

Computers & Graphics, 2024

Journal article

Files:

Temporal Hierarchical Gaussian Mixture Models for Real-Time Point Cloud Streaming

Roland Fischer, Tobias Gels, Rene Weller, Gabriel Zachmann

Point clouds play an important role in robotics, autonomous driving, and telepresence applications with typical tasks such as SLAM and scene/avatar reconstruction. However, noisy sensor data, huge data loads, and inhomogeneous densities make efficient processing and accurate representation challenging, especially for real-time and streaming-based applications. We present a novel approach for compact point cloud representation and real-time streaming using a temporal hierarchical GMM-based generative model. Our level-based construction scheme allows us to dynamically adjust the maximum LOD and progressively transmit and render more detailed levels. We minimize the construction cost by exploiting the temporal coherence between consecutive frames. Combined with our highly parallelized and optimized CUDA implementation, we achieve real-time speeds with high-fidelity reconstructions. Our results show that we achieve significantly higher compression factors than previous work with similar accuracy, and that the temporal approach saves 20-36% construction time in our test scene.

Published in:

SIGGRAPH Posters, Denver, CO, USA, July 28 - August 01, 2024

Files:

Extended Abstract (preprint)

Poster

Movie

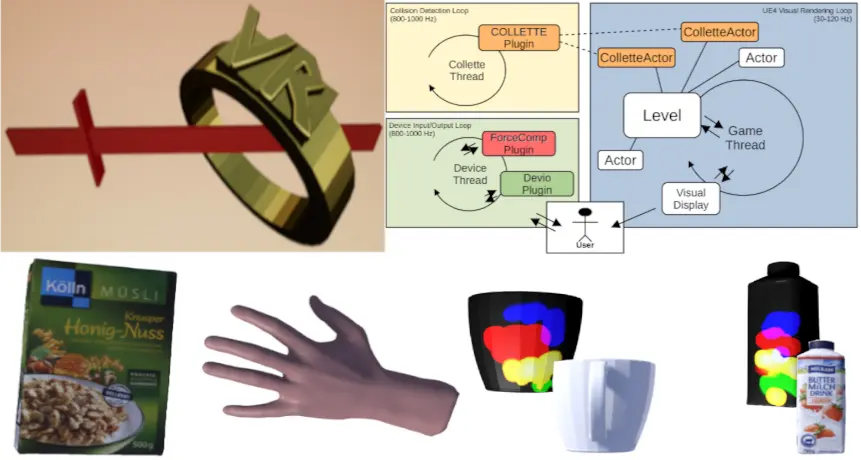

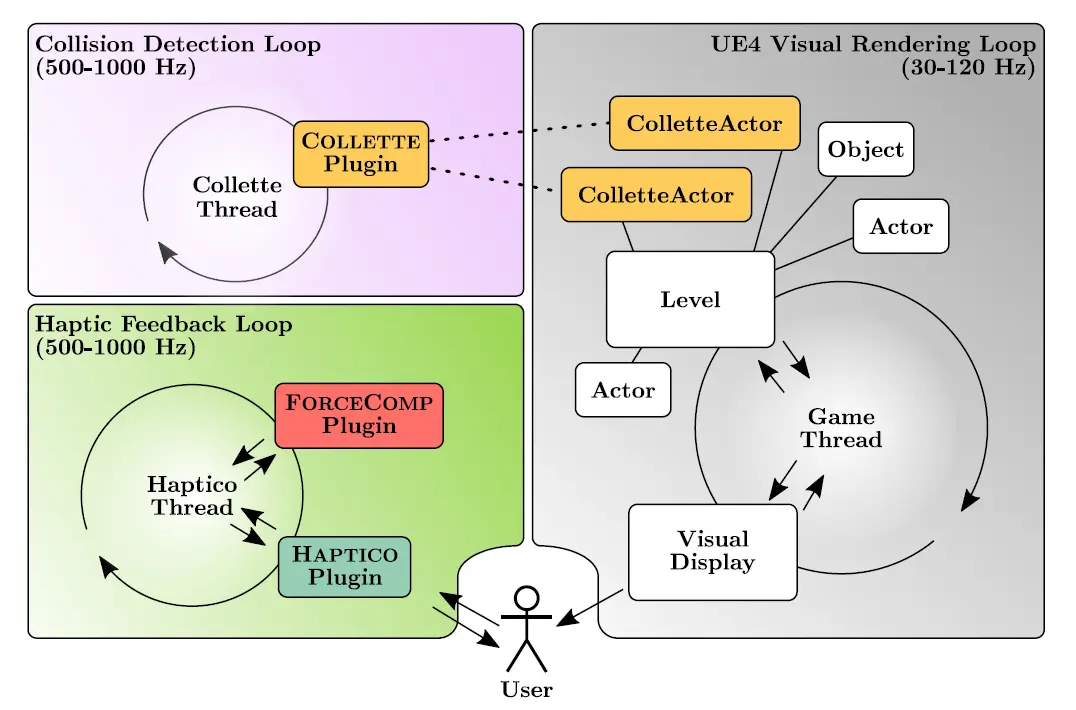

Immersive Medical VR Training Simulators with Haptic Feedback

Maximilian Kaluschke

Virtual reality and haptic feedback technologies are revolutionizing medical training, especially in orthopedic and dental surgery. These technologies create virtual simulators that offer a risk-free environment for skill development, addressing ethical concerns of traditional patient-based training. The challenge is to make simulators immersive, realistic, and effective in skill transfer to real-world scenarios. This dissertation presents a modular VR-based, haptic-enabled physics simulation system designed to meet these challenges. It features continuous, realistic 6 degrees-of-freedom force feedback with material removal capabilities, enhancing interaction with virtual anatomical structures and tools. Novel algorithms for collision detection, force rendering, and volumetric representation improve the realism and performance of VR haptic simulators. These algorithms were implemented in a versatile library compatible with various game engines, haptic devices, and virtual tools. Two advanced medical training simulators demonstrate this library: one for total hip arthroplasty and another for dental procedures like root canal treatment and caries removal. Enhanced with features like automated VR registration, sound synthesis, VR zoom, and eye tracking, these simulators significantly impact learning and skill transfer to real-life procedures. Expert evaluations and studies with dental students show substantial improvements in real-world skills after using the simulators. The research highlights the importance of hand-tool alignment and stereopsis in learning outcomes and provides new insights into dental training behaviors and the use of indirect vision. This work advances VR and haptic technology in medical training, offering tools that improve training efficiency and effectiveness, ultimately enhancing patient care and treatment outcomes.

Published in:

Staats- und Universitätsbibliothek Bremen, July 2024.

Files:

Dissertation

Slides

Talk (YouTube)

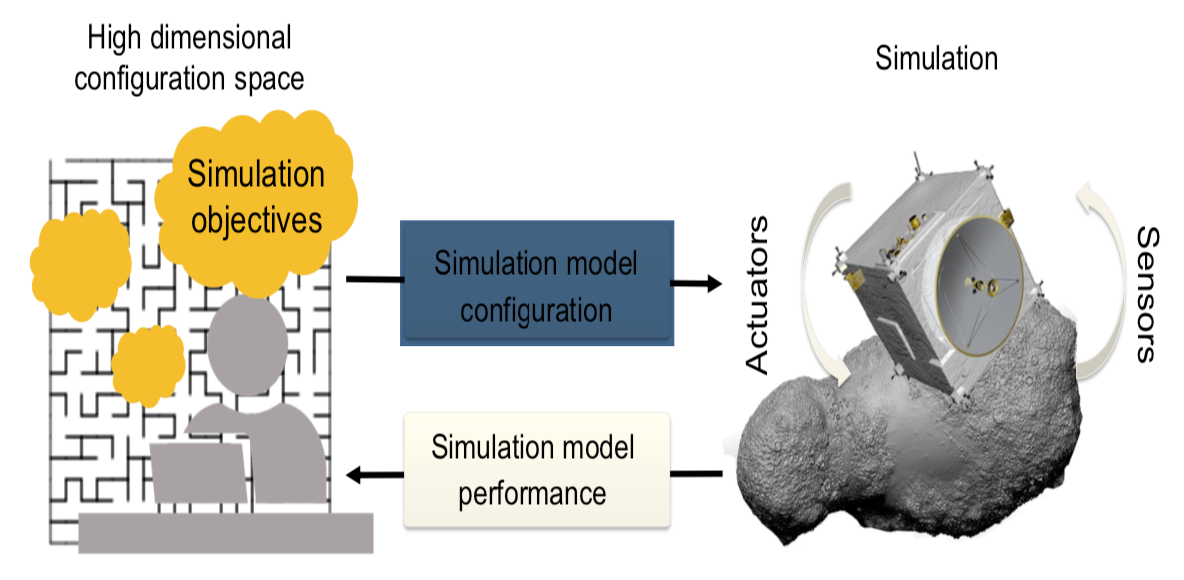

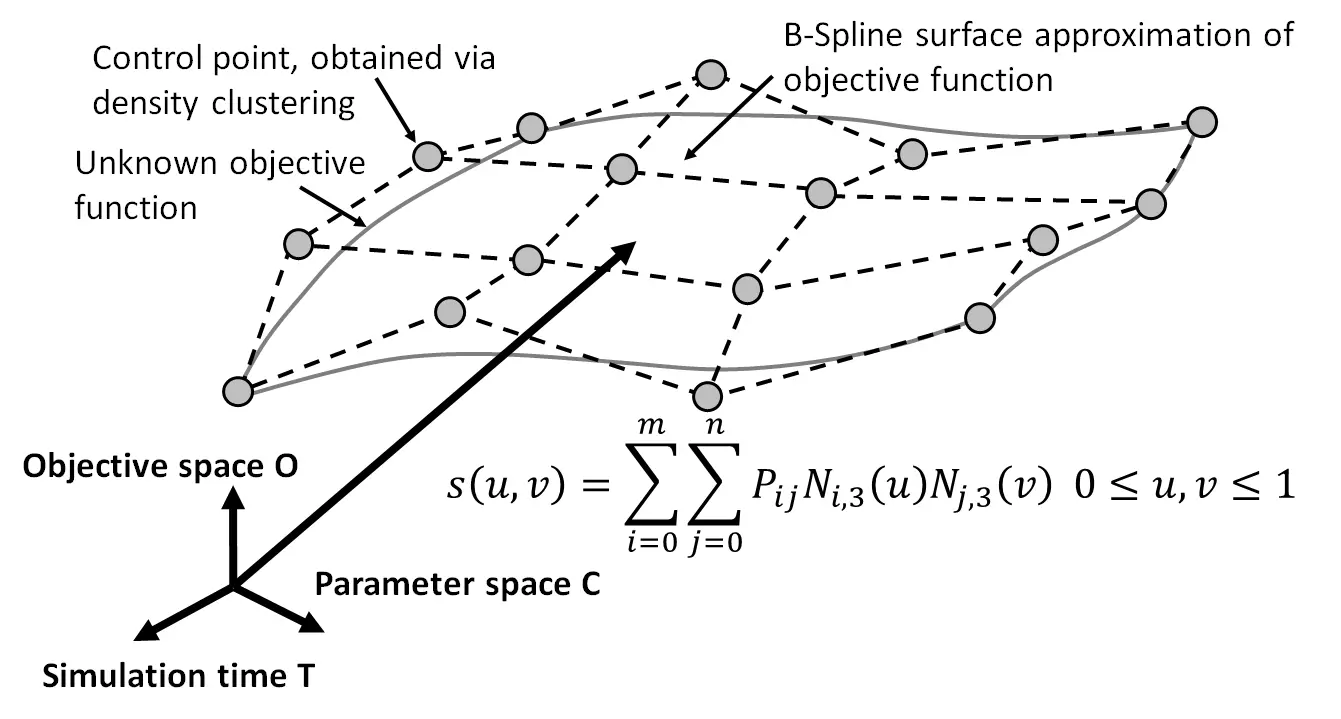

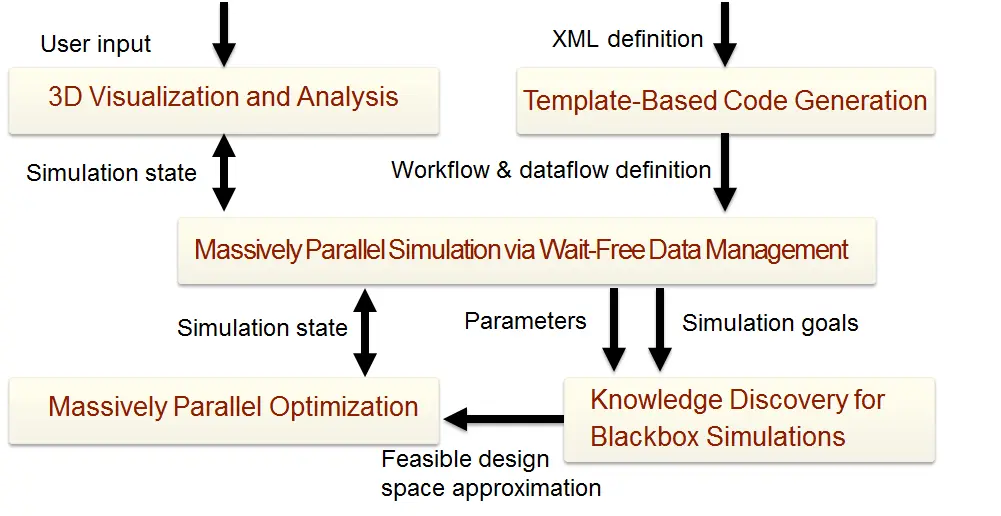

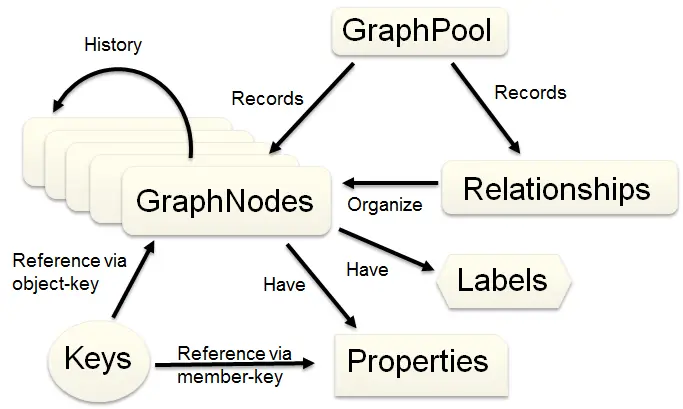

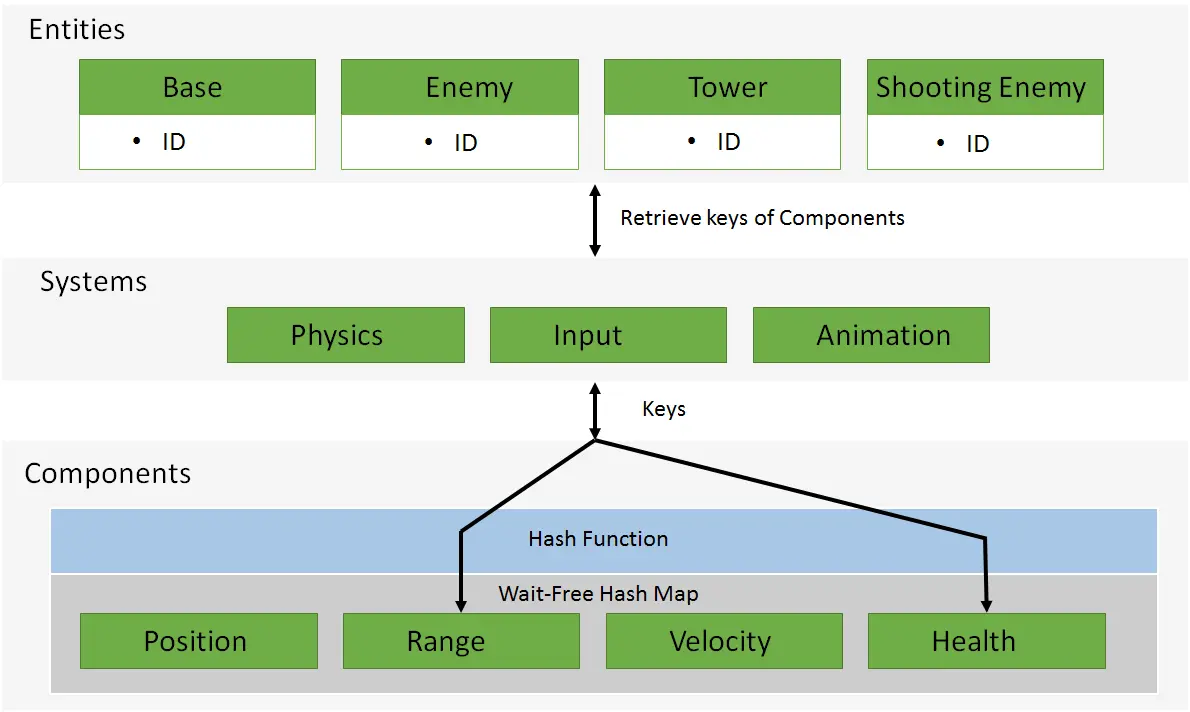

Geometric Computing for Simulation-Based Robot Planning

Toni Tan

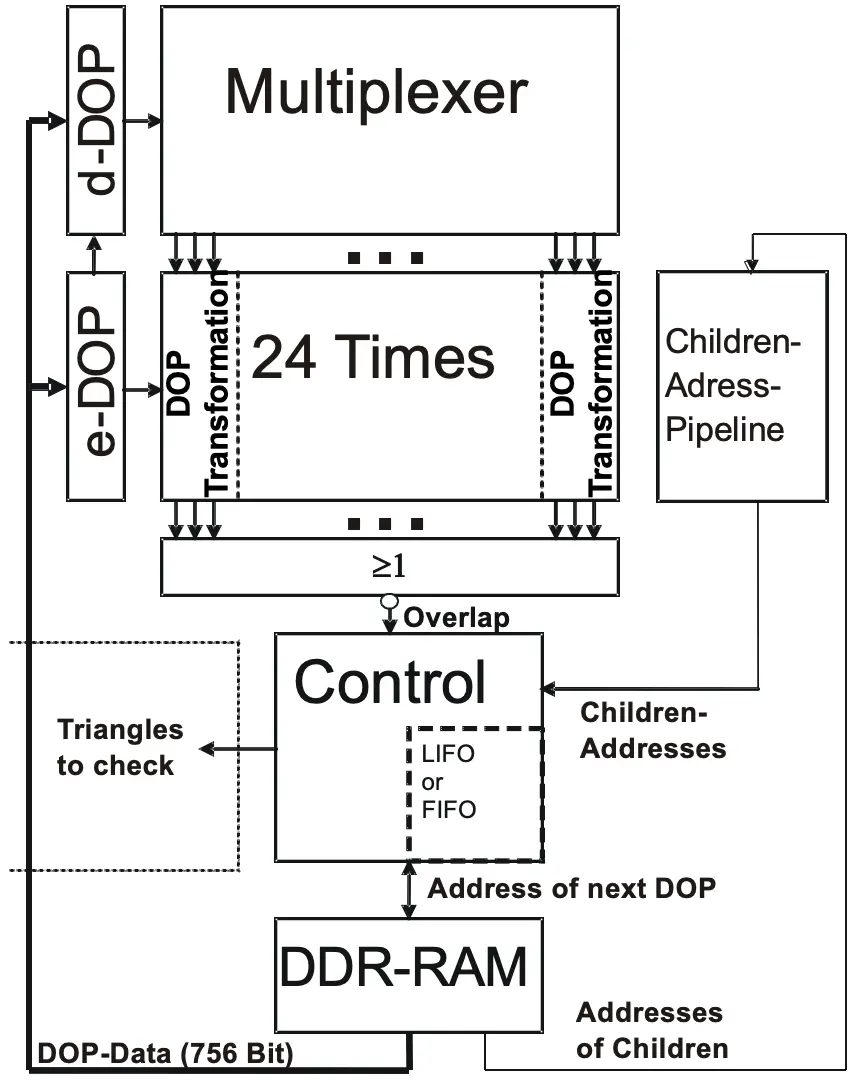

Simulation-based robot planning is a popular approach in robotics that involves using computer simulations to plan and optimize robot motions by envisioning the outcome of generated plans before their execution in the real world. This approach offers several benefits, including the ability to evaluate multiple motion plans, reduce trial-and-error in physical experimentation, and enhance safety by identifying potential collisions and other hazards before executing a motion. Although this approach can significantly benefit robotic manipulation tasks, such simulations are still computationally expensive and may require more computing power than the robotic agents can provide. In addition, uncertainties arising from, e.g., perception or simulation models must be taken into account. Current approaches often require running simulations multiple times with varying parameters to account for these uncertainties, making real-time action planning and execution difficult. This thesis presents accelerated geometric computations, namely collision detection (CD) methods, for such simulations; more precisely, an algorithm based on bounding volume hierarchies (BVHs) and SIMD instruction sets. The main idea is to increase the branching factor of the BVH according to the available SIMD width and to test several BV nodes for intersection in parallel. In addition, this thesis presents compression strategies for BVH-based CD, implemented on two existing CD algorithms, namely Doptree and Boxtree. The idea is to remove redundant information from BVHs and to compress the 32-bit floating-point numbers used to represent BVHs. This greatly increases the number of simulations that robotic agents can run in parallel, with remote robots benefitting most, as their computing power is often limited. Furthermore, this thesis presents the idea of benchmarking as an online service. In the literature, the results of proposed algorithms are often difficult to replicate due to missing hardware/software and different computing configurations. Combining this with the idea of using a virtual machine to safely execute user-uploaded algorithms makes it possible to safely run benchmarks as an online service. Not only are the results reproducible, but they are also comparable, as they are obtained within the same hardware/software configuration. Finally, this thesis investigates an idea to address uncertainties by incorporating them into simulations. The main concept is to integrate uncertainty as a probability distribution into CD algorithms. In this sense, CD algorithms will not only report collisions but also the probability that a collision occurs. The outcome is not a simple final state of the simulation but rather a probability map reflecting a continuous distribution of final states.

Published in:

Staats- und Universitätsbibliothek Bremen, July 2024.

Files:

Physically Based Real-Time Rendering of Atmospheres using Mie Theory

Simon Schneegans, Tim Meyran, Ingo Ginkel, Gabriel Zachmann, Andreas Gerndt

Most real-time rendering models for atmospheric effects have been designed and optimized for Earth's atmosphere. Some authors have proposed approaches for rendering other atmospheres, but these methods still use approximations that are only valid on Earth. For instance, the iconic blue glow of Martian sunsets cannot be represented properly, as the complex interference effects of light scattered by dust particles cannot be captured by these approximations. In this paper, we present an approach for generalizing an existing model to make it capable of rendering extraterrestrial atmospheres. This is done by replacing the approximations with a physical model based on Mie Theory. We use the particle-size distribution, the particle-density distribution, and the wavelength-dependent refractive index of atmospheric particles as input. To demonstrate the feasibility of this idea, we extend the model by Bruneton et al. [BN08] and implement it in CosmoScout VR, an open-source visualization of our Solar System. In a first step, we use Mie Theory to precompute the scattering behaviour of a particle mixture. Then, multi-scattering is simulated, and finally the precomputation results are used for real-time rendering. We demonstrate that this not only improves the visualization of the Martian atmosphere, but also creates more realistic results for our own atmosphere.

Published in:

Computer Graphics Forum (Eurographics), 2024

Journal article

Files:

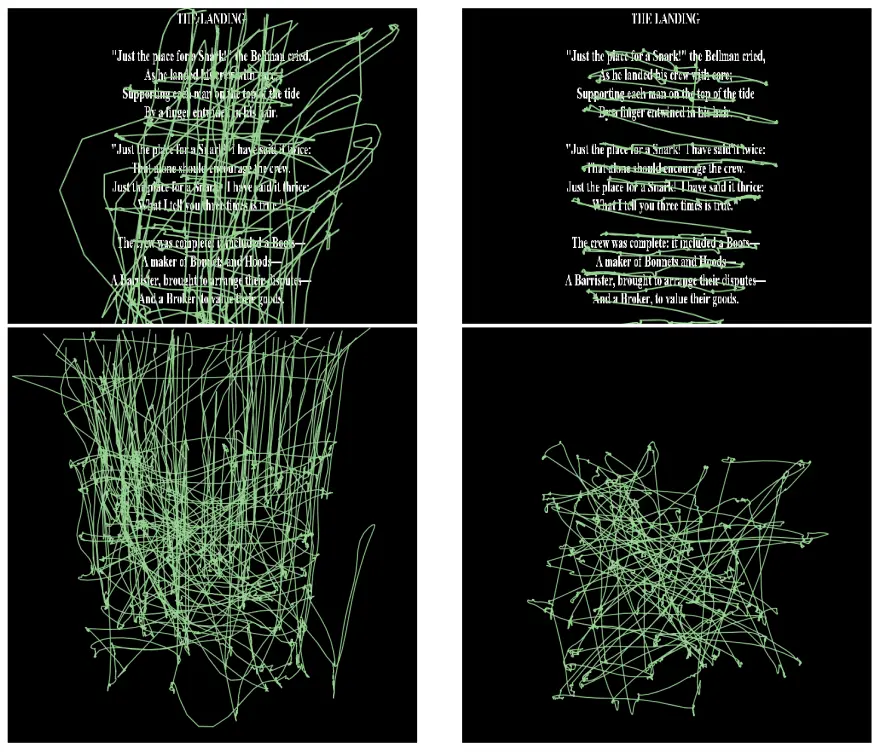

Reflecting on Excellence: VR Simulation for Learning Indirect Vision in Complex Bi-Manual Tasks

Maximilian Kaluschke, Rene Weller, Myat Su Yin, Benedikt W. Hosp, Farin Kulapichitr, Peter Haddawy, Siriwan Suebnukarn, Gabriel Zachmann

Indirect vision through a mirror, while bi-manually manipulating both the mirror and another tool, is a relatively common way to perform operations in various types of surgery. However, such psychomotor skills require extensive training, are difficult to teach, and can be quite costly to train, for instance, for dentistry schools. In order to study the effectiveness of VR simulators for learning these kinds of skills, we developed a simulator for training dental surgery procedures, which supports tracking of eye gaze and tool trajectories (mirror and drill), as well as automated outcome scoring. We carried out a pre-/post-test study in which 30 fifth-year dental students received six training sessions in the access opening stage of the root canal procedure using the simulator. In addition, six experts performed three trials using the simulator. The outcomes of drilling performed on realistic plastic teeth showed a significant learning effect due to the training sessions. Also, students with larger improvements in the simulator tended to improve more in the real-world tests. Analysis of the tracking data revealed novel relationships between several metrics w.r.t. eye gaze and mirror use, and performance and learning effectiveness: high rates of correct mirror placement during active drilling and high continuity of fixation on the tooth are associated with increased skills and increased learning effectiveness. Larger time allocation for tooth inspections using the mirror, i.e., indirect vision, and frequency of inspection are associated with increased learning effectiveness. Our findings suggest that eye tracking can provide valuable insights into student learning gains of bi-manual psychomotor skills, particularly in indirect vision environments.

Published in:

2024 IEEE Conference Virtual Reality and 3D User Interfaces (VR), Orlando, Florida, USA, March 16 - 21, 2024.

Files:

Links:

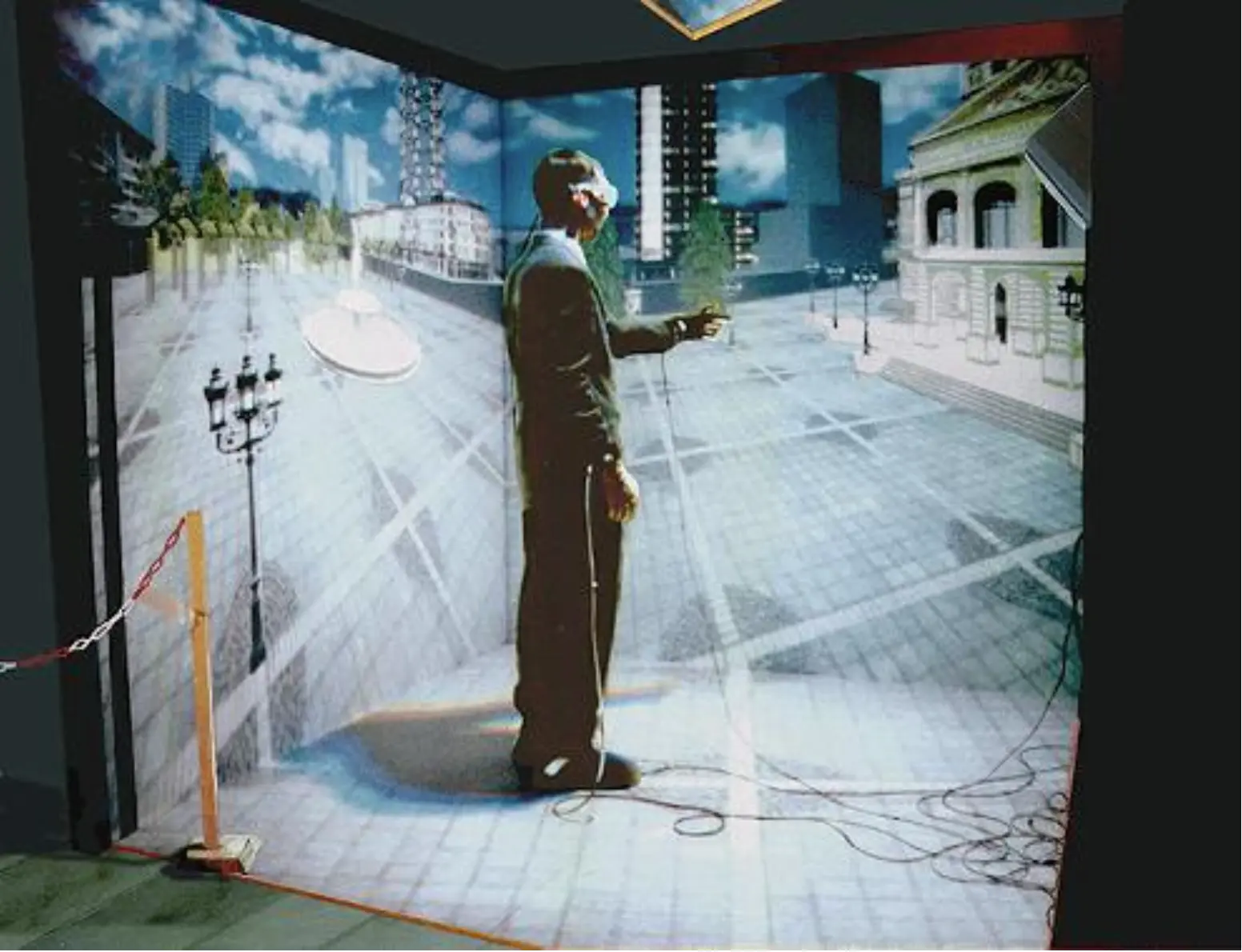

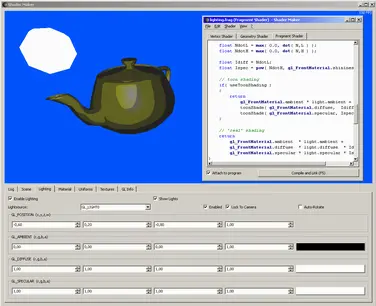

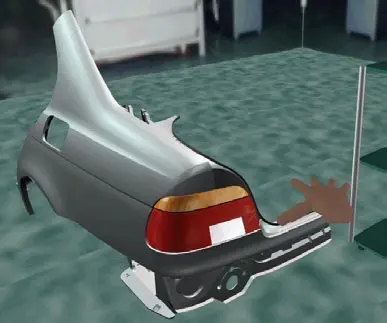

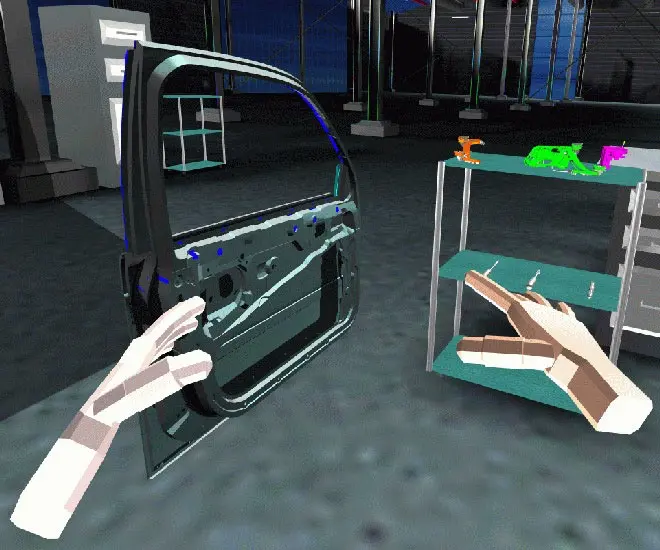

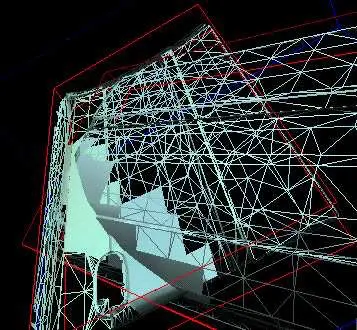

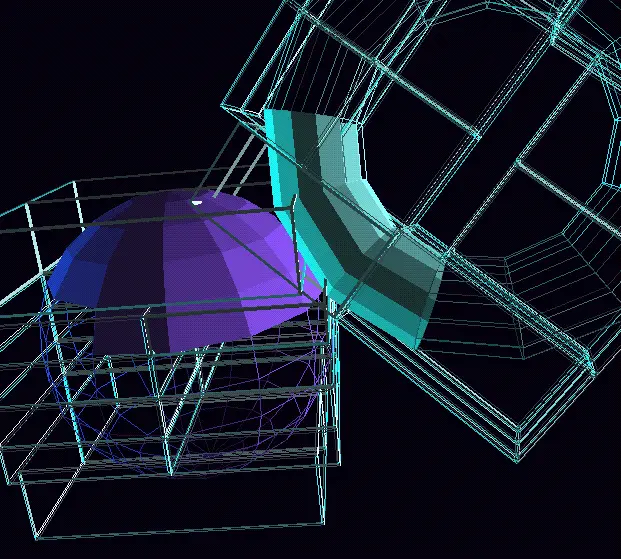

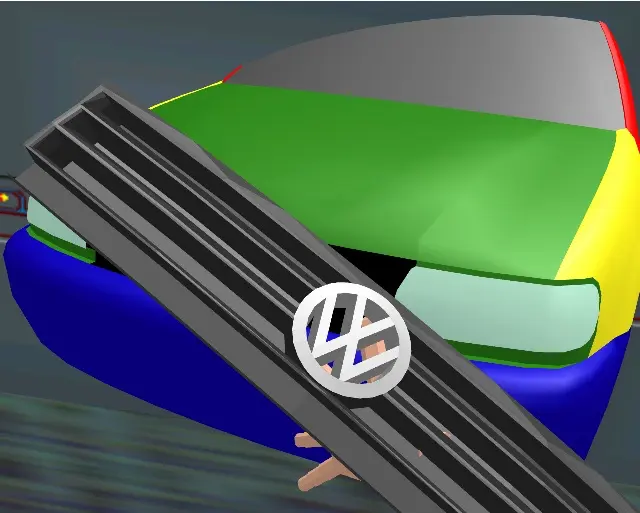

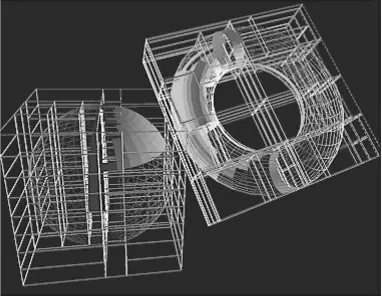

VR Research at Fraunhofer IGD, Darmstadt, Germany

Wolfgang Felger, Martin Göbel, Dirk Reiners, Gabriel Zachmann

In this article, we present and describe some of the developments of Virtual Reality (VR) at the Fraunhofer Institute for Computer Graphics in Darmstadt, Germany, from 1991 until 2000, more precisely in its department for visualization and simulation (A4), later renamed the department for visualization and virtual reality.

Published in:

IEEE VR conference workshops, 2024.

Files:

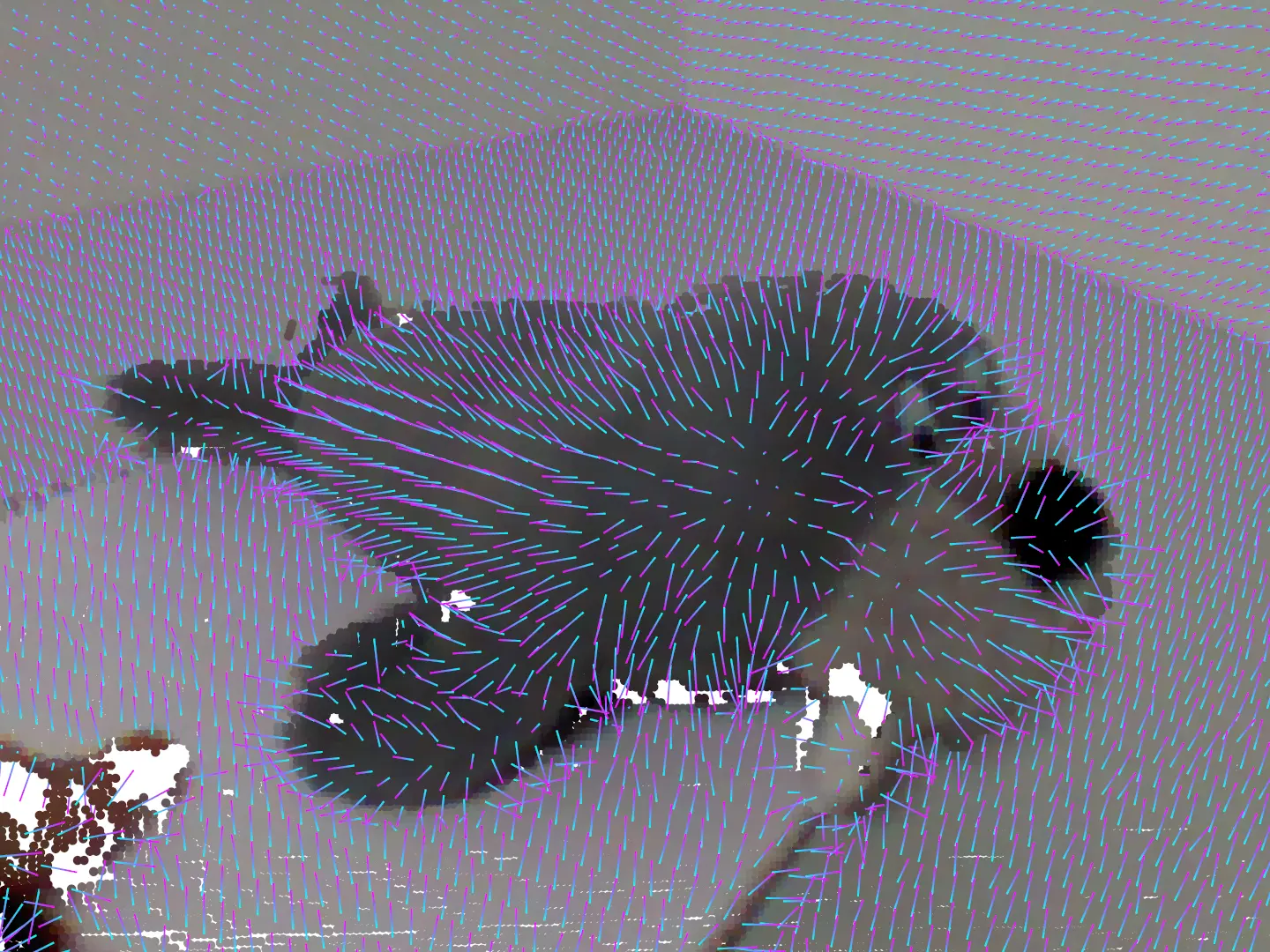

Effects of Markers in Training Datasets on the Accuracy of 6D Pose Estimation

Janis Rosskamp, Rene Weller, and Gabriel Zachmann

Collecting training data for pose estimation methods on images is a time-consuming task and usually involves some kind of manual labeling of the 6D pose of objects. This time could be reduced considerably by using marker-based tracking that would allow for automatic labeling of training images. However, images containing markers may reduce the accuracy of pose estimation due to a bias introduced by the markers. In this paper, we analyze the influence of markers in training images on pose estimation accuracy. We investigate the accuracy of estimated poses for three different cases: i) training on images with markers, ii) removing markers by inpainting, and iii) augmenting the dataset with randomly generated markers to reduce spatial learning of marker features. Our results demonstrate that utilizing marker-based techniques is an effective strategy for collecting large amounts of ground truth data for pose prediction. Moreover, our findings suggest that the use of inpainting techniques does not reduce prediction accuracy. Additionally, we investigate the effect of labeling inaccuracies in training data on prediction accuracy. We show that the precise ground truth data obtained through marker tracking is superior to markerless datasets if labeling errors of the 6D ground truth exist.

Published in:

Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), 2024, 4457-4466.

Files:

Links:

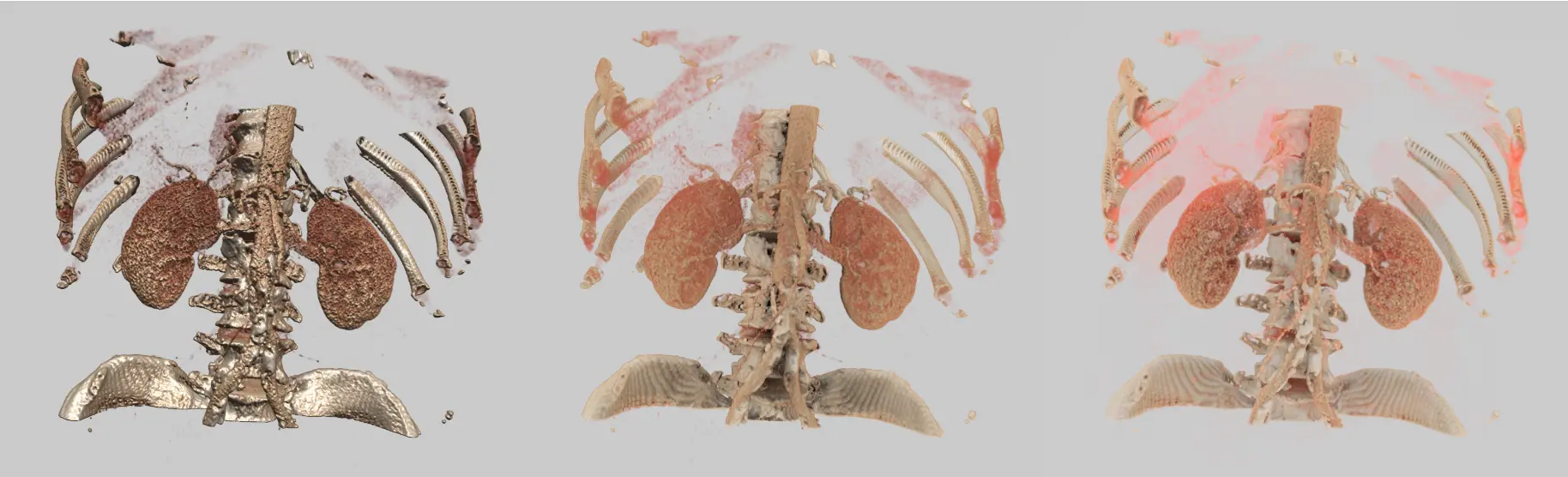

A Clinical User Study Investigating the Benefits of Adaptive Volumetric Illumination Sampling

Valentin Kraft, Christian Schumann, Daniela Salzmann, Dirk Weyhe, Gabriel Zachmann, and Andrea Schenk

Accurate and fast understanding of the patient’s anatomy is crucial in surgical decision making and particularly important in visceral surgery. Sophisticated visualization techniques such as 3D Volume Rendering can aid the surgeon and potentially lead to a benefit for the patient. Recently, we proposed a novel volume rendering technique called Adaptive Volumetric Illumination Sampling (AVIS) that can generate realistic lighting in real-time, even for high resolution images and volumes but without introducing additional image noise. In order to evaluate this new technique, we conducted a randomized, three-period crossover study comparing AVIS to conventional Direct Volume Rendering (DVR) and Path Tracing (PT). CT datasets from 12 patients were evaluated by 10 visceral surgeons who were either senior physicians or experienced specialists. The time needed for answering clinically relevant questions as well as the correctness of the answers were analyzed for each visualization technique. In addition to that, the perceived workload during these tasks was assessed for each technique, respectively. The results of the study indicate that AVIS has an advantage in terms of both time efficiency and most aspects of the perceived workload, while the average correctness of the given answers was very similar for all three methods. In contrast to that, Path Tracing seems to show particularly high values for mental demand and frustration. We plan to repeat a similar study with a larger participant group to consolidate the results.

Published in:

IEEE Transactions on Visualization and Computer Graphics, 2024, 1-8.

Files:

Optimizing the Illumination of a Surgical Site in New Autonomous Module-based Surgical Lighting Systems

Andre Mühlenbrock, René Weller, Gabriel Zachmann

Good illumination of the surgical site is crucial for the success of a surgery—yet current, typical surgical lighting systems have significant shortcomings, e.g. with regard to shadowing and ease of handling. To address these shortcomings, new lighting systems for operating rooms have recently been developed, consisting of a variety of swiveling light modules that are mounted on the ceiling and controlled automatically. For such a new type of lighting system, we present a new optimization pipeline that maintains the brightness at the surgical site as constant as possible over time and minimizes shadows by using depth sensors. Furthermore, by performing simulations on point cloud recordings of nine real abdominal surgeries, we demonstrate that our optimization pipeline is capable of effectively preventing shadows cast by bodies and heads of the OR personnel.

Published in:

Medical Imaging and Computer-Aided Diagnosis (MICAD 2022), edited by Ruidan Su, Yudong Zhang, Han Liu, and Alejandro F Frangi, 293–303. Singapore: Springer Nature Singapore, 2023.

Files:

Virtual Reality and Mixed Reality - 20th EuroXR International Conference, EuroXR 2023

Gabriel Zachmann, Krzysztof Walczak, Omar A. Niamut, Kyle Johnsen, Wolfgang Stuerzlinger, Mariano Alcañiz-Raya, Greg Welch, Patrick Bourdot

20th EuroXR International Conference, EuroXR 2023, Rotterdam, The Netherlands, November 29 – December 1, 2023, Proceedings

This book constitutes the refereed proceedings of the 20th International Conference on Virtual Reality and Mixed Reality, EuroXR 2023, held in Rotterdam, the Netherlands, during November 29-December 1, 2023.

The 14 full papers presented together with 2 short papers were carefully reviewed and selected from 42 submissions.

The papers are grouped into the following topics: Interaction in Virtual Reality; Designing XR Experiences; and Human Factors in VR: Performance, Acceptance, and Design.

Published in:

20th EuroXR International Conference, EuroXR 2023, Rotterdam, The Netherlands, November 29 – December 1, 2023, Proceedings

Collaborative VR Anatomy Atlas - Investigating Multi-User Anatomy Learning

Haya Almaree, Roland Fischer, Rene Weller, Verena Uslar, Dirk Weyhe, Gabriel Zachmann

In medical education, anatomy is typically taught through lectures, cadaver dissection, and replicas. Advances in VR technology have facilitated the development of specialized VR tools for teaching, training, and other tasks. They can provide highly interactive and engaging learning environments where students can immersively and repeatedly inspect and interact with virtual 3D anatomical structures. Moreover, multi-user VR environments can be employed for collaborative learning, which may enhance the learning experience. Concrete applications are still rare, though, and the effect of collaborative learning in VR has not been adequately explored yet. Therefore, we conducted a user study with n = 33 participants to evaluate the effectiveness of virtual collaboration using the example of anatomy learning (and compared it to individual learning). For our study, we developed a UE4-based multi-user VR anatomy learning application. Our results show that our VR Anatomy Atlas provides an engaging learning experience and is very effective for anatomy learning, individually as well as collaboratively. However, interestingly, we could not find significant advantages for collaborative learning regarding learning effectiveness or motivation, even though the multi-user group spent more time in the learning environment. Although rather high for the single-user condition, the usability tended to be lower for the multi-user group. This may be due to the more complex environment and a higher cognitive load. Thus, more research in collaborative VR for anatomy education is needed to investigate if and how it can be employed more effectively.

Published in:

Virtual Reality and Mixed Reality (EuroXR 2023), Rotterdam, The Netherlands, November 29 - December 1, 2023 (Best Paper Award).

Files:

Inpainting of Depth Images using Deep Neural Networks for Real-Time Applications

Roland Fischer, Janis Roßkamp, Thomas Hudcovic, Anton Schlegel, Gabriel Zachmann

Depth sensors enjoy increased popularity throughout many application domains, such as robotics (SLAM) and telepresence. However, independent of technology, the depth images inevitably suffer from defects such as holes (invalid areas) and noise. In recent years, deep learning-based color image inpainting algorithms have become very powerful. Therefore, with this work, we propose to adopt existing deep learning models to reconstruct missing areas in depth images, with the possibility of real-time applications in mind. After empirical tests with various models, we chose two promising ones to build upon: a U-Net architecture with partial convolution layers that conditions the output solely on valid pixels, and a GAN architecture that takes advantage of a patch-based discriminator. For comparison, we took a standard U-Net and LaMa. All models were trained on the publicly available NYUV2 dataset, which we augmented with synthetically generated noise/holes. Our quantitative and qualitative evaluations with two public datasets and one of our own show that LaMa most often produced the best results; however, it is also significantly slower than the others and the only one that is not real-time capable. The GAN and partial convolution-based models also produced reasonably good results. Which one was superior varied from case to case but, generally, the former performed better with small-sized holes and the latter with bigger ones. The standard U-Net model that we used as a baseline was the worst and most blurry.

Published in:

International Symposium on Visual Computing (ISVC) 2023, Lake Tahoe, NV, USA, October 16 - 18, 2023.

Files:

How Observers Perceive Teleport Visualizations in Virtual Environments

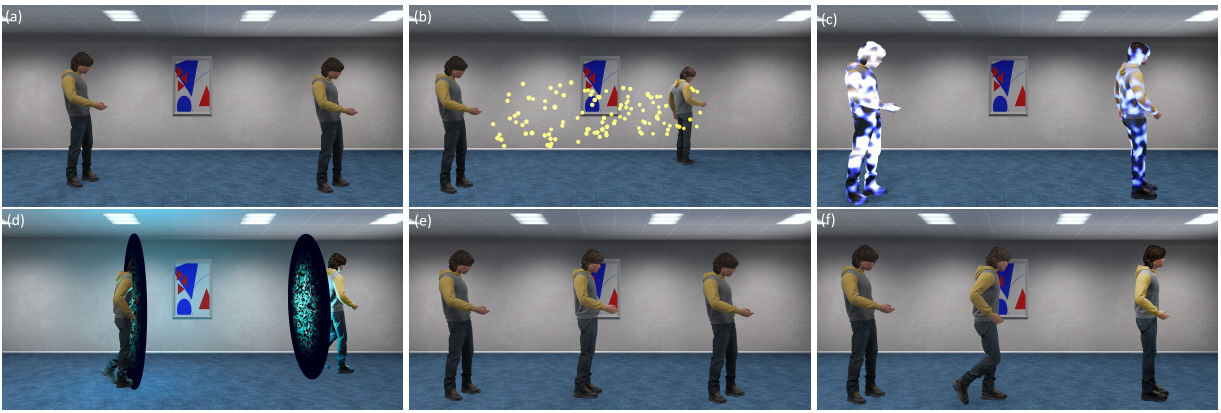

Roland Fischer, Marc Jochens, Rene Weller, Gabriel Zachmann

Multi-user VR applications have great potential to foster remote collaboration and improve or replace classical training and education. An important aspect of such applications is how participants move through the virtual environments. One of the most popular VR locomotion methods is the standard teleportation metaphor, as it is quick, easy to use and implement, and safe regarding cybersickness. However, it can be confusing to the other, observing, participants in a multi-user session and, therefore, reduce their presence. The reason for this is the discontinuity of the process, and, therefore, the lack of motion cues. As of yet, the question of how this teleport metaphor could be suitably visualized for observers has not received very much attention. Therefore, we implemented several continuous and discontinuous 3D visualizations for the teleport metaphor and conducted a user study for evaluation. Specifically, we investigated them regarding confusion, spatial awareness, and spatial and social presence. Regarding presence, we did find significant advantages for one of the visualizations. Moreover, some visualizations significantly reduced confusion. Furthermore, multiple continuous visualizations ranked significantly higher regarding spatial awareness than the discontinuous ones. This finding is also backed up by the users' tracking data we collected during the experiments. Lastly, the classic teleport metaphor was perceived as less clear and rather unpopular compared with our visualizations.

Published in:

ACM Symposium on Spatial User Interaction (SUI) 2023, Sydney, Australia, October 13 - 15, 2023.

Files:

Novel Algorithms and Methods for Immersive Telepresence and Collaborative VR

Roland Fischer

This thesis is concerned with collaborative VR and tackles many of its challenges. A core contribution is the design, development, and evaluation of a low-latency, real-time point cloud streaming and rendering pipeline for VR-based telepresence that enables high-quality live-captured 3D scenes and avatars in shared virtual environments. Additionally, we combined a custom direct volume renderer with an Unreal Engine 4-based collaborative VR application for immersive medical CT data visualization/inspection. We also propose novel methods for RGB-D/depth image enhancement and compression and investigate the observer’s perception of new 3D visualizations for the potentially confusing teleport locomotion metaphor in multi-user environments. Moreover, we designed and developed multiple methods to efficiently and procedurally generate realistic-looking terrains for VR applications that are capable of creating plausible biome distributions as well as natural water bodies. Lastly, we conducted a study and investigated the effects of collaborative anatomy learning in VR. With the ensemble of contributions presented in this thesis, including not only novel algorithms and methods but also studies and comprehensive evaluations, we were able to improve collaborative VR on many fronts and provide critical insights into various research topics.

Published in:

Staats- und Universitätsbibliothek Bremen, October 2023.

Files:

The effect of 3D stereopsis and hand-tool alignment on learning effectiveness and skill transfer of a VR-based simulator for dental training

Maximilian Kaluschke, Myat Su Yin, Peter Haddawy, Siriwan Suebnukarn, Gabriel Zachmann

Recent years have seen the proliferation of VR-based dental simulators using a wide variety of different VR configurations with varying degrees of realism. Important aspects distinguishing VR hardware configurations are 3D stereoscopic rendering and visual alignment of the user’s hands with the virtual tools. New dental simulators are often evaluated without analysing the impact of these simulation aspects. In this paper, we seek to determine the impact of 3D stereoscopic rendering and of hand-tool alignment on the teaching effectiveness and skill assessment accuracy of a VR dental simulator. We developed a bimanual simulator using an HMD and two haptic devices that provides an immersive environment with both 3D stereoscopic rendering and hand-tool alignment. We then independently controlled for each of the two aspects of the simulation. We trained four groups of students in root canal access opening using the simulator and measured the virtual and real learning gains. We quantified the real learning gains by pre- and post-testing using realistic plastic teeth and the virtual learning gains by scoring the training outcomes inside the simulator. We developed a scoring metric to automatically score the training outcomes that strongly correlates with experts’ scoring of those outcomes. We found that hand-tool alignment has a positive impact on virtual and real learning gains, and improves the accuracy of skill assessment. We found that stereoscopic 3D had a negative impact on virtual and real learning gains, however it improves the accuracy of skill assessment. This finding is counter-intuitive, and we found eye-tooth distance to be a confounding variable of stereoscopic 3D, as it was significantly lower for the monoscopic 3D condition and negatively correlates with real learning gain. The results of our study provide valuable information for the future design of dental simulators, as well as simulators for other high-precision psycho-motor tasks.

Published in:

PLoS ONE 18(10): e0291389, October 4, 2023

Files:

Links:

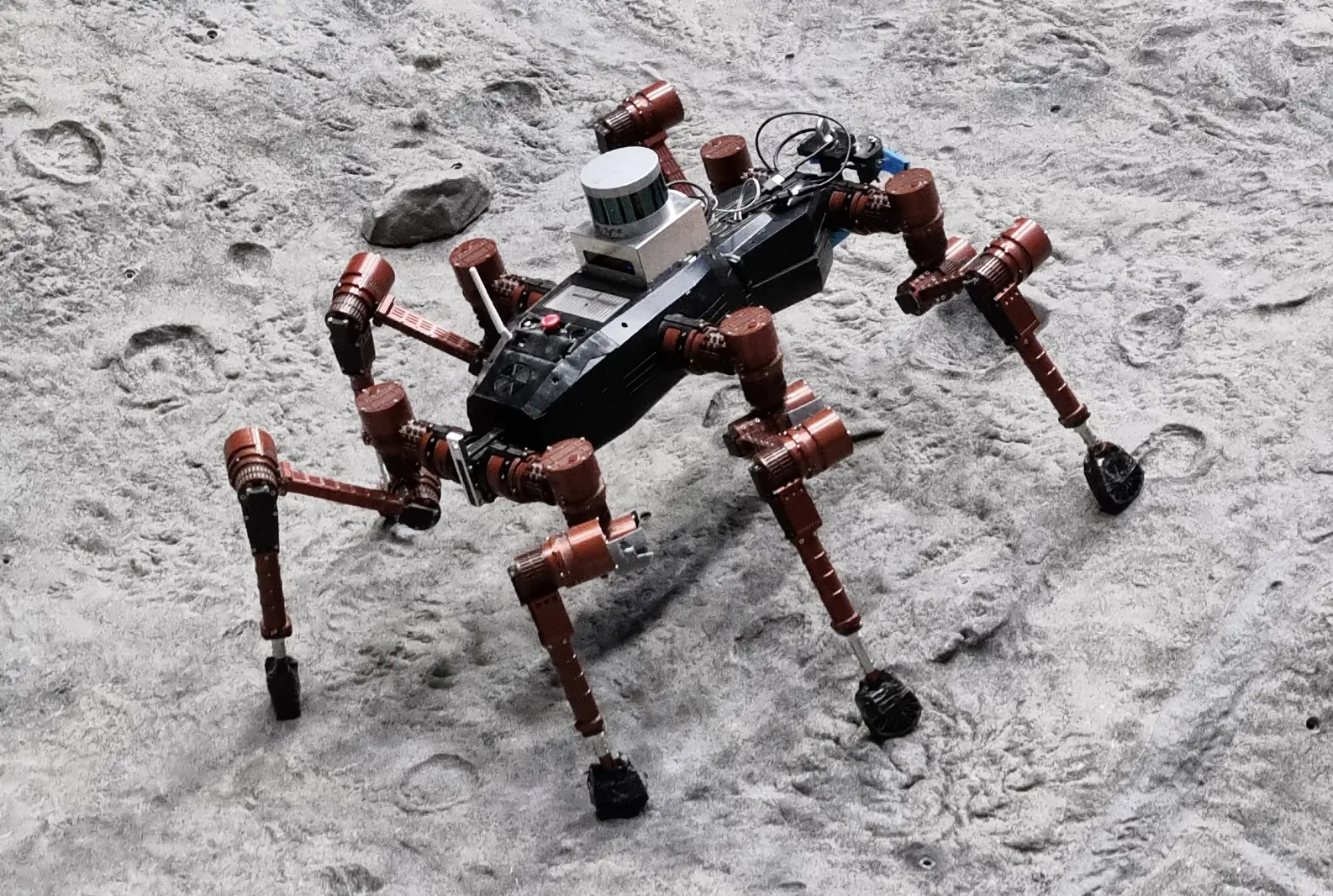

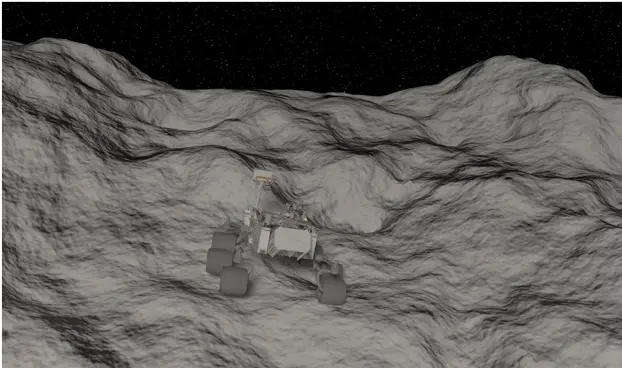

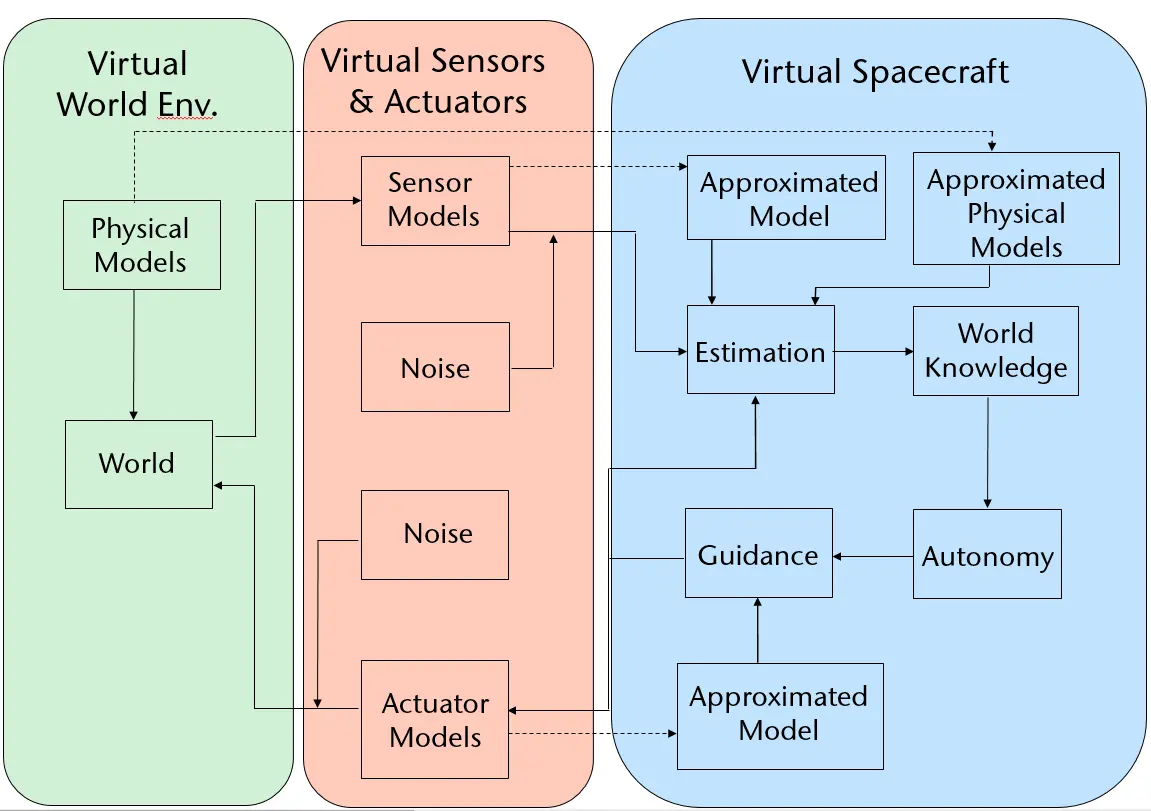

VaMEx3: Autonomously exploring Mars with a heterogeneous robot swarm

Leon Danter, Joachim Clemens, Andreas Serov, Anne Schattel, Michael Schleiss, Cedric Liman, Mario Gäbel, Andre Mühlenbrock, and Gabriel Zachmann

In the search for past or present extraterrestrial life or a potential habitat for terrestrial life forms, our neighboring planet Mars is the main focus. The research initiative "VaMEx - Valles Marineris Explorer", initiated by the German Space Agency at DLR, has the main objective to explore the Valles Marineris on Mars. This rift valley system is particularly exciting because the past and current environmental conditions within the canyon, topographically about 10 km beneath the global Martian surface, show an atmospheric pressure above the triple point of water - thus, physically allowing the presence of liquid water at temperatures above the melting point. The VaMEx3 project phase within this initiative aims to establish a robust and field-tested concept for a potential future space mission to the Valles Marineris performed by a heterogeneous autonomous robot swarm consisting of moving, running, and flying systems. These systems, their sensor suites, and their software stack will be enhanced and validated to enable the swarm to autonomously explore regions of interest. This task includes multi-robot multi-sensor SLAM, autonomous task distribution, and robust and fault-tolerant navigation with sensors that enable a redundant pose solution on the Martian surface.

Published in:

ASTRA 2023, Leiden, Netherlands, October 18 - 20, 2023

Files:

Perceived Realism of Haptic Rendering Methods for Bimanual High Force Tasks: Original and Replication Study

Mario Lorenz, Andrea Hoffmann, Maximilian Kaluschke, Taha Ziadeh, Nina Pillen, Magdalena Kusserow, Jérôme Perret, Sebastian Knopp, André Dettmann, Philipp Klimant, Gabriel Zachmann, Angelika C. Bullinger

Realistic haptic feedback is key for virtual reality applications to transition from solely procedural training to motor-skill training. Currently, haptic feedback is mostly used in low-force medical procedures in dentistry, laparoscopy, arthroscopy, and the like. However, joint replacement procedures at the hip, knee, or shoulder require the simulation of high forces in order to enable motor-skill training. In this work, a prototype of a haptic device capable of delivering double the force (35 N to 70 N) of state-of-the-art devices is used to examine the four most common haptic rendering methods (penalty-, impulse-, constraint-, and rigid body-based haptic rendering) in three bimanual tasks (contact, rotation, uniaxial transition with increasing forces from 30 to 60 N) regarding their capabilities to provide realistic haptic feedback. In order to provide baseline data, a worst-case scenario of a steel/steel interaction was chosen. The participants needed to compare a real steel/steel interaction with a simulated one. In order to substantiate our results, we replicated the study using the same study protocol and experimental setup at another laboratory. The original study and the replication study deliver almost identical results. We found that certain of the investigated haptic rendering methods are likely able to deliver a realistic sensation for bone-cartilage/steel contact but not for steel/steel contact. Whilst no clear best haptic rendering method emerged, penalty-based haptic rendering performed worst. For simulating high-force bimanual tasks, we recommend a mixed implementation approach that uses impulse-based haptic rendering for simulating contacts and combines it with constraint- or rigid body-based haptic rendering for rotational and translational movements.

Published in:

Nature Scientific Reports, volume 13, July 11, 2023

Files:

Links:

Versatile Immersive Virtual and Augmented Tangible OR – Using VR, AR and Tangibles to Support Surgical Practice

Anke Verena Reinschluessel, Thomas Muender, Roland Fischer, Valentin Kraft, Verena Nicole Uslar, Dirk Weyhe, Andrea Schenk, Gabriel Zachmann, Tanja Döring, Rainer Malaka

Immersive technologies such as virtual reality (VR) and augmented reality (AR), in combination with advanced image segmentation and visualization, have considerable potential to improve and support a surgeon’s work. We demonstrate a solution to help surgeons plan and perform surgeries and educate future medical staff using VR, AR, and tangibles. A VR planning tool improves spatial understanding of an individual’s anatomy, a tangible organ model allows for intuitive interaction, and AR gives contactless access to medical images in the operating room. Additionally, we present improvements regarding point cloud representations to provide detailed visual information to a remote expert and about the remote expert. Therefore, we give an exemplary setup showing how recent interaction techniques and modalities benefit an area that can positively change the life of patients.

Published in:

CHI EA '23, Extended Abstracts of the 2023 CHI Conference on Human Factors in Computing Systems, 1 - 5. Hamburg, Germany, April 23 - 28, 2023.

Files:

Patent: Illuminating device for illuminating a surgical wound

Andre Mühlenbrock, Peter Kohrs, Adrian Fox, Gabriel Zachmann

The invention is a system for the illumination of surgical wounds, which consists of a plurality of small swiveling light units and a control system, which controls the light units so that the surgical wound is optimally illuminated. The patent essentially emerged from the SmartOT project.

Published in:

Deutsches Patent- und Markenamt, Deutschland, April 13, 2023. United States Patent Application Publication, USA, November 21, 2024

Files:

Patent

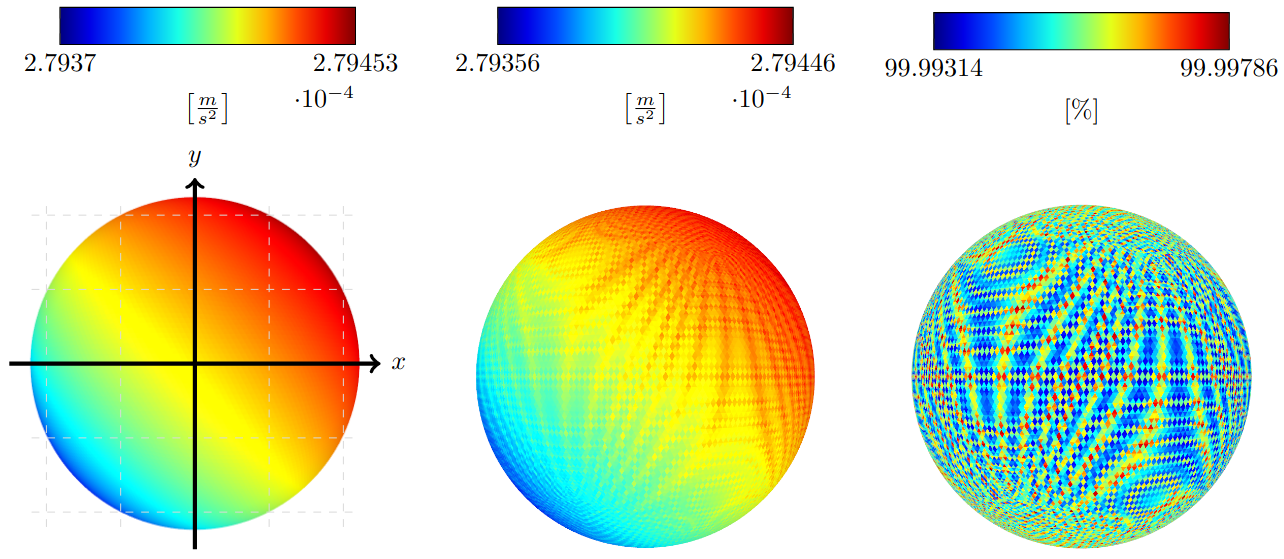

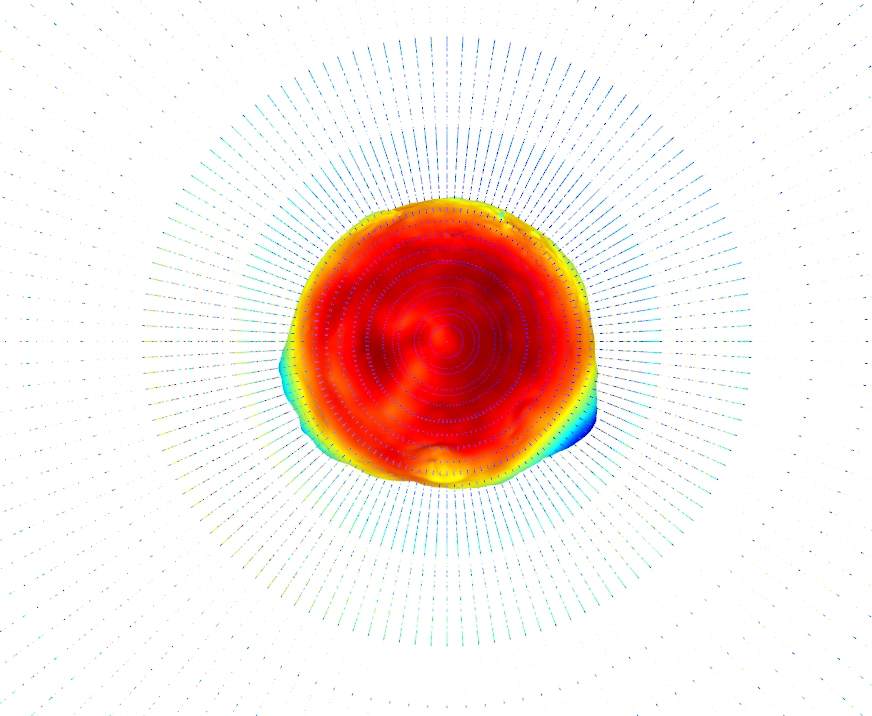

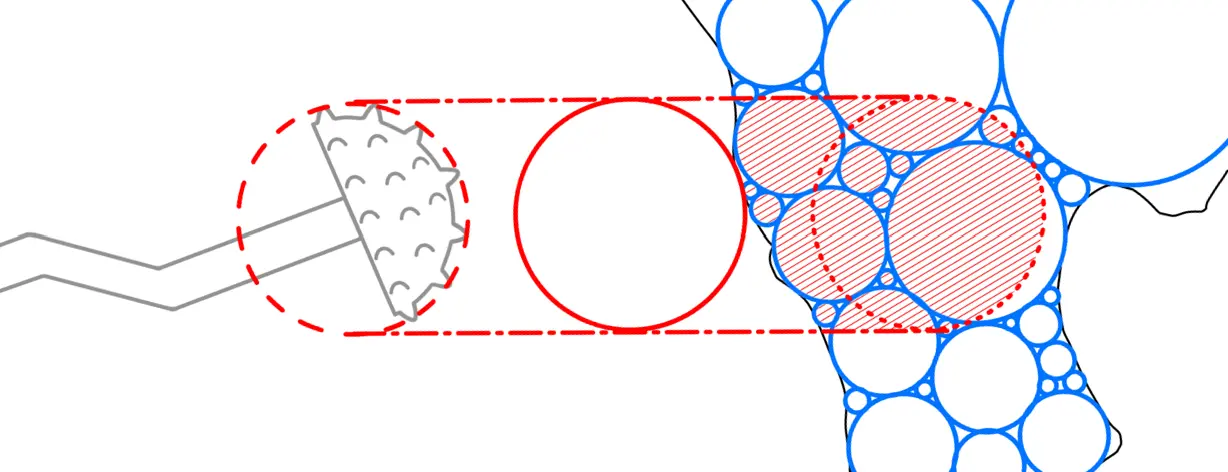

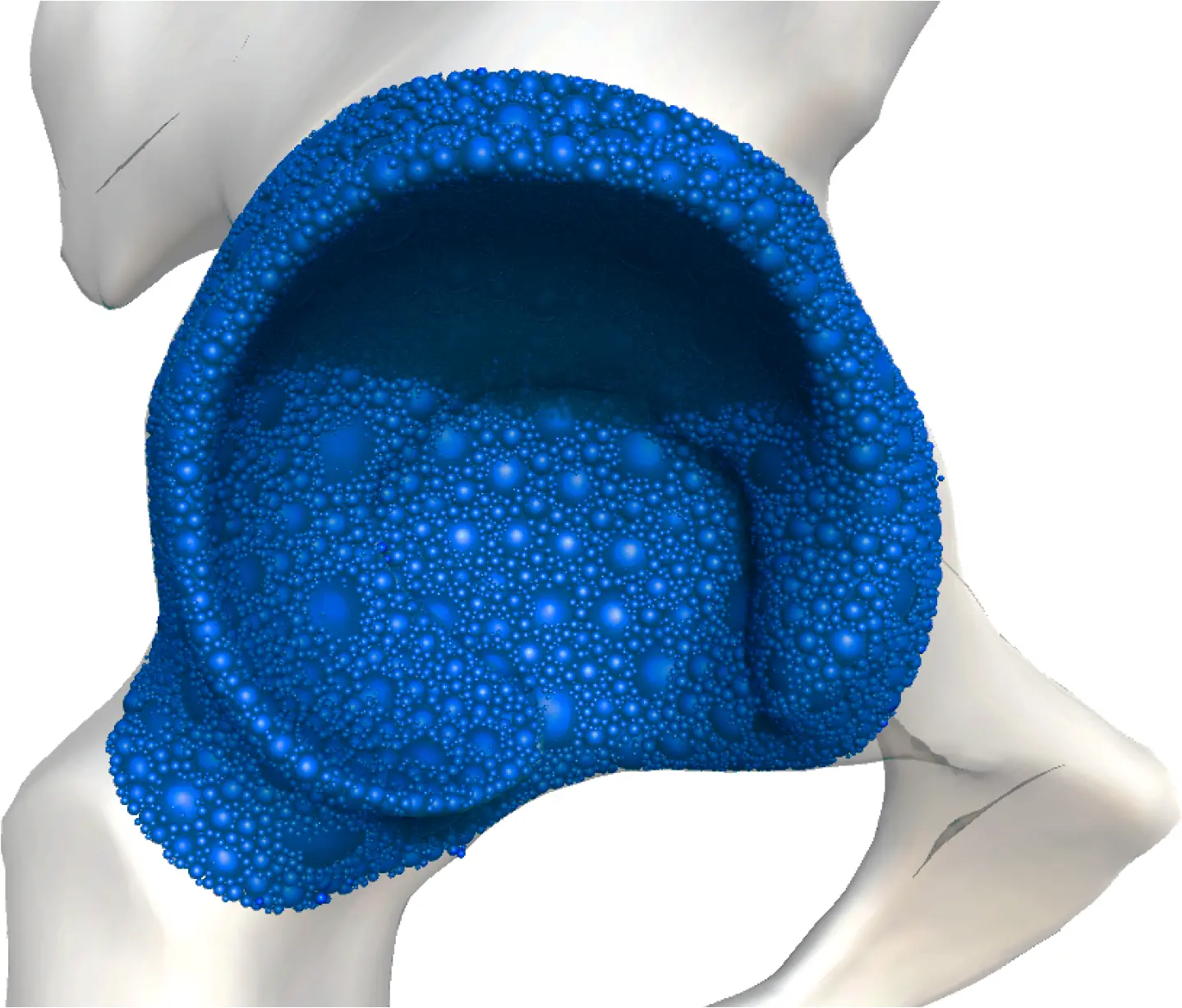

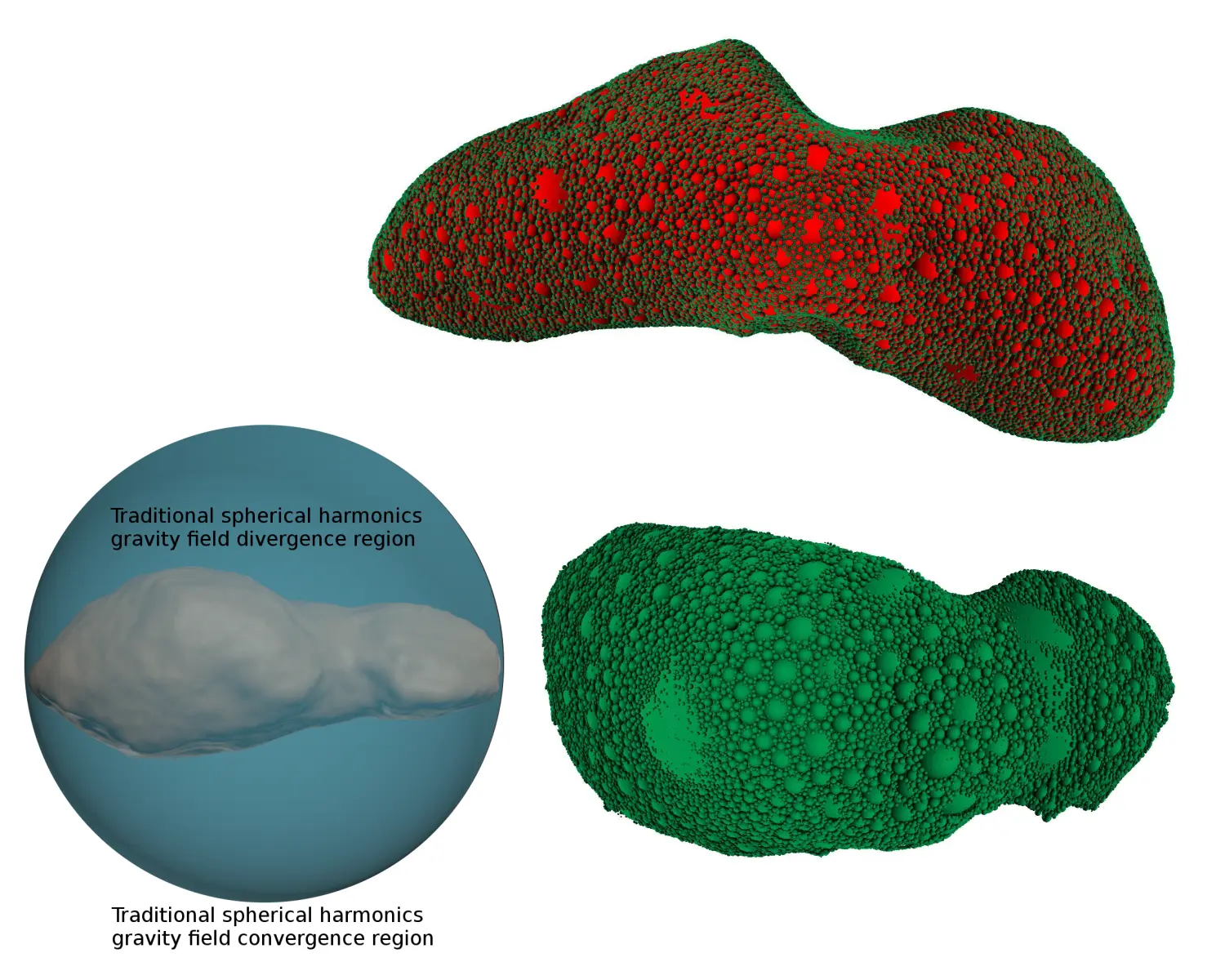

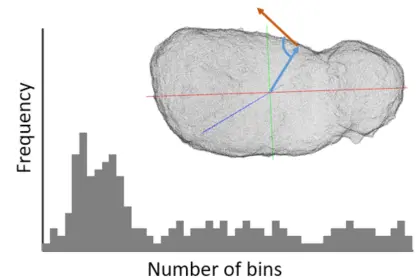

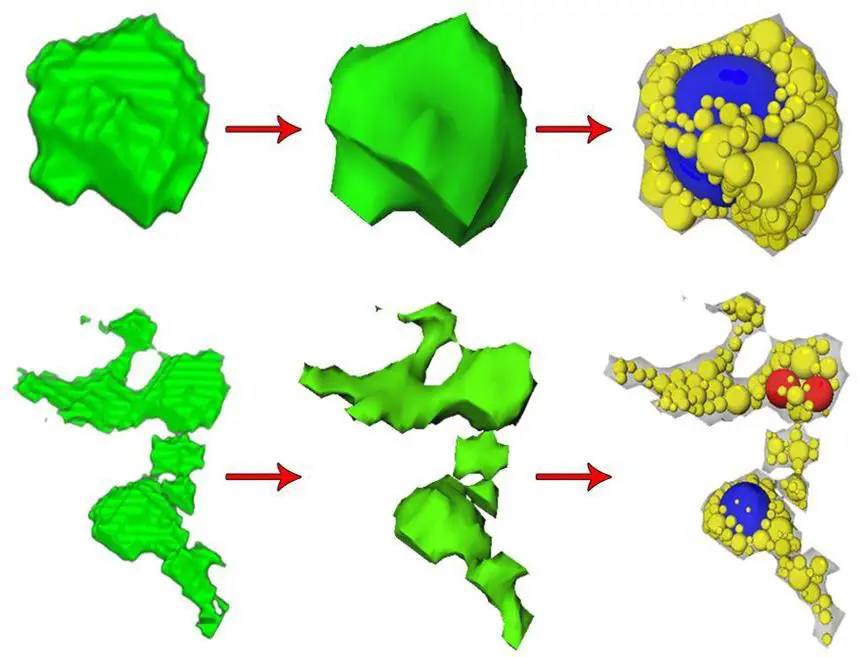

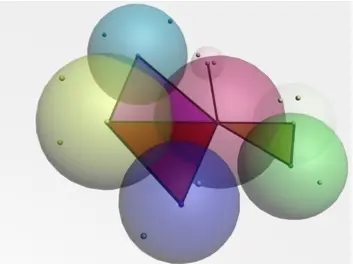

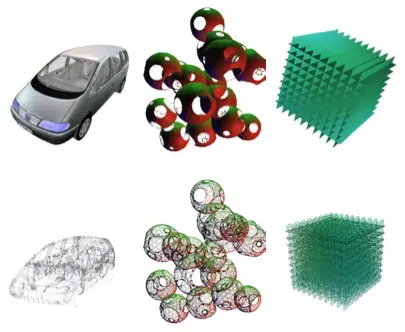

Adaptive Polydisperse Sphere Packings for High Accuracy Computations of the Gravitational Field

Hermann Meißenhelter, René Weller, Matthias Noeker, Tom Andert, Gabriel Zachmann

We present a new method to model the mass of celestial bodies based on adaptive polydisperse sphere packings. Using polydisperse spheres in the mascon model has been shown to deliver a very good approximation of the mass distribution of celestial bodies while allowing fast computations of the gravitational field. However, small voids between the spheres reduce the accuracy, especially close to the surface. Hence, the idea of our adaptive sphere packing is to place more spheres close to the surface instead of filling negligibly small gaps deeper inside the body. Although this reduces the packing density, we achieve greater accuracy close to the surface. For the adaptive sphere packing, we propose a mass assignment algorithm that uniformly samples the volume of the body. Additionally, we present a method to further optimize the mass distribution of the spheres based on least squares optimization. The sphere packing and the gravitational acceleration remain computable entirely on the GPU (Graphics Processing Unit).
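
As a rough illustration of the mascon idea mentioned in the abstract, the sketch below sums the contributions of a set of spheres to obtain the gravitational acceleration at an exterior point. It is not the authors' GPU implementation and does not cover the adaptive packing or the mass assignment; the function name `mascon_acceleration` and the data layout are assumptions made for this example. It relies only on the standard result that a homogeneous sphere attracts exterior points like a point mass at its center.

```python
import math

G = 6.67430e-11  # gravitational constant in m^3 kg^-1 s^-2


def mascon_acceleration(point, spheres):
    """Gravitational acceleration (m/s^2) at `point` from a set of spheres.

    `spheres` is an iterable of (center, mass) pairs with centers in metres
    and masses in kg. `point` must lie outside every sphere, so radii are
    not needed here.
    """
    ax = ay = az = 0.0
    for (cx, cy, cz), mass in spheres:
        # Vector from the evaluation point to the sphere center.
        dx, dy, dz = cx - point[0], cy - point[1], cz - point[2]
        r2 = dx * dx + dy * dy + dz * dz
        inv_r3 = 1.0 / (r2 * math.sqrt(r2))
        # Point-mass attraction G * m * d / |d|^3, directed toward the center.
        ax += G * mass * dx * inv_r3
        ay += G * mass * dy * inv_r3
        az += G * mass * dz * inv_r3
    return (ax, ay, az)


# Hypothetical body made of two equal spheres placed symmetrically about the y-axis.
body = [((-1.0, 0.0, 0.0), 5.0e10), ((1.0, 0.0, 0.0), 5.0e10)]
acc = mascon_acceleration((0.0, 5.0, 0.0), body)
```

Each sphere is independent of the others, so the sum maps naturally onto one thread per sphere or per evaluation point on a GPU.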

Published in:

IEEE Aerospace Conference (AeroConf) 2023, Big Sky, Montana, USA, March 4 - 11, 2023.

Files:

A virtual reality simulation of a novel way to illuminate the surgical field – A feasibility study on the use of automated lighting systems in the operating theatre

Timur Cetin, Andre Mühlenbrock, Gabriel Zachmann, Verena Weber, Dirk Weyhe and Verena Uslar

Introduction: Surgical lighting systems have to be re-adjusted manually during surgery by the medical personnel. While some authors suggest that interaction with a surgical lighting system in the operating room might be a distractor, others support the idea that manual interaction with the surgical lighting system is a hygiene problem as pathogens might be present on the handle. In any case, it seems desirable to develop a novel approach to surgical lighting that minimizes the need for manual interaction during a surgical procedure.

Methods: We investigated the effect of manual interaction with a classical surgical lighting system and simulated a proposed novel design of a surgical lighting system in a virtual reality environment with respect to performance accuracy as well as cognitive load (measured by electroencephalographic recordings).

Results: We found that manual interaction with the surgical lights has no effect on the quality of performance, but comes at the price of higher mental effort, possibly leading to faster fatigue of the medical personnel in the long run.

Discussion: Our proposed novel surgical lighting system negates the need for manual interaction and leads to a performance quality comparable to the classical lighting system, yet with less mental load for the surgical personnel.

Published in:

Frontiers in Surgery, March 02, 2023.

Files:

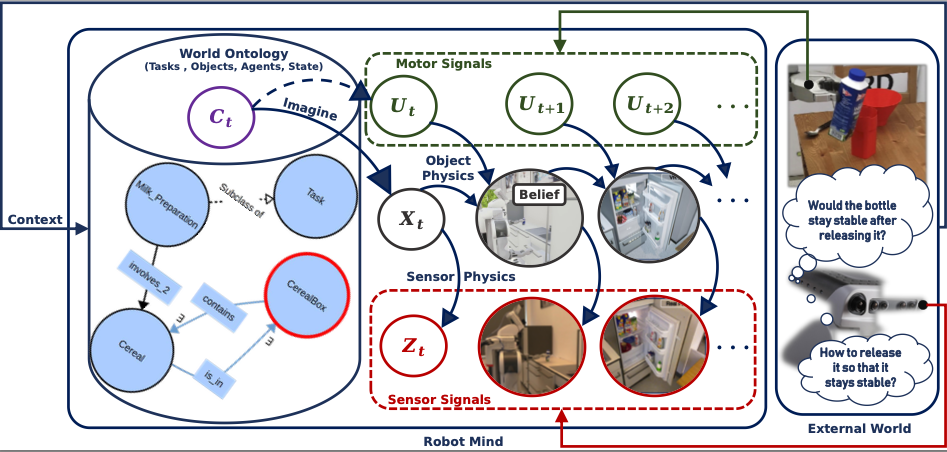

NaivPhys4RP - Towards Human-like Robot Perception "Physical Reasoning based on Embodied Probabilistic Simulation"

Franklin Kenghagho K., Michael Neumann, Patrick Mania, Toni Tan, Feroz Siddiky A., René Weller, Gabriel Zachmann and Michael Beetz

Perception in complex environments, especially dynamic and human-centered ones, goes beyond classical tasks such as classification (usually known as the what- and where-object-questions from sensor data) and poses at least three challenges that are missed by most and not properly addressed by some current robot perception systems. Note that sensors are extrinsically (e.g., clutter, noise due to embodiedness, delayed processing) and intrinsically (e.g., depth of transparent objects) very limited, resulting in missing or high-entropy data that are difficult to compress during learning and difficult to explain or costly to process during interpretation. (a) Therefore, the perception system should rather reason about the causes that produce such effects (how/why-happen-questions). (b) It should reason about the consequences (effects) of agent-object and object-object interactions in order to anticipate (what-happen-questions) the (e.g., undesired) world state and then enable successful action on time. (c) Finally, it should explain its outputs for safety (meta why/how-happen-questions). This paper introduces a novel white-box and causal generative model of robot perception (NaivPhys4RP) that emulates human perception by capturing the recently established Big Five aspects (FPCIU) of human commonsense, which invisibly (dark) drive our observational data and allow us to overcome the above problems. However, NaivPhys4RP particularly focuses on the aspect of physics, which ultimately and constructively determines the world state.

Published in:

2022 IEEE-RAS 21st International Conference on Humanoid Robots (Humanoids), Ginowan, Okinawa, Japan, November 28 - 30, 2022.

Files:

The OPA3L System and Testconcept for Urban Autonomous Driving

Andreas Folkers, Constantin Wellhausen, Matthias Rick, Xibo Li, Lennart Evers, Verena Schwarting, Joachim Clemens, Philipp Dittmann, Mahmood Shubbak, Tom Bustert, Gabriel Zachmann, Kerstin Schill, Christof Büskens

The development of autonomous vehicles for urban driving is widely considered a challenging task, as it requires intensive interdisciplinary expertise. This article gives an overview of the research project OPA3L (Optimally Assisted, Highly Automated, Autonomous and Cooperative Vehicle Navigation and Localization). It highlights the hardware and software architecture as well as the developed methods. These comprise algorithms for localization, perception, high- and low-level decision making and path planning, as well as model predictive control. The research project contributes a cross-platform holistic approach applicable to a wide range of real-world scenarios. The developed framework is implemented and tested on a real research vehicle, miniature vehicles, and a simulation system.

Published in:

2022 IEEE 25th International Conference on Intelligent Transportation Systems (ITSC), Macau, China, October 8 - 12, 2022.

Files:

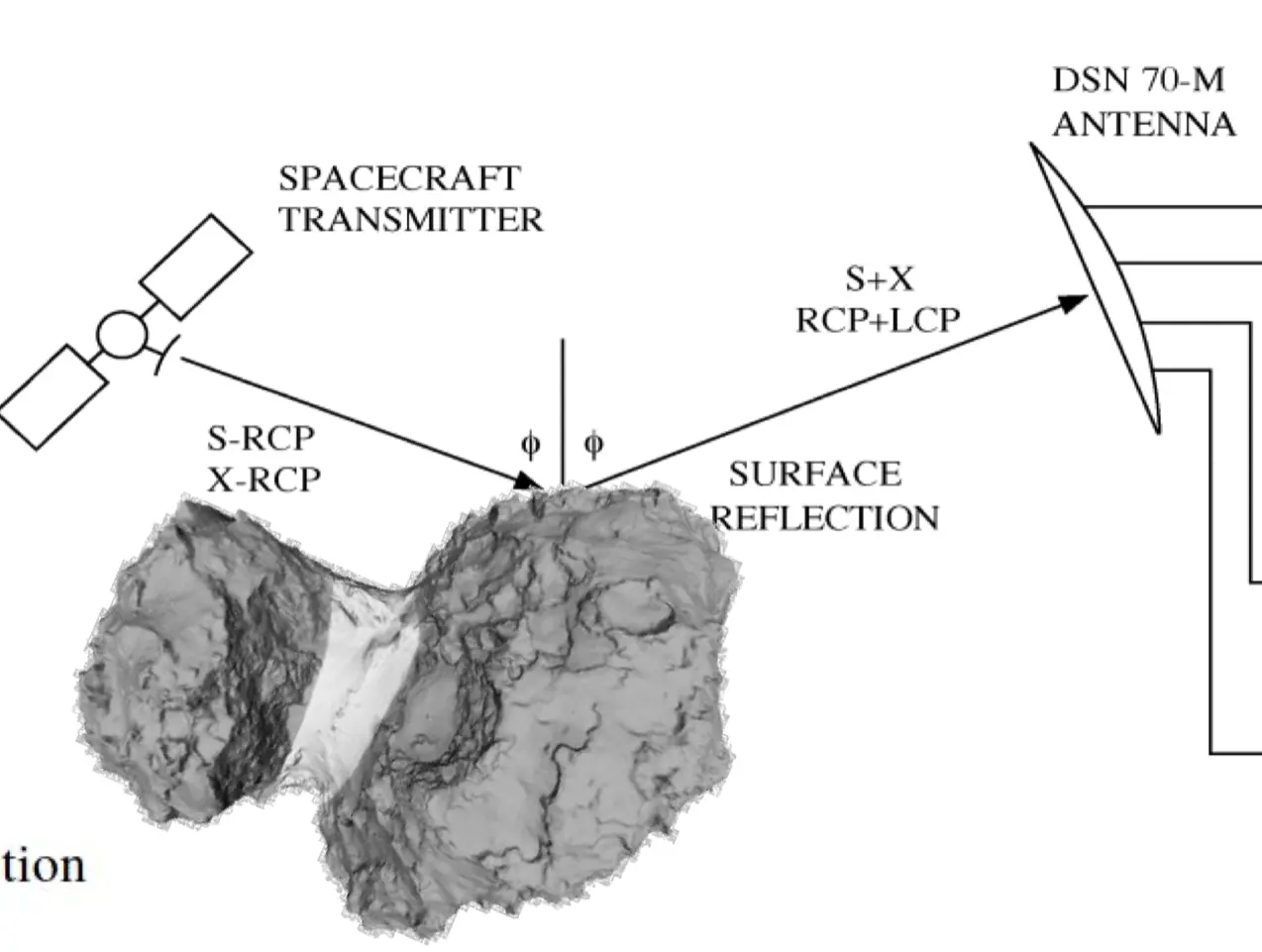

Simulation of the detectability of different surface properties with bistatic radar observations

Jonas Krumme, Thomas P. Andert, René Weller, Graciela González Peytaví, Gabriel Zachmann, Dennis Scholl, Adrian Schulz

Bistatic radar (BSR) is a well-established technology to probe surfaces of planets and also small bodies like asteroids and comets. The radio subsystem onboard the spacecraft serves as the transmitter and the ground station on Earth as the receiver of the radio signal in the bistatic radar configuration. A part of the reflected signal is scattered towards the receiver, which records both the right-hand circular polarized (RHCP) and left-hand circular polarized (LHCP) echo components. From the measurement of those, geophysical properties like surface roughness and dielectric constant can be derived. Such observations aim at extracting the radar reflectivity coefficient of the surface, which is also called the radar cross-section. This coefficient depends on the physical properties of the surface. We developed a bistatic radar simulation tool that utilizes hardware acceleration and massively-parallel programming paradigms available on modern GPUs. It is based on the Shooting and Bouncing Rays (SBR) method (sometimes also called Ray-Launching Geometrical Optics), which we have adapted for the GPU and implemented using hardware-accelerated raytracing. This provides high-performance estimation of the scattering of electromagnetic waves from surfaces, which is highly desirable since surfaces can become very large relative to the surface features that need to be resolved by the simulation method. Our method can, for example, deal with the asteroids 1 Ceres and 4 Vesta, which have mean diameters of around 974 km and 529 km, resp., and thus very large surfaces relative to the sizes of the surface features. But even smaller objects can require a large number of rays for sampling the surface with a density large enough for accurate results. In this paper, we present our new, very efficient simulation method, its application to several examples with various shapes and surface properties, and examine limits of the detectability of water ice on small bodies.

Published in:

International Astronautical Congress (IAC) 2022, Paris, France, September 18 - 22, 2022.

Files:

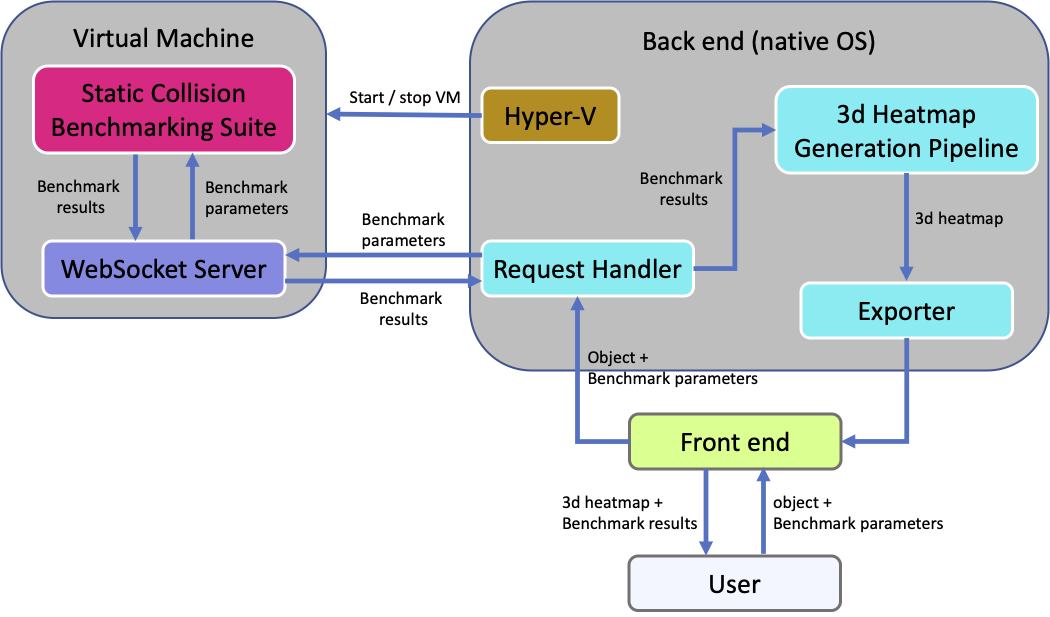

A Framework for Safe Execution of User-Uploaded Algorithms

Toni Tan, René Weller, Gabriel Zachmann

In recent years, there has been a trend toward open benchmarks that aim for reproducible and comparable benchmarking results. The best reproducibility can be achieved when performing the benchmarks in the same hardware and software environment. This can be offered as a web service. One challenge of such a web service is the integration of new algorithms into the existing benchmarking tool due to security concerns. In this paper, we present a framework that allows the safe execution of user-uploaded algorithms in such a benchmark-as-a-service web tool. To guarantee security as well as reproducibility and comparability of the service, we extend an existing system architecture to allow the execution of user-uploaded algorithms in a virtualization environment. Our results show that although execution in the virtualization environment is slightly slower, by around 3.7% to 4.7%, than in the native environment, the results are consistent across all scenarios with different algorithms, object shapes, and object complexity. Moreover, we have automated the entire process, from turning a virtual machine on and off and starting the benchmark with the intended parameters to communicating with the backend server when the benchmark has finished. Our implementation is based on Microsoft Hyper-V, which allows us to benchmark algorithms that use Single Instruction, Multiple Data (SIMD) instruction sets as well as algorithms that need access to the Graphics Processing Unit (GPU).

Published in:

ACM Web3D 2022: The 27th International Conference on 3D Web Technology, Evry-Courcouronnes, France, November 2 - 4, 2022.

Files:

Paper

Slides

Supplemental Material

Demo

Links:

Comparing Methods for Gravitational Computation: Studying the Effect of Inhomogeneities

Matthias Noeker, Hermann Meißenhelter, Tom Andert, René Weller, Özgür Karatekin, Benjamin Haser

Current and future small body missions, such as the ESA Hera mission or the JAXA MMX mission, demand good knowledge of the gravitational field of the targeted celestial bodies. This knowledge is needed not only to ensure precise spacecraft operations around the body but also for landing manoeuvres, surface (rover) operations, and science, including surface gravimetry. Different methods exist to model the gravitation of irregularly shaped, non-spherical bodies. Previous work compared three different methods, assuming a homogeneous density distribution inside the body. In this work, the comparison is continued by introducing a first inhomogeneity inside the body. For this, the same three methods, namely the polyhedral method and two different mascon methods, are compared.

Published in:

Europlanet Science Congress 2022, Granada, Spain, September 18-23, 2022, EPSC2022-562, doi.org/10.5194/epsc2022-562.

Files:

Virtual Reality and Mixed Reality - 19th EuroXR International Conference, EuroXR 2022

Gabriel Zachmann, Mariano Alcañiz Raya, Patrick Bourdot, Maud Marchal, Jeanine Stefanucci, Xubo Yang

19th EuroXR International Conference, EuroXR 2022, Stuttgart, Germany, September 14–16, 2022, Proceedings

This book constitutes the refereed proceedings of the 19th International Conference on Virtual Reality and Mixed Reality, EuroXR 2022, held in Stuttgart, Germany, in September 2022.

The 6 full and 2 short papers were carefully reviewed and selected from 37 submissions. The conference presents contributions on results and insights in Virtual Reality (VR), Augmented Reality (AR), and Mixed Reality (MR), commonly referred to under the umbrella of Extended Reality (XR), including software systems, immersive rendering technologies, 3D user interfaces, and applications.

Published in:

19th EuroXR International Conference, EuroXR 2022, Stuttgart, Germany, September 14–16, 2022, Proceedings

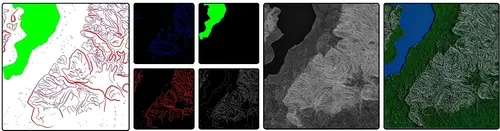

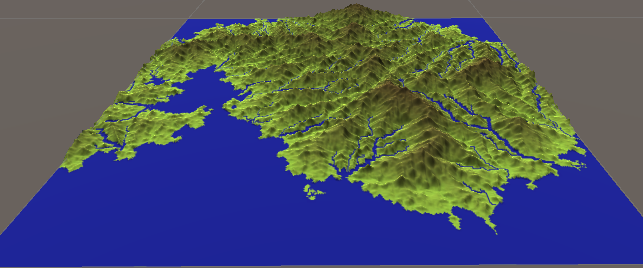

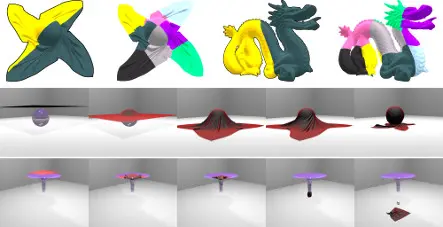

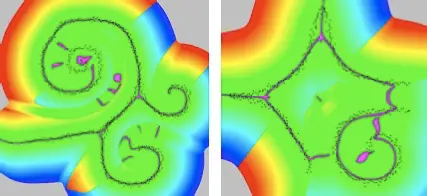

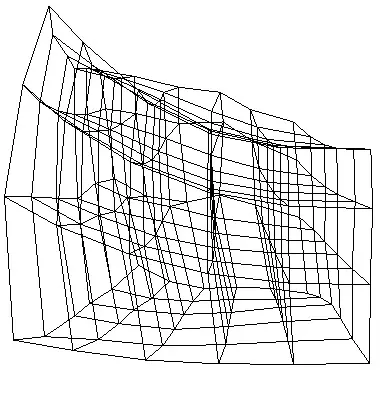

Procedural Generation of Landscapes with Water Bodies Using Artificial Drainage Basins

Roland Fischer, Judith Boeckers, Gabriel Zachmann

We propose a method for procedural terrain generation that focuses on creating huge landscapes with realistic-looking river networks and lakes. A natural-looking integration into the landscape is achieved by an approach inverse to the usual way: After authoring the initial landmass, we first generate rivers and lakes and then create the actual terrain by "growing" it, starting at the water bodies. The river networks are formed based on computed artificial drainage basins. Our pipeline approach not only enables quick iterations and direct visualization of intermediate results but also balances user control and automation. The first stages provide great control over the layout of the landscape, while the later stages take care of the details with a high degree of automation. Our evaluation shows that vast landscapes can be created in under half a minute. Also, with our system, it is quite easy to create landscapes closely resembling real-world examples, highlighting its capability to create realistic-looking landscapes. Moreover, our implementation is easy to extend and can be integrated smoothly into existing workflows.

Published in:

Computer Graphics International (CGI), Geneva, Switzerland, September 12-16, 2022. LNCS vol. 13443.

Files:

Future Directions for XR 2021-2030: International Delphi Consensus Study

Jolanda G. Tromp, Gabriel Zachmann, Jerome Perret, Beatrice Palacco