SmartOT

SmartOT is a joint project that aims to develop an automatic lighting system and an intelligent control concept for operating theaters. The project partners are the Digital Media Lab, the Computer Graphics and Virtual Reality Research Lab (CGVR) and the TZI of the University of Bremen, Dr. Mach GmbH & Co. KG, Kizmo GmbH, Qioptiq Photonics GmbH & CO. KG and the University Clinic for Visceral Surgery at the Pius-Hospital Oldenburg. The project is funded by the German Federal Ministry of Education and Research (BMBF - Bundesministerium für Bildung und Forschung).

Previous surgical lamp solutions require frequent manual repositioning to ensure sufficiently good lighting conditions during surgery. To avoid the resulting distractions and short interruptions in the operating procedure, an automatic lighting system with a large number of light modules is being developed. This lighting system is designed to automatically detect and avoid shadows cast by surgeons and assistants and to illuminate the site as uniformly as possible.

Autonomous lighting control software (AuLiCo)

In the SmartOT project, we developed the Autonomous Lighting Control Software AuLiCo, which receives the depth images from multiple depth cameras as input, processes them, and outputs control commands for the individual lighting modules of the lighting system. This software is used both in the real demonstrator and in the VR simulator, where the Autonomous Lighting Control Software is loaded as a library.

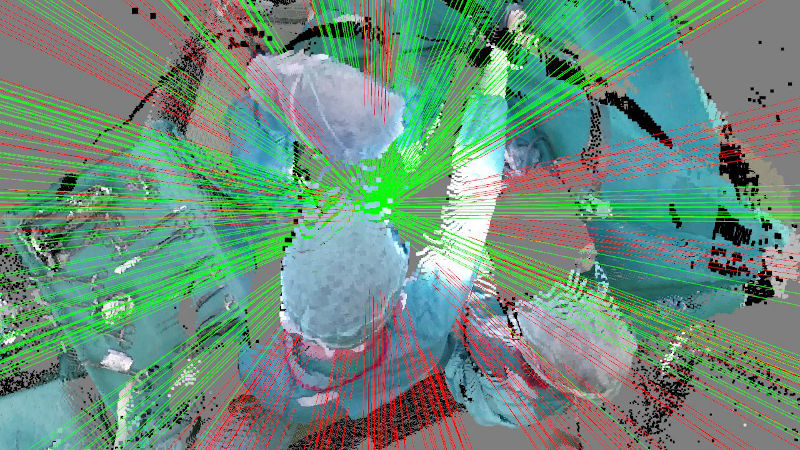

Figure 1: Light control and occlusion test during surgery.

Figure 2: Occlusion test between light modules and surgical wound.

Architecture and details (AuLiCo)

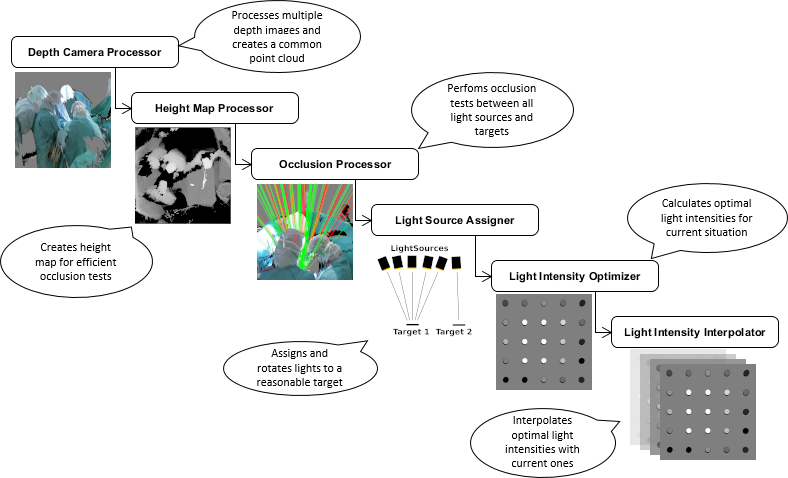

Processing

The idea is to create a common height map from the point clouds of multiple depth sensors. Using this height map, we can efficiently test whether the light from a light module is blocked by surgeons on its way to the surgical wound. Based on whether the light of each light module reaches the surgical wound, we control the light modules so that the illumination of the surgical wound is as constant and shadow-free as possible. Light modules whose light is blocked are switched off completely in order to create as few shadows as possible.
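The height-map idea described above can be illustrated with a minimal Python sketch. It is not the AuLiCo implementation: the function names, the grid layout, the fixed-step sampling of the light path and the `margin` tolerance are all assumptions made for illustration. Points are rasterized into a grid that keeps the highest value per cell, and a light module counts as blocked if the height map rises above the straight line from the module to the target.

```python
import math

def build_height_map(points, cell_size, width, depth):
    """Rasterize (x, y, z) points into a grid that keeps the highest z per cell."""
    cols = int(math.ceil(width / cell_size))
    rows = int(math.ceil(depth / cell_size))
    grid = [[0.0] * cols for _ in range(rows)]  # 0.0 = floor level (assumption)
    for x, y, z in points:
        r, c = int(y // cell_size), int(x // cell_size)
        if 0 <= r < rows and 0 <= c < cols:
            grid[r][c] = max(grid[r][c], z)
    return grid

def is_occluded(grid, cell_size, light, target, steps=200, margin=0.02):
    """Sample the segment light -> target; blocked if the height map is above it."""
    rows, cols = len(grid), len(grid[0])
    for i in range(1, steps):
        t = i / steps
        x = light[0] + t * (target[0] - light[0])
        y = light[1] + t * (target[1] - light[1])
        z = light[2] + t * (target[2] - light[2])
        r, c = int(y // cell_size), int(x // cell_size)
        if 0 <= r < rows and 0 <= c < cols and grid[r][c] > z + margin:
            return True
    return False

def module_states(grid, cell_size, lights, target):
    """True = module stays on, False = module is switched off (light is blocked)."""
    return [not is_occluded(grid, cell_size, light, target) for light in lights]

# Hypothetical scene in metres: a wound at table height and a surgeon's head
# between the first light module and the wound.
wound = (1.0, 1.0, 1.0)
cloud = [wound, (1.0, 0.5, 1.8)]
height_map = build_height_map(cloud, cell_size=0.25, width=2.0, depth=2.0)
lights = [(1.0, 0.0, 2.5), (1.0, 2.0, 2.5)]
states = module_states(height_map, 0.25, lights, wound)
```

In this toy scene the first module is switched off because the head lies on its light path, while the second module stays on. A real system would likely traverse grid cells exactly instead of sampling at fixed steps.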

The following graphic illustrates the processing:

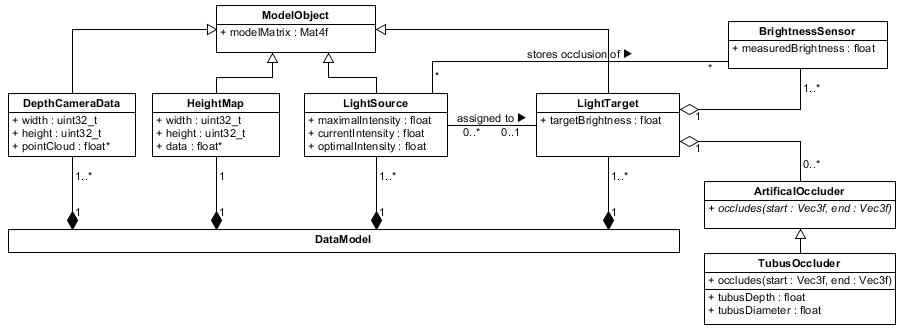

Data Model

The data model we work on essentially consists of the four classes DepthCameraData, HeightMap, LightSource and LightTarget. While DepthCameraData stores the preprocessed point cloud of a single depth sensor, the HeightMap class stores height information from the point clouds of all depth sensors in the area around the operating table. The LightSource class represents a single light module, which can be assigned to a single LightTarget, e.g. the surgical wound or a table with surgical tools. Furthermore, a LightTarget can own multiple BrightnessSensors at different locations for brightness optimization and multiple ArtificalOccluders, which are also considered by the Occlusion Processor.
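As a rough illustration of how these classes relate, the following Python sketch mirrors the description above with dataclasses. Only the class names come from the text; every field name and type is an assumption, and the actual AuLiCo classes may look quite different.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Point = Tuple[float, float, float]

@dataclass
class DepthCameraData:
    """Preprocessed point cloud of a single depth sensor."""
    sensor_id: int                                   # hypothetical field
    points: List[Point] = field(default_factory=list)

@dataclass
class HeightMap:
    """Height information merged from the point clouds of all depth sensors."""
    cell_size: float                                 # hypothetical field
    heights: List[List[float]] = field(default_factory=list)

@dataclass
class BrightnessSensor:
    position: Point                                  # hypothetical field

@dataclass
class ArtificalOccluder:                             # spelling as in the text
    center: Point                                    # hypothetical field
    radius: float                                    # hypothetical field

@dataclass
class LightTarget:
    """Something to be lit, e.g. the surgical wound or an instrument table."""
    name: str
    position: Point
    brightness_sensors: List[BrightnessSensor] = field(default_factory=list)
    artificial_occluders: List[ArtificalOccluder] = field(default_factory=list)

@dataclass
class LightSource:
    """A single light module, assigned to at most one LightTarget."""
    module_id: int
    position: Point
    target: Optional[LightTarget] = None
    enabled: bool = True

# Usage: one light module assigned to the surgical wound.
wound = LightTarget(name="surgical wound", position=(1.0, 1.0, 1.0))
wound.brightness_sensors.append(BrightnessSensor(position=(1.0, 1.0, 1.0)))
module = LightSource(module_id=0, position=(1.0, 0.0, 2.5), target=wound)
```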

The following class diagram gives an overview:

VR Simulator

Our VR simulator enables testing and further development of the new lighting system using a head-mounted display. We are currently extending the simulator with further functionality, e.g. the real-time simulation of the shadows that our own hands and arms cast in the light of the lighting system. In addition, we have built in functionality for attention tests, which are conducted under the direction of the University of Oldenburg. The 3D model of the operating room (Fig. 3 and Fig. 4) was created by project partner KIZMO.

Figure 3: Image of the VR Simulator.

Figure 4: Lighting system reacts to movements in the VR simulator.

Figure 5: Lighting Simulation with 100 light modules.

Light planning software

One of our first tasks was to find optimal lamp positions so that uniform, optimal lighting can be maintained during typical surgeries. To this end, we developed the light planning software in consultation with Dr. Mach and Qioptiq; it enables the testing and analysis of different light module configurations (number and position). In addition, point cloud recordings of real operations can be used to analyze the shadows expected from a given light module configuration.
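To make the notion of analyzing a configuration on recorded point clouds more concrete, here is a small Python sketch. It is not the optimization method used in the project. It assumes that an occlusion test has already produced a table `visibility[frame][module]` stating whether a candidate module position reaches the site in a recorded frame. The scoring functions and the greedy selection are hypothetical and only meant to illustrate the kind of analysis involved.

```python
def worst_case_coverage(visibility, config):
    """Smallest number of unblocked modules of `config` over all recorded frames."""
    return min(sum(frame[m] for m in config) for frame in visibility)

def mean_coverage(visibility, config):
    """Average number of unblocked modules of `config` per recorded frame."""
    return sum(sum(frame[m] for m in config) for frame in visibility) / len(visibility)

def greedy_select(visibility, candidates, k):
    """Pick k module positions one by one, preferring a good worst case."""
    chosen = []
    for _ in range(k):
        remaining = [m for m in candidates if m not in chosen]
        best = max(
            remaining,
            key=lambda m: (
                worst_case_coverage(visibility, chosen + [m]),
                mean_coverage(visibility, chosen + [m]),
            ),
        )
        chosen.append(best)
    return chosen

# Hypothetical data: 4 recorded frames, 4 candidate module positions.
visibility = [
    [True, False, True, False],
    [True, False, False, True],
    [False, True, True, False],
    [True, True, False, False],
]
config = greedy_select(visibility, candidates=[0, 1, 2, 3], k=2)
score = worst_case_coverage(visibility, config)
```

With this toy data, no single module is visible in every frame, but a pair of modules can guarantee that at least one module reaches the site in each frame.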

Figure 6: Manually testing various light module configurations.

Figure 7: Shadow of a recorded point cloud cast on a virtual site by different light modules.

Figure 8: Algorithm-based optimization of light module configurations on point clouds.

Recordings of open abdominal surgeries (depth images only)

Figure 9: Timelapse of surgery 4. Shown is the point cloud resulting from the three depth images of the Kinects. The points are colored according to the Kinect with which they were captured.

The following files are depth image recordings of real open abdominal surgeries. Each surgery was recorded simultaneously with three ceiling-mounted Microsoft Kinect v2 sensors to avoid occlusions by the conventional surgical lights. Due to memory limitations and for pre-processing, the files contain only about 1 frame per second per Kinect. The files provided do not contain color images.

| Surgery | Duration | Size (zipped) | Size (unzipped) | Note |
| --- | --- | --- | --- | --- |
| Surgery 1 | 2:08 h | 2.85 GiB | 9.26 GiB | Incomplete. |
| Surgery 2 | 4:02 h | 5.27 GiB | 17.6 GiB | Incomplete. |
| Surgery 3 | 3:00 h | 4.00 GiB | 13.0 GiB | Incomplete. Grid recording. |
| Surgery 4 | 3:28 h | 4.57 GiB | 15.0 GiB | Complete. Grid recording. |
| Surgery 5 | 2:24 h | 2.76 GiB | 10.4 GiB | Complete. |
| Surgery 6 | 3:27 h | 4.11 GiB | 15.0 GiB | Complete. |
| Surgery 7 | 5:45 h | 8.38 GiB | 25.1 GiB | Complete. |
| Surgery 8 | 3:00 h | 3.86 GiB | 13.0 GiB | Complete. |
| Surgery 9 | 3:11 h | 3.78 GiB | 13.8 GiB | Complete. Grid recording. |

Explanations to the notes:

- Incomplete: Surgery was not recorded to the end.

- Complete: Surgery was completely recorded.

- Grid recording: Contains recording of the grid for registration at the beginning.
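For planning downloads and processing, the table can be cross-checked with a back-of-the-envelope estimate. The Python sketch below takes the duration and unzipped size of surgery 4 from the table, assumes exactly 1 frame per second from each of the three Kinects (the recordings only approximate this rate), and compares the resulting bytes per frame with the native 512 × 424 depth resolution of the Kinect v2. It is only a plausibility check and says nothing authoritative about the actual file layout.

```python
GIB = 2 ** 30
KINECT_V2_DEPTH_PIXELS = 512 * 424  # native depth resolution of the Kinect v2

def estimate(hours, minutes, unzipped_gib, sensors=3, fps=1.0):
    """Return (approx. total frame count, approx. bytes per frame)."""
    seconds = hours * 3600 + minutes * 60
    frames = int(seconds * fps * sensors)  # assumes a constant frame rate
    return frames, unzipped_gib * GIB / frames

# Surgery 4: 3:28 h, 15.0 GiB unzipped (values from the table above).
frames, bytes_per_frame = estimate(3, 28, 15.0)
bytes_per_pixel = bytes_per_frame / KINECT_V2_DEPTH_PIXELS
```

Under these assumptions, surgery 4 contains on the order of 37,000 depth frames at roughly 2 bytes per depth pixel, which would be consistent with uncompressed 16-bit depth values.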

Each file contains the recordings of three Kinects for one surgery. In addition, each file contains the intrinsic camera parameters in the header as well as the model-to-world matrices of the Kinects, which were derived via our grid registration procedure. Since they are plain binary files, they can easily be read in any programming language without the need for additional libraries.
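Once a depth frame, the intrinsic parameters and a model-to-world matrix have been read, a depth pixel can be turned into a world-space point. The Python sketch below shows the standard pinhole back-projection followed by a 4×4 transform. The parameter names (`fx`, `fy`, `cx`, `cy`), the numeric values, the row-major matrix layout with column vectors and the use of metres are assumptions for illustration; the conventions actually used in the files are given in the file structure description.

```python
def backproject(u, v, depth, fx, fy, cx, cy):
    """Pinhole back-projection of pixel (u, v) with the given depth to camera space."""
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)

def transform(matrix, point):
    """Apply a 4x4 matrix (row-major, column-vector convention) to a 3D point."""
    x, y, z = point
    return tuple(
        matrix[r][0] * x + matrix[r][1] * y + matrix[r][2] * z + matrix[r][3]
        for r in range(3)
    )

# Hypothetical intrinsics, roughly in the range of a Kinect v2 depth camera.
fx, fy, cx, cy = 365.0, 365.0, 256.0, 212.0

# Hypothetical model-to-world matrix: camera mounted at (1, 1, 3), looking down.
model_to_world = [
    [1.0, 0.0, 0.0, 1.0],
    [0.0, -1.0, 0.0, 1.0],
    [0.0, 0.0, -1.0, 3.0],
    [0.0, 0.0, 0.0, 1.0],
]

camera_point = backproject(256, 212, 2.0, fx, fy, cx, cy)
world_point = transform(model_to_world, camera_point)
```

Applying each Kinect's own matrix to its back-projected points places all three point clouds in one common world coordinate system.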

Using the recordings:

- The binary file structure is briefly described [here].

- This data may only be used for scientific purposes. If you publish research that uses this material, cite the following paper: Fast and Robust Registration of Multiple Depth-Sensors and Virtual Worlds (2021)

Publications

- Fast, Accurate and Robust Registration of Multiple Depth Sensors without need for RGB and IR Images. The Visual Computer, Springer May 17, 2022.

- Optimizing the Arrangement of Fixed Light Modules in New Autonomous Surgical Lighting Systems, SPIE Medical Imaging, 2022.

- Fast and Robust Registration of Multiple Depth-Sensors and Virtual Worlds, Cyberworlds, 2021.

- Fast and Robust Registration and Calibration of Depth-Only Sensors (Best Poster Award), Eurographics, 2021. [Video, Code]

- Lattice Registration C++ Library: A small C++ library which implements our registration procedure to be used with arbitrary depth sensors.

- QT Demo Application (Windows x64 Build): Simple demo application to test our registration procedure with multiple Microsoft Azure Kinects [Screenshot]

- UE4 Project + Plugin (Source Code): An Unreal Engine 4.26 project with plugin implementing our registration procedure including the registration of depth sensors with the virtual world.

License

This original work is copyrighted by the University of Bremen.

Any software of this work is covered by the European Union Public Licence v1.2. To view a copy of this license, visit eur-lex.europa.eu.

Any other assets (3D models, movies, documents, depth-image recordings etc.) are covered by the Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License. To view a copy of this license, visit creativecommons.org.

If you use any of the assets or software to produce a publication, then you must give credit and put a reference in your publication.

If you would like to use our software in proprietary software, you can obtain an exception from the above license (aka dual licensing). Please contact zach at cs.uni-bremen dot de.

Related Links

- Further information: Project Website