VIVATOP

VIVATOP is a joint project of the Digital Media Lab and the Computer Graphics and Virtual Reality Lab (CGVR) at the University of Bremen, the Fraunhofer Institute for Digital Medicine (MEVIS), apoQlar GmbH, cirp GmbH, szenaris GmbH, and the University Clinic for Visceral Surgery at the Pius-Hospital Oldenburg. The project is funded by the German Federal Ministry of Education and Research (BMBF, Bundesministerium für Bildung und Forschung).

The aim of the VIVATOP project is to provide extensive support for surgeons in the operating room (OR) by combining 3D-printed organ models, RGB-D cameras, and modern augmented and virtual reality techniques. These techniques have the potential to greatly reduce risks, increase efficiency, and improve surgical outcomes. VIVATOP encompasses assistance systems for various stages, from preoperative planning to intraoperative support, as well as for training and education.

Using these techniques, we create a virtual multi-user environment in which surgeons and medical students can collaborate with each other and with external experts as if they were in the same room, represented by real-time personalized avatars. They can follow and assist in ongoing operations thanks to live-captured, 3D-reconstructed representations of the physical scene, and they can immersively inspect and interact with virtual 3D organs that are accurately reconstructed from the patient's data, which helps in planning the operation. 3D-printed organ models act as accompanying controllers and provide haptic feedback.

Short Introductory Video (German)

Use Cases

Preoperative Planning

Every surgery has to be extensively planned beforehand to minimize risks. We aim to assist surgeons during this phase by enabling them to collaborate with (external) colleagues in a multi-user virtual environment, where they will be able to view, inspect, and manipulate 3D patient data in the form of reconstructed virtual organs. A key feature for immersion and usability is the haptic feedback from the physical 3D-printed organ models, which act as controllers for the corresponding virtual models.

Intraoperative Support

During a live surgery, multiple depth cameras will capture the ongoing operation. This data will be streamed into the virtual environment, where a 3D (point cloud) reconstruction is rendered so that external experts can view and support the surgery. Additionally, users can be represented by real-time personalized point cloud avatars.

Training

The training of students and doctors is another vital use case that greatly benefits from the physical and virtual organ models as well as the collaborative VR applications.

Our Contributions

Within the VIVATOP project, our department is mainly responsible for the general multi-user VR OR environment and for the research and development of a live-streaming solution for the RGB-D data. This includes compression and filtering as well as point cloud rendering.

Multi-User Virtual OR

The VR environment is built on Unreal Engine 4 and uses a client-server architecture. It features a lobby system for concurrent sessions, room-scale VR with teleport locomotion, 3D avatars (both basic and live-reconstructed ones), and interactive organ models.
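
The lobby bookkeeping for concurrent sessions can be pictured with a small, engine-independent sketch. The actual environment is built on Unreal Engine 4; this Python version only illustrates server-side session tracking, and the class and method names, the per-session user limit, and the rule that a user is in at most one session at a time are illustrative assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class Session:
    """One concurrent VR session hosted by the server (hypothetical model)."""
    name: str
    max_users: int
    users: set[str] = field(default_factory=set)


class Lobby:
    """Tracks concurrent sessions and which session each user is in."""

    def __init__(self) -> None:
        self.sessions: dict[str, Session] = {}
        self.user_session: dict[str, str] = {}

    def create_session(self, name: str, max_users: int = 8) -> Session:
        if name in self.sessions:
            raise ValueError(f"session {name!r} already exists")
        session = Session(name, max_users)
        self.sessions[name] = session
        return session

    def join(self, user: str, name: str) -> bool:
        """Move `user` into session `name`; return False if unknown or full."""
        if self.user_session.get(user) == name:
            return True  # already a member, nothing to do
        session = self.sessions.get(name)
        if session is None or len(session.users) >= session.max_users:
            return False
        self.leave(user)  # assumed rule: at most one session per user
        session.users.add(user)
        self.user_session[user] = name
        return True

    def leave(self, user: str) -> None:
        """Remove `user` from whatever session they are currently in."""
        name = self.user_session.pop(user, None)
        if name is not None:
            self.sessions[name].users.discard(user)
```

Keeping the user-to-session mapping on the server makes switching sessions a single operation and lets the lobby reject joins to full sessions before any VR state is replicated.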

Point Cloud Streaming

The RGB-D data from the depth cameras set up in the operating room has to be streamed to the external experts in real time, so fast data processing and minimal latency are key requirements. To meet them, we developed a modular multi-camera streaming solution based on our library DynCam; more information is available on the DynCam page. Efficient compression is also needed to keep the required bandwidth low. To achieve the best results, we compress the color and depth images individually, and we developed novel, more effective lossless depth compression algorithms.
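
The sketch below is not our published depth codec; it only illustrates the general idea behind lossless predictive depth coding. It assumes a row-major 16-bit depth image: each pixel is predicted from its left neighbor (or from the pixel above in the first column), and the small residuals are handed to a general-purpose entropy coder, here Python's `zlib`. The function names are hypothetical.

```python
import array
import zlib


def _predict(depth: list[int], i: int, x: int, y: int, width: int) -> int:
    """Predict pixel i from already-decoded neighbors (same rule on both sides)."""
    if x > 0:
        return depth[i - 1]      # left neighbor
    if y > 0:
        return depth[i - width]  # first column: pixel above
    return 0                     # very first pixel: no neighbor available


def compress_depth(depth: list[int], width: int, height: int) -> bytes:
    """Losslessly compress a row-major 16-bit depth image."""
    residuals = array.array("i")  # signed ints, since residuals can be negative
    for y in range(height):
        for x in range(width):
            i = y * width + x
            residuals.append(depth[i] - _predict(depth, i, x, y, width))
    return zlib.compress(residuals.tobytes(), 9)


def decompress_depth(data: bytes, width: int, height: int) -> list[int]:
    """Invert compress_depth by re-running the predictor on decoded pixels."""
    residuals = array.array("i")
    residuals.frombytes(zlib.decompress(data))
    depth = [0] * (width * height)
    for y in range(height):
        for x in range(width):
            i = y * width + x
            depth[i] = _predict(depth, i, x, y, width) + residuals[i]
    return depth
```

Because encoder and decoder apply the identical predictor to identical data, the round trip is exact. On smooth surfaces most residuals are close to zero and compress well; on noisy sensor data the gains are smaller, which is where dedicated depth codecs with better predictors and entropy coders pay off.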

Other important tasks that our pipeline addresses are denoising, filtering, and merging the data, as well as the final computation of the point cloud.
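
The point cloud computation and merging steps can be illustrated with a minimal sketch. It assumes pinhole intrinsics (`fx`, `fy`, `cx`, `cy` in pixels), raw depth in millimeters with 0 marking invalid pixels, and camera-to-world poses already known from a prior registration step. All function names are hypothetical, and denoising and filtering are omitted.

```python
Point = tuple[float, float, float]


def depth_to_points(depth, width, height, fx, fy, cx, cy, depth_scale=0.001):
    """Back-project a row-major depth image into camera-space 3D points.

    Assumes a pinhole camera and raw depth in millimeters;
    depth_scale converts the raw values to meters.
    """
    points: list[Point] = []
    for v in range(height):
        for u in range(width):
            d = depth[v * width + u]
            if d == 0:
                continue  # 0 marks a missing measurement
            z = d * depth_scale
            points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points


def transform(points, pose):
    """Apply a rigid camera-to-world transform given as three [R | t] rows."""
    return [
        tuple(r[0] * x + r[1] * y + r[2] * z + r[3] for r in pose)
        for x, y, z in points
    ]


def merge_cameras(cameras):
    """Concatenate all cameras' points in one shared world frame.

    Each entry is (depth, width, height, (fx, fy, cx, cy), pose).
    """
    merged = []
    for depth, width, height, intrinsics, pose in cameras:
        merged.extend(transform(depth_to_points(depth, width, height, *intrinsics), pose))
    return merged
```

Merging by simple concatenation keeps overlapping regions from several cameras as duplicate points; a real pipeline would typically filter or fuse them afterwards.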

Point Cloud Visualization and Avatars

For accurate, low-latency 3D visualization of the RGB-D data, we developed two fast point cloud rendering solutions that can dynamically render large point clouds directly in Unreal Engine 4. The first is a direct splatting approach using Unreal's Niagara particle system. The second is a fast mesh reconstruction that exploits the ordered (image-grid) structure of the data and dynamically transforms the vertices of a regular plane mesh. Remote users who have RGB-D cameras of their own can also be visualized as point cloud avatars.
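
The idea behind the second approach, that an ordered depth image already defines the mesh topology, can be sketched as follows. This is not our Unreal implementation; it assumes a row-major depth image whose pixel `i` corresponds to vertex `i` of a regular plane mesh, and a hypothetical `max_jump` threshold (in raw depth units) for dropping triangles that would bridge depth discontinuities.

```python
def grid_triangles(depth, width, height, max_jump=50):
    """Return vertex-index triples for a mesh over an ordered depth image.

    Each 2x2 pixel quad yields up to two triangles. Triangles that touch a
    missing pixel (depth 0) or span a depth jump larger than max_jump are
    dropped, so object silhouettes are not bridged by stretched faces.
    """
    def valid(*idx):
        ds = [depth[i] for i in idx]
        return min(ds) > 0 and max(ds) - min(ds) <= max_jump

    tris = []
    for y in range(height - 1):
        for x in range(width - 1):
            i00 = y * width + x   # top-left
            i10 = i00 + 1         # top-right
            i01 = i00 + width     # bottom-left
            i11 = i01 + 1         # bottom-right
            if valid(i00, i01, i10):
                tris.append((i00, i01, i10))
            if valid(i10, i01, i11):
                tris.append((i10, i01, i11))
    return tris
```

Since the grid topology never changes, only the vertex positions (back-projected from the current depth frame) and the validity test need updating per frame. The triangle winding order shown here is arbitrary and would have to match the engine's convention.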

Medical Volume Rendering

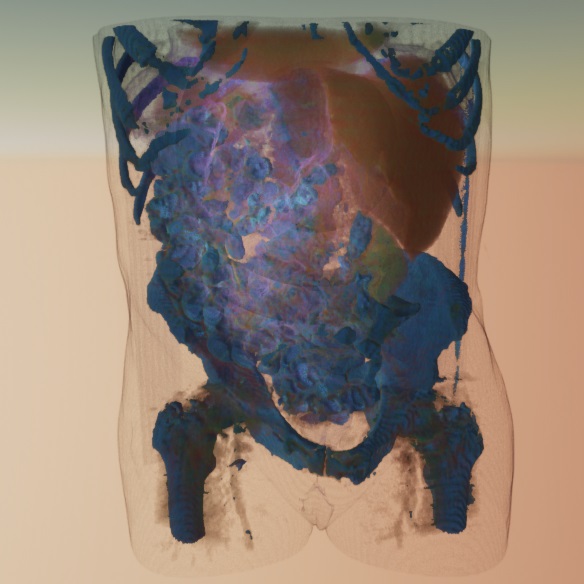

The 3D visualization of volumetric medical data such as CT scans is another important topic we are working on. The 3D game engines we use for the VR environment usually do not provide suitable solutions out of the box. As part of a master's thesis, a direct volume renderer for CT data was developed and integrated into Unreal Engine 4, allowing high-quality real-time visualization in VR. For more information, we refer to the related page Ray-Marching-Based Volume Rendering of Computed Tomography Data in a Game Engine.
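
As a generic illustration of the ray-marching technique (not the renderer from the thesis), the following sketch performs front-to-back alpha compositing along a single ray with early ray termination. The `sample` callback stands in for a trilinear lookup into the CT volume, and the Hounsfield-unit thresholds and colors in `ct_transfer` are illustrative assumptions. Opacity correction for varying step sizes is omitted for brevity.

```python
from typing import Callable

RGBA = tuple[float, float, float, float]


def ct_transfer(hu: float) -> RGBA:
    """Illustrative transfer function mapping Hounsfield units to color and opacity."""
    if hu >= 300.0:
        return (1.0, 1.0, 1.0, 0.8)   # bone-like densities: bright, fairly opaque
    if 20.0 <= hu <= 80.0:
        return (0.8, 0.2, 0.2, 0.05)  # soft-tissue range: faint red
    return (0.0, 0.0, 0.0, 0.0)       # everything else: fully transparent


def march_ray(sample: Callable[[float], float],
              transfer: Callable[[float], RGBA],
              t_start: float, t_end: float, step: float,
              early_exit: float = 0.99) -> RGBA:
    """Front-to-back compositing of samples taken every `step` along a ray."""
    r = g = b = a = 0.0
    t = t_start
    while t <= t_end and a < early_exit:  # early termination once nearly opaque
        sr, sg, sb, sa = transfer(sample(t))
        w = (1.0 - a) * sa  # share of this sample not hidden by closer samples
        r += w * sr
        g += w * sg
        b += w * sb
        a += w
        t += step
    return (r, g, b, a)
```

Compositing front to back is what makes early termination possible: once the accumulated opacity approaches 1, samples further along the ray can no longer change the pixel noticeably, which saves work on dense structures such as bone.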

Videos

Publications

- Introducing Virtual & 3D-Printed Models for Improved Collaboration in Surgery, at the 18th Annual Meeting of the German Society for Computer- and Robot-Assisted Surgery (CURAC 2019), Reutlingen, Germany, September 19 - 21, 2019. [Paper preprint]

- Application Scenarios for 3D-Printed Organ Models for Collaboration in VR & AR, at Mensch und Computer 2019 (MuC 2019), Hamburg, Germany, September 08 - 11, 2019.

- Improved Lossless Depth Image Compression, in the Journal of WSCG, Vol. 28, No. 1-2, ISSN 1213-6972; selected paper from WSCG'20, Pilsen, Czech Republic, May 19 - 21, 2020. [Paper]

- Volumetric Medical Data Visualization for Collaborative VR Environments, at EuroVR 2020, Valencia, Spain, November 27 - 29, 2020. [Paper]

- Fast and Robust Registration and Calibration of Depth-Only Sensors, at Eurographics 2021 (EG 2021) Posters, Vienna, Austria, May 03 - 07, 2021. [Paper]

- Fast, Accurate and Robust Registration of Multiple Depth Sensors without need for RGB and IR Images, in The Visual Computer, Springer, May 17, 2022. Selected paper from the 2021 International Conference on Cyberworlds (CW), Caen, France, September 28 - 30, 2021. [Paper]

- Evaluation of Point Cloud Streaming and Rendering for VR-based Telepresence in the OR, at EuroXR 2022, Stuttgart, Germany, September 14 - 16, 2022. [Paper]

Related Links

Press

- May 2022: NDR Visite Report (in German) “Leberkrebs: Holomedizin liefert bei Operation 3-D-Bilder” (accessible until 17th May 2023)

- March 2022: NWZ Report (local newspaper) “3D-printing and virtual reality reach medicine” (in German)

- March 2022: Increased Patient Safety through three-dimensionality (in German)

- March 2021: VIVATOP: More Insight during Liver Surgery