DynCam

DynCam is a platform-independent library for real-time, low-latency streaming of point clouds, with a focus on telepresence in VR.

With the spread of inexpensive VR headsets, collaborative virtual environments are playing an increasingly important role in many application areas. In these environments, virtual prototypes and simulations can be inspected and discussed. Remote experts can also join in and provide direct assistance with ongoing tasks in a telepresence-like fashion. Depth cameras are often used for the immersive representation of personalized avatars and of the local environment. However, occlusion and very high data rates are problems that have to be addressed. In addition, keeping latency minimal and rendering realistically are challenges that have to be solved as well.

With our library, the color and depth information of several distributed RGB-D cameras is processed, combined, and streamed over the network. The resulting single point cloud can be rendered on multiple clients in real time. A number of fast compression methods reduce the bandwidth required for streaming. The library has a modular pipeline structure and follows a functional reactive programming approach in which the calculations are implicitly executed in parallel. This helps to keep latency low and makes the library easy to extend and integrate. We also provide a plugin for the Unreal Engine for fast and straightforward integration.
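To illustrate why a functional pipeline design lends itself to parallel execution, the following minimal sketch runs independent per-camera stages concurrently and then fuses their results. It is not DynCam's actual reactive framework: `process_camera`, `fuse`, and `run_pipeline` are hypothetical names, and the per-camera work is only a stand-in for registration and point cloud computation.

```python
from concurrent.futures import ThreadPoolExecutor


def process_camera(frame):
    """Hypothetical per-camera stage: a pure function of its input frame."""
    camera_id, depth_values = frame
    # Stand-in for registration / point cloud computation:
    # keep only valid (non-zero) depth samples.
    return [(camera_id, d) for d in depth_values if d > 0]


def fuse(clouds):
    """Hypothetical fusion stage: concatenates the per-camera results."""
    return [point for cloud in clouds for point in cloud]


def run_pipeline(frames):
    # Each camera branch depends only on its own frame, so the branches
    # can run concurrently; map() preserves the input order.
    with ThreadPoolExecutor() as pool:
        clouds = list(pool.map(process_camera, frames))
    return fuse(clouds)


frames = [("cam0", [0, 1200, 1350]), ("cam1", [900, 0, 0])]
fused = run_pipeline(frames)
```

Because the stages have no side effects, the scheduler is free to choose the execution order of the branches without changing the fused result.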

Main Features

- Fast computation and fusion of multiple point clouds

- Low-latency network streaming of point clouds

- Real-time compression of RGB-D data and point clouds

- Support for several RGB-D cameras

- Reactive, multithreaded architecture

- Modular, easy-to-extend design

- Recording and playback of RGB-D data

- Plugin for the Unreal Engine for easy integration

- Dynamic rendering of point clouds with our complementary Unreal Engine point cloud rendering technique

General Architecture

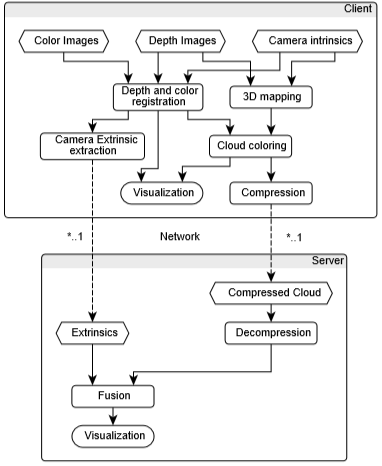

The pipeline consists of four main layers of nodes. The first layer comprises the interface to the depth camera and the camera intrinsics. In the second layer, the color and depth images are registered and, optionally, a 3D point cloud is computed early. After that, the extrinsic parameters are computed using a calibration pattern and, if the point cloud has already been generated, a colored point cloud is created. The last layer consists of either the local visualization of the point cloud or the compression and streaming of the data to remote instances. Compression and streaming can be applied either to the registered color image and undistorted depth image or to the point cloud itself. In the first case, the point cloud is computed on the remote instances. Finally, the point clouds are fused and visualized on each remote instance.
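The point cloud computation and the use of the extrinsic parameters can be sketched as follows. This is a minimal illustration assuming a standard pinhole camera model with intrinsics `fx`, `fy`, `cx`, `cy` and a rigid transform `p' = R p + t` per camera; the function names are hypothetical and are not taken from the DynCam code base.

```python
def back_project(depth, fx, fy, cx, cy):
    """Turn a depth image (rows of metres, 0 = invalid) into 3D points."""
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue  # skip pixels without a depth measurement
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


def apply_extrinsics(points, rotation, translation):
    """Map camera-space points into the shared frame: p' = R p + t."""
    return [
        tuple(
            sum(rotation[i][j] * p[j] for j in range(3)) + translation[i]
            for i in range(3)
        )
        for p in points
    ]


# Toy 2x2 depth image and an assumed camera pose (identity rotation,
# shifted by one metre along x).
depth = [[0.0, 2.0],
         [1.0, 0.0]]
camera_points = back_project(depth, fx=2.0, fy=2.0, cx=0.5, cy=0.5)
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
world_points = apply_extrinsics(camera_points, identity, (1.0, 0.0, 0.0))
```

Once every camera's points are expressed in the same coordinate frame, fusing the clouds amounts to concatenating the per-camera point lists.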

Compression and transmission of the data

To reduce network traffic, we provide several optional compression methods. We focus on computationally fast algorithms because we aim to keep latency minimal for a smooth real-time VR experience. Currently, the main approach is to compress and transmit the processed color and depth images and to compute the point cloud late, on the remote instances. In this case, we employ JPEG compression for the color information (PNG is optionally available as well) and a combination of quantization and LZ4 for the depth images. A second supported approach is to compute the point cloud early and to compress and transmit it directly, for which LZ4 can be used as well. We are currently working on several novel compression methods for both approaches.
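The idea of combining quantization with a fast lossless codec for depth images can be sketched as follows. The quantization step, the 16-bit packing, and all function names are assumptions for illustration only. To keep the sketch self-contained, `zlib` from the Python standard library stands in for LZ4 here; it is a different codec and only plays the role of the lossless stage.

```python
import struct
import zlib


def quantize_depth(depth_mm, step_mm):
    """Round each depth value (in millimetres) to the nearest multiple of step_mm."""
    return [(d + step_mm // 2) // step_mm for d in depth_mm]


def dequantize_depth(quantized, step_mm):
    """Undo the quantization up to a rounding error of at most step_mm / 2."""
    return [q * step_mm for q in quantized]


def compress_depth(depth_mm, step_mm=4):
    quantized = quantize_depth(depth_mm, step_mm)
    # Pack as little-endian unsigned 16-bit integers.
    raw = struct.pack(f"<{len(quantized)}H", *quantized)
    # zlib at its fastest level is a stand-in for LZ4 (an assumption of this sketch).
    return zlib.compress(raw, 1)


def decompress_depth(blob, step_mm=4):
    raw = zlib.decompress(blob)
    quantized = struct.unpack(f"<{len(raw) // 2}H", raw)
    return dequantize_depth(quantized, step_mm)


depth_mm = [0, 1201, 1203, 1250, 4000]
blob = compress_depth(depth_mm)
restored = decompress_depth(blob)
```

Quantization is the lossy part: it reduces the number of distinct values, which makes the data more compressible for the lossless stage, at the cost of a bounded error per depth sample.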

Visualization

For the real-time rendering of huge dynamic point clouds, we created a custom rendering technique for the Unreal Engine. This technique was implemented by Valentin Kraft in his master's thesis. Further information and the source code are available here: UE4_GPUPointCloudRenderer. The main idea is to combine dynamic textures, which store the position and color information for each point, with shader-based splatting. Each point is represented by a triangle, which the pixel shader renders as a circular splat. To obtain the correct rendering order, a novel compute-shader-based sorting technique was implemented.
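The data layout behind this technique can be illustrated on the CPU. The sketch below sorts points by their distance to the camera and lays out positions and colors in two square, texture-like grids with one point per texel. It assumes a back-to-front order, which the description above does not specify, and a plain CPU sort stands in for the compute shader; all names are hypothetical and not taken from UE4_GPUPointCloudRenderer.

```python
import math


def texture_side(count):
    """Smallest side length of a square texture with at least `count` texels."""
    return math.isqrt(count - 1) + 1 if count > 0 else 1


def pack_into_texture(values, fill):
    """Lay out one value per texel, row by row, padding unused texels."""
    side = texture_side(len(values))
    padded = list(values) + [fill] * (side * side - len(values))
    return [padded[r * side:(r + 1) * side] for r in range(side)]


def sort_back_to_front(points, colors, camera):
    """Order points by decreasing distance to the camera (assumed order)."""
    order = sorted(
        range(len(points)),
        key=lambda i: math.dist(points[i], camera),
        reverse=True,
    )
    return [points[i] for i in order], [colors[i] for i in order]


points = [(0.0, 0.0, 1.0), (0.0, 0.0, 5.0), (0.0, 0.0, 3.0)]
colors = ["red", "green", "blue"]
sorted_points, sorted_colors = sort_back_to_front(points, colors, (0.0, 0.0, 0.0))
position_texture = pack_into_texture(sorted_points, fill=(0.0, 0.0, 0.0))
color_texture = pack_into_texture(sorted_colors, fill="none")
```

Keeping positions and colors in two textures with identical layout means a shader can fetch both attributes of a point with the same texel coordinate.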

Publications

- DynCam: A Reactive Multithreaded Pipeline Library for 3D Telepresence in VR, Virtual Reality International Conference (VRIC) 2018, Laval, France, April 4-6, 2018.

Files

- Paper preprint

- Talk

- Source code (Warning: WIP version/research code. There may be bugs and features that do not work.)

Videos

License

This original work is copyrighted by the University of Bremen.

Any software of this work is covered by the European Union Public Licence v1.2.

To view a copy of this license, visit eur-lex.europa.eu.

The Thesis provided above (as PDF file) is licensed under Attribution-NonCommercial-NoDerivatives 4.0 International.

Any other assets (3D models, movies, documents, etc.) are covered by the Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.

To view a copy of this license, visit creativecommons.org.

If you use any of the assets or software to produce a publication, then you must give credit and put a reference in your publication.

If you would like to use our software in proprietary software, you can obtain an exception from the above license (also known as dual licensing).

Please contact zach at cs.uni-bremen dot de.