Redirected walking in virtual reality with auditory step feedback and rotation during eye blinks

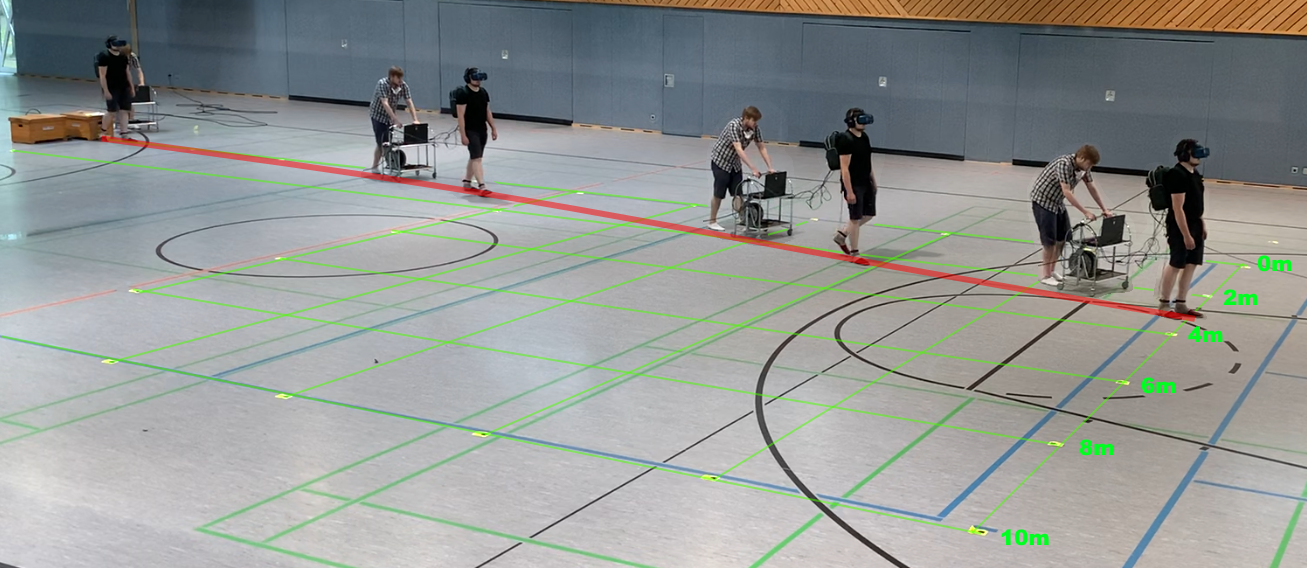

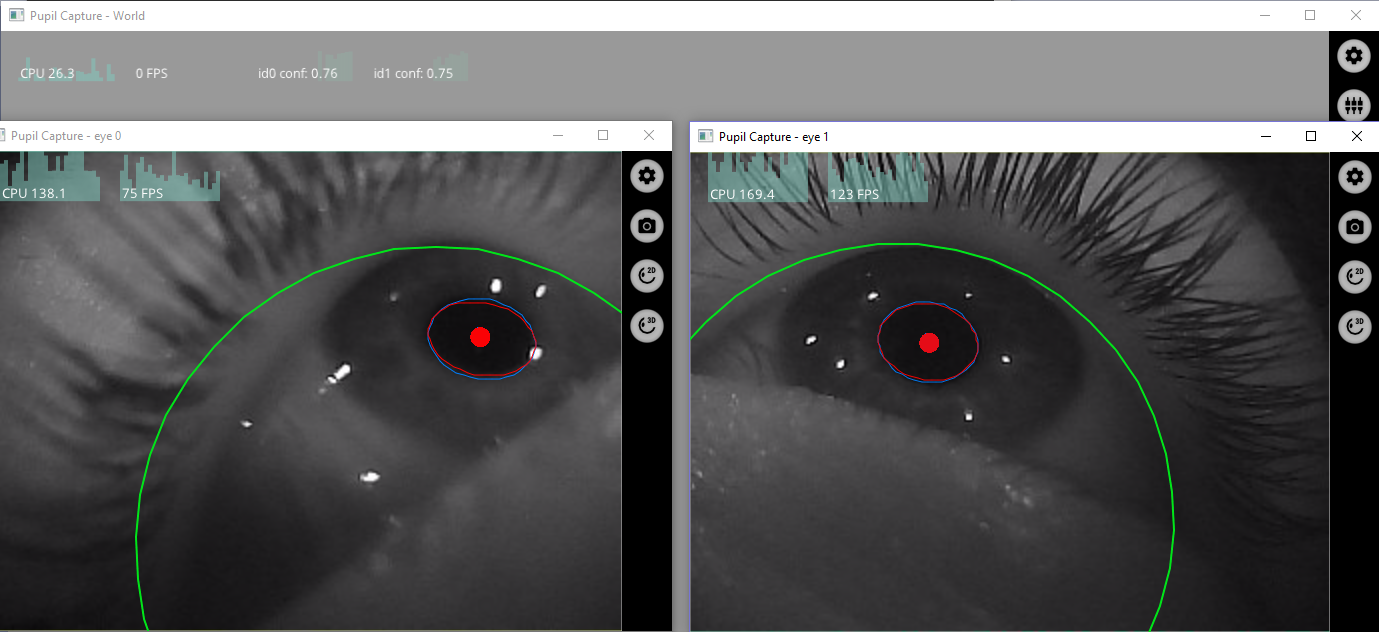

In this experiment, 20 participants were subjected to two different redirected walking methods in virtual reality. These manipulations should be imperceptible and have as few adverse health effects as possible. Such techniques could be used to map a larger virtual room onto a smaller real room, in which users unknowingly walk in circles. A new acoustic method and an already known visual method were used. In the known visual method, participants are rotated at the moment they blink; eye tracking from Pupil Labs was used to detect the blinks. The new acoustic method uses a self-built step detection based on an Arduino Uno: force sensors under the soles of the participants' shoes trigger a footstep sound, with as little latency as possible, whenever a foot touches the ground. This footstep-sound feedback was used to suggest a virtual acoustic deviation of the participants from their actual path, which was intended to provoke a real compensating movement.
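The two triggering mechanisms described above can be sketched as follows. This is a minimal Python sketch under stated assumptions, not the thesis implementation: step detection is modelled as a two-threshold (hysteresis) check on a single force-sensor reading, and the blink rotation as a fixed yaw offset injected at blink onset. The class names, thresholds and per-blink rotation angle are hypothetical placeholders.

```python
from dataclasses import dataclass, field


@dataclass
class StepDetector:
    """Hypothetical heel-strike detector for one force sensor under a shoe sole.

    Uses two thresholds (hysteresis) so a noisy reading near a single threshold
    does not fire several footstep sounds for one ground contact.
    Threshold values are placeholders; real values depend on the sensor and scaling.
    """
    on_threshold: float = 600.0   # foot counts as on the ground at or above this
    off_threshold: float = 400.0  # foot counts as lifted at or below this
    _down: bool = field(default=False, init=False)

    def update(self, reading: float) -> bool:
        """Feed one sensor reading; return True once per new ground contact."""
        if not self._down and reading >= self.on_threshold:
            self._down = True
            return True  # rising edge: trigger the footstep sound now
        if self._down and reading <= self.off_threshold:
            self._down = False  # foot lifted: re-arm the detector
        return False


@dataclass
class BlinkRedirector:
    """Hypothetical blink-triggered rotation: injects a yaw offset at blink onset.

    `deg_per_blink` is a placeholder gain, not the value used in the thesis.
    """
    deg_per_blink: float = 2.0
    applied_deg: float = field(default=0.0, init=False)  # total rotation so far
    _was_closed: bool = field(default=False, init=False)

    def update(self, eyes_closed: bool) -> float:
        """Feed one eye-tracker sample; return the yaw (degrees) to add this frame."""
        delta = 0.0
        if eyes_closed and not self._was_closed:
            # Rotate once, at the moment the eyes close.
            delta = self.deg_per_blink
            self.applied_deg += delta
        self._was_closed = eyes_closed
        return delta


if __name__ == "__main__":
    detector = StepDetector()
    readings = [100, 700, 650, 500, 300, 800, 200]
    print([i for i, r in enumerate(readings) if detector.update(r)])  # [1, 5]

    redirector = BlinkRedirector()
    print([redirector.update(c) for c in [False, True, True, False, True]])
    print(redirector.applied_deg)  # 4.0
```

The gap between the two thresholds keeps one ground contact from producing several footstep sounds; with real sensors it would have to be tuned to the measured force range.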

Description

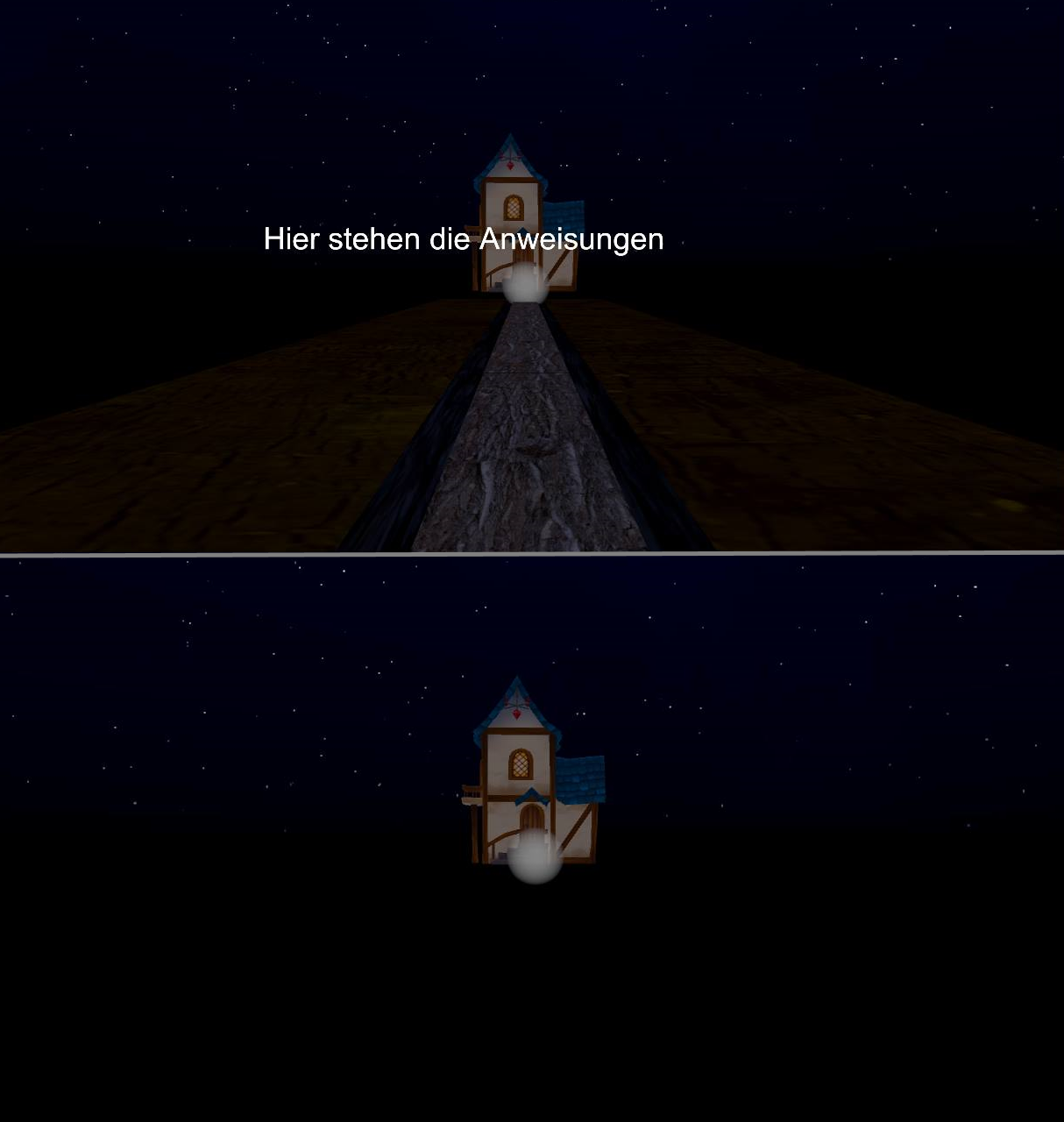

To test these two methods, each participant completed four runs in randomized order, in each of which they were to walk along a straight virtual path towards a house. The virtual environment was built with the Unity 3D engine. One run had no manipulation, one used the acoustic method, one the visual method, and one a combination of both. The path to the house was hidden at the start of each run, so only the target (the house) and the sound of the participants' own footsteps could be used for orientation. The footstep sounds depended on the surface: steps on the gravel path, in the puddles of water at the edges of the path, or on the grass beyond it. The real distance walked in this experiment was 20 m, which was made possible by the built-in sensors and room recognition of the HTC Vive Cosmos used. This HMD made it possible to use a tracking area that is large compared to those in previous work. The aim of this work was to investigate whether redirected walking by means of acoustic footstep feedback can cause a positive, imperceptible deviation of participants from a virtual path perceived as straight, and whether it can be combined with visual redirected walking.
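The run structure and the surface-dependent footstep sounds could be modelled roughly as below. This is a hedged Python sketch: the condition labels, the per-participant seed, the path half-width and edge-band width, and the idea of adding an injected lateral offset before choosing the surface sound are illustrative assumptions, not details taken from the thesis.

```python
import random

# Hypothetical labels for the four conditions described above.
CONDITIONS = ["none", "acoustic", "visual", "combined"]


def run_order(participant_seed: int) -> list[str]:
    """Return the four conditions in a random but reproducible order for one participant."""
    rng = random.Random(participant_seed)
    order = CONDITIONS.copy()
    rng.shuffle(order)
    return order


def footstep_surface(lateral_offset_m: float,
                     injected_offset_m: float = 0.0,
                     path_half_width_m: float = 0.75,
                     edge_width_m: float = 0.5) -> str:
    """Choose the footstep sound from the (possibly manipulated) distance to the path centre.

    lateral_offset_m: real sideways distance of the participant from the path centre.
    injected_offset_m: assumed acoustic manipulation added before the surface is chosen.
    The widths are placeholder values.
    """
    d = abs(lateral_offset_m + injected_offset_m)
    if d <= path_half_width_m:
        return "gravel"  # on the path
    if d <= path_half_width_m + edge_width_m:
        return "puddle"  # puddles at the edge of the path
    return "grass"       # beyond the path


if __name__ == "__main__":
    print(run_order(participant_seed=3))
    print(footstep_surface(0.0))                         # gravel
    print(footstep_surface(0.0, injected_offset_m=1.0))  # puddle
```

Under this assumption, a participant walking exactly along the path centre would hear puddle steps, as if drifting towards the edge, and a compensating step back towards the apparent centre would move them away from the real path.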

Results

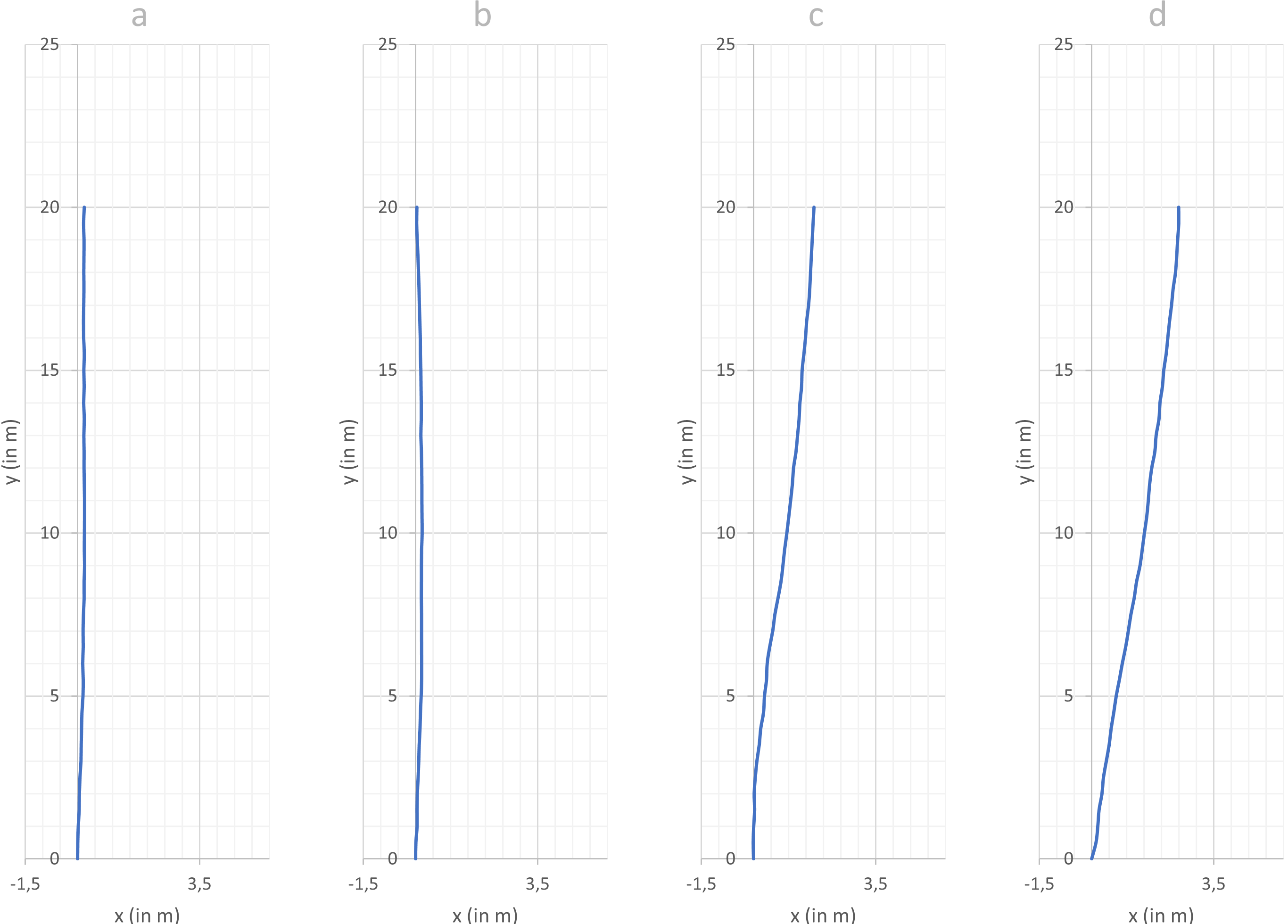

Evaluation of the questionnaires showed that the participants did not recognize either redirected walking method as a manipulation. Furthermore, the simulation had no negative effects on the participants' health. The two figures show the achieved deviation from the path as a box plot and the average paths walked (a = no manipulation, b = visual manipulation by blinking only, c = acoustic manipulation only, d = combination of blinking and acoustic manipulation). In the box plot, the abbreviation ASRDW stands for acoustic step feedback redirected walking. As the figures show, there was no significant difference between the scenario without manipulation and the scenario with blinking, which may be due to the manipulation strength applied. Paradoxically, however, the combination of both methods produced a significantly larger deviation than the purely acoustic manipulation, even though blinking alone had no measurable effect. The combination resulted in an average unnoticed deviation of 295.5 cm, with deviations of up to 510 cm. The acoustic method alone achieved an average deviation of 167.5 cm, with deviations of up to 270 cm, which is significantly larger than in the scenario without manipulation. Since the manipulation strengths were set low in this work, the potential of the acoustic method presented here remains to be explored further.
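The deviation reported above can be read as the lateral distance of the tracked position from the straight line between start and target. A minimal Python sketch of that measure and of the five-number summary a box plot is drawn from follows; the (x, z) floor-plane convention in metres, the function names and the use of `statistics.quantiles` (default "exclusive" method) are assumptions, and the thesis may have defined or computed the deviation differently.

```python
import math
import statistics


def lateral_deviation_cm(points, start, target):
    """Signed perpendicular distance (cm) of each (x, z) point from the start-target line.

    Coordinates are assumed to be in metres on the floor plane; the sign tells
    which side of the line the point lies on.
    """
    sx, sz = start
    dx, dz = target[0] - sx, target[1] - sz
    length = math.hypot(dx, dz)
    if length == 0:
        raise ValueError("start and target must differ")
    # 2D cross product of (point - start) with the line direction, divided by its length.
    return [100.0 * ((px - sx) * dz - (pz - sz) * dx) / length for px, pz in points]


def max_abs_deviation_cm(points, start, target):
    """Largest sideways distance from the straight line over one run."""
    return max(abs(d) for d in lateral_deviation_cm(points, start, target))


def box_summary(values):
    """Five-number summary (min, quartiles, max) from which a box plot can be drawn."""
    q1, median, q3 = statistics.quantiles(values, n=4)
    return {"min": min(values), "q1": q1, "median": median, "q3": q3, "max": max(values)}


if __name__ == "__main__":
    # Made-up example run on a 20 m straight line along z, not data from the study.
    path = [(0.0, 0.0), (0.5, 5.0), (1.2, 10.0), (0.8, 15.0)]
    print(max_abs_deviation_cm(path, (0.0, 0.0), (0.0, 20.0)))  # 120.0
```

Many plotting tools draw whiskers at 1.5 times the interquartile range rather than at the minimum and maximum, so a plotted box may differ slightly from this summary.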

Files

Full version of the bachelor's thesis (German only)

License

This original work is copyrighted by the University of Bremen.

Any software of this work is covered by the European Union Public Licence v1.2.

To view a copy of this license, visit eur-lex.europa.eu.

The thesis provided above (as a PDF file) is licensed under the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.

Any other assets (3D models, movies, documents, etc.) are covered by the Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.

To view a copy of this license, visit creativecommons.org.

If you use any of the assets or software to produce a publication, you must give credit and include a reference in your publication.

If you would like to use our software in proprietary software, you can obtain an exception from the above license (i.e. dual licensing).

Please contact zach at cs.uni-bremen dot de.