A Volume-Based Penetration Measure for 6DOF Haptic Rendering of Streaming Point Clouds

We developed an algorithm that computes the penetration volume between 3D CAD objects and unknown environments scanned live by a Microsoft Kinect. It is based on our inner sphere packings, which allow the algorithm to compute an approximate penetration volume in less than 1 millisecond. We used the algorithm to implement 6-DOF haptic rendering of dynamic virtual environments.

Description

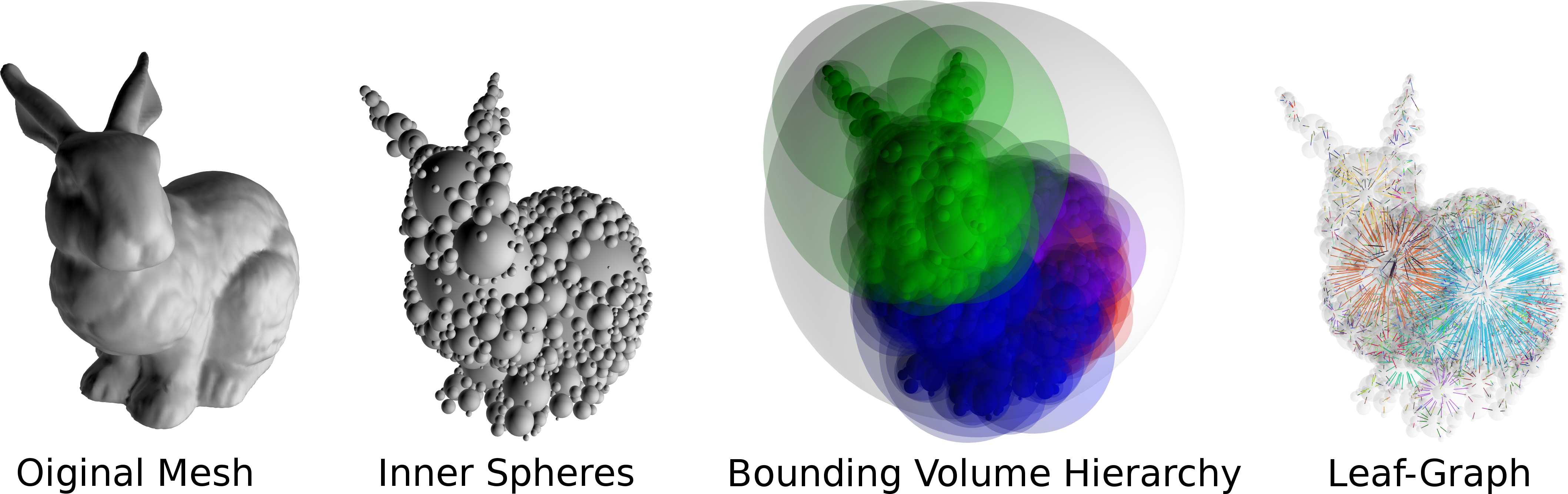

Collision detection and collision resolution are fundamental problems in most computer simulations. Usually, crude approximations of the volume of a 3D CAD object suffice, for example simple bounding volumes such as axis-aligned bounding boxes (AABBs) or oriented bounding boxes (OBBs). Inner spheres approximate the volume of a 3D model much more precisely. In addition, we build a connected graph over the inner spheres that represents how forces propagate through the object's volume.
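To illustrate what such a graph can look like, the sketch below links two inner spheres whenever they overlap or touch. The `(center, radius)` representation, the `build_sphere_graph` name, the `tolerance` parameter, and the overlap criterion itself are assumptions for illustration only; the thesis may define adjacency differently, and a real implementation would use a spatial data structure instead of the O(n²) pair loop.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical representation of one inner sphere: (center, radius).
Sphere = Tuple[Tuple[float, float, float], float]


def build_sphere_graph(spheres: List[Sphere], tolerance: float = 0.0) -> Dict[int, List[int]]:
    """Adjacency lists linking spheres whose surfaces overlap, touch, or lie within `tolerance`.

    Assumed criterion: dist(c_i, c_j) - (r_i + r_j) <= tolerance.
    Brute-force O(n^2) sketch over all sphere pairs.
    """
    graph: Dict[int, List[int]] = {i: [] for i in range(len(spheres))}
    for i in range(len(spheres)):
        ci, ri = spheres[i]
        for j in range(i + 1, len(spheres)):
            cj, rj = spheres[j]
            gap = math.dist(ci, cj) - (ri + rj)  # negative means overlap
            if gap <= tolerance:
                graph[i].append(j)
                graph[j].append(i)
    return graph
```

For three unit spheres centered at x = 0, 2, and 5, only the first two touch, so the default call links 0 and 1 and leaves sphere 2 isolated; a tolerance of 1.0 would also link spheres 1 and 2.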

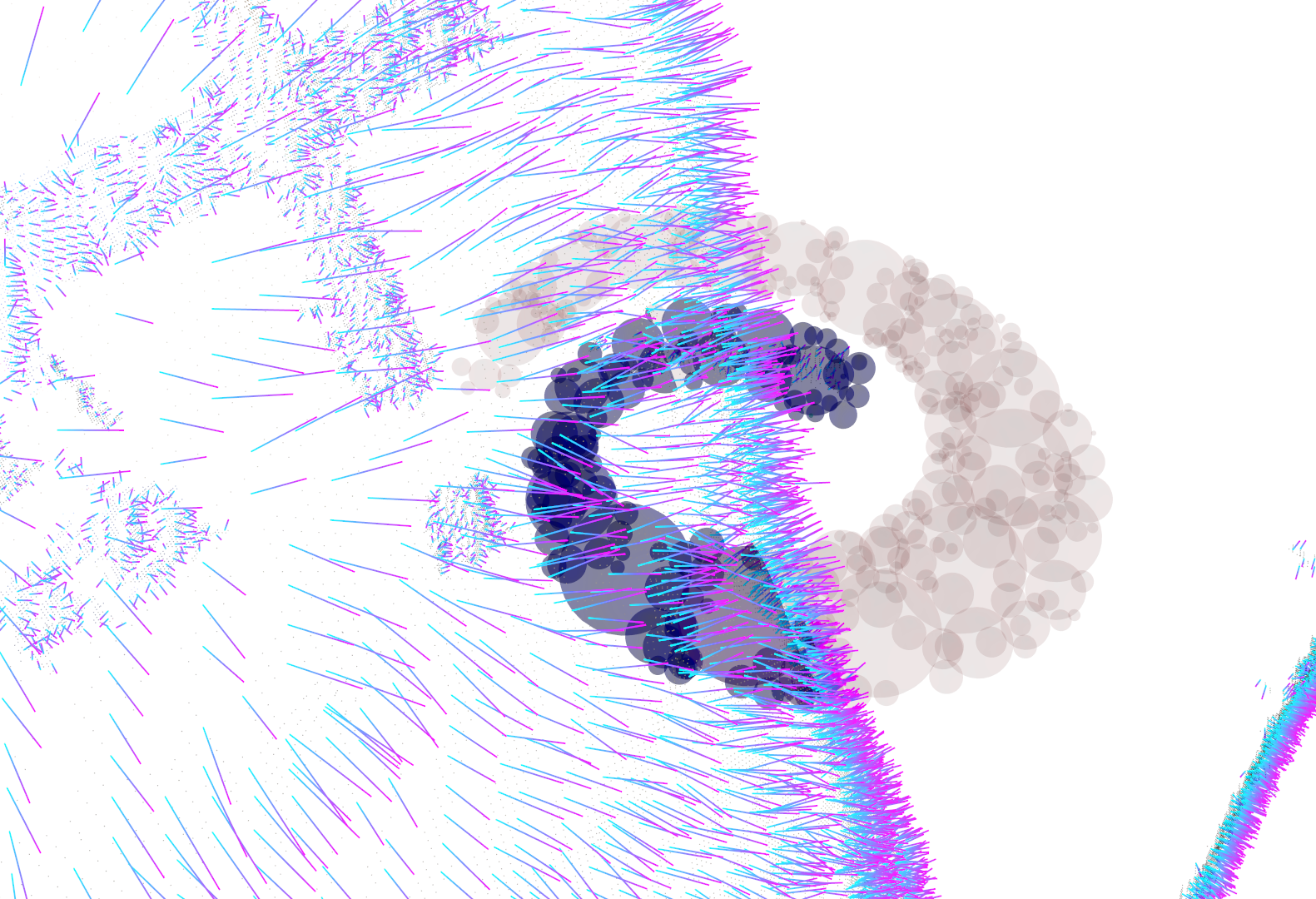

We used a Kinect to approximate the real environment by a point cloud. The point cloud is generated in real time and augmented with surface normals, which are obtained by fitting local planes via principal component analysis (PCA). We also designed an interpolation method for the point cloud that alleviates the Kinect's low frame rate (30 Hz, far below the 1 kHz haptic update rate).
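Normal estimation by local plane fitting can be sketched as follows: the normal of a small neighborhood of points is the eigenvector of their covariance matrix C with the smallest eigenvalue. To stay dependency-free, this hypothetical `estimate_normal` finds that eigenvector by power iteration on trace(C)·I - C, whose largest eigenvalue corresponds to the smallest eigenvalue of C; a production version would more likely call a linear-algebra routine such as NumPy's `numpy.linalg.eigh`. How neighborhoods are chosen (for example, a pixel window in the Kinect depth image) and the `orient_towards` helper are assumptions, not details taken from the thesis.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def covariance(points: Sequence[Vec3]) -> List[List[float]]:
    """3x3 covariance matrix of a non-empty neighborhood of points."""
    n = len(points)
    mean = [sum(p[k] for p in points) / n for k in range(3)]
    cov = [[0.0] * 3 for _ in range(3)]
    for p in points:
        d = [p[k] - mean[k] for k in range(3)]
        for a in range(3):
            for b in range(3):
                cov[a][b] += d[a] * d[b] / n
    return cov


def estimate_normal(points: Sequence[Vec3], iterations: int = 50) -> Vec3:
    """Unit normal of the least-squares plane through `points` (sign is arbitrary)."""
    c = covariance(points)
    trace = c[0][0] + c[1][1] + c[2][2]
    # The smallest eigenvalue of C becomes the largest one of trace*I - C.
    m = [[(trace if a == b else 0.0) - c[a][b] for b in range(3)] for a in range(3)]
    best, best_score = (0.0, 0.0, 1.0), -1.0
    # Several start vectors: at least one is not orthogonal to the answer.
    for start in ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)):
        v = list(start)
        for _ in range(iterations):
            w = [sum(m[a][b] * v[b] for b in range(3)) for a in range(3)]
            norm = math.sqrt(sum(x * x for x in w))
            if norm == 0.0:
                break  # degenerate neighborhood, e.g. all points identical
            v = [x / norm for x in w]
        # Rayleigh quotient: the largest value marks the dominant eigenvector.
        score = sum(v[a] * sum(m[a][b] * v[b] for b in range(3)) for a in range(3))
        if score > best_score:
            best, best_score = (v[0], v[1], v[2]), score
    return best


def orient_towards(normal: Vec3, point: Vec3, viewpoint: Vec3) -> Vec3:
    """Flip `normal` so that it faces the sensor viewpoint."""
    facing = sum(normal[k] * (viewpoint[k] - point[k]) for k in range(3))
    return normal if facing >= 0.0 else (-normal[0], -normal[1], -normal[2])
```

PCA leaves the sign of the normal undefined, which is why the sketch orients each normal towards the sensor afterwards; for a depth camera, the viewpoint is simply the camera origin.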

We developed a penetration measure between the inner spheres and the point cloud. It first finds the colliding spheres and then determines which of the non-colliding spheres lie inside the penetrated point cloud and which lie outside. For haptic rendering, we combine the surface normals mentioned above with the penetration volume of each sphere to compute a physically plausible force that resolves the collision. This force is applied to a haptic device, which lets the user interact with the virtual scene.
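The per-sphere penetration volume can be approximated under a simplifying assumption: treat the point cloud near each sphere as a plane given by a surface point and its unit normal, pointing into free space. The part of a sphere of radius r that lies behind the plane is then a spherical cap of height h with volume πh²(3r - h)/3, and a fully submerged sphere (h = 2r) contributes its whole volume 4πr³/3. The sketch below, with hypothetical names `cap_volume` and `penalty_wrench` and a hypothetical `stiffness` gain, weights each sphere's normal by its penetration volume to form a force and sums the resulting torques about the tool center for 6-DOF output. It is a penalty-style illustration, not the exact force model of the thesis.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def cap_volume(radius: float, depth: float) -> float:
    """Volume of the part of a sphere that lies behind a plane.

    `depth` is how far the sphere reaches past the plane; it is clamped to
    [0, 2 * radius], so a fully submerged sphere counts with its full volume.
    """
    h = min(max(depth, 0.0), 2.0 * radius)
    return math.pi * h * h * (3.0 * radius - h) / 3.0


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def penalty_wrench(
    spheres: List[Tuple[Vec3, float]],
    planes: List[Tuple[Vec3, Vec3]],
    tool_center: Vec3,
    stiffness: float,
) -> Tuple[Vec3, Vec3, float]:
    """Return (force, torque, total penetration volume) for the tool.

    planes[i] = (point on surface, unit normal pointing into free space)
    is the assumed local surface estimate for spheres[i].
    """
    force = [0.0, 0.0, 0.0]
    torque = [0.0, 0.0, 0.0]
    total_volume = 0.0
    for (center, radius), (point, normal) in zip(spheres, planes):
        # Signed distance of the sphere center in front of the local plane.
        dist = sum((center[k] - point[k]) * normal[k] for k in range(3))
        volume = cap_volume(radius, radius - dist)
        if volume == 0.0:
            continue  # sphere is completely in free space
        total_volume += volume
        f = tuple(stiffness * volume * normal[k] for k in range(3))
        # Simplification: each sphere's force acts at the sphere center.
        lever = tuple(center[k] - tool_center[k] for k in range(3))
        t = cross(lever, f)
        for k in range(3):
            force[k] += f[k]
            torque[k] += t[k]
    return tuple(force), tuple(torque), total_volume
```

Weighting by volume rather than by depth means small spheres near the surface contribute little, while deeply submerged large spheres dominate the feedback.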

Results

To evaluate the quality and performance of the force feedback, we developed a simulation framework that can play back recorded or synthetic sensor data. This allowed us to compare different parameters and methods under identical conditions. We developed several algorithms with different strengths and weaknesses. Our first ideas were two brute-force approaches that ignore the sphere topology described by the leaf-graph (the connectivity graph over the inner spheres); instead, they perform a global search to determine whether a sphere is inside or outside. We analyzed performance by comparing the methods' average frame times during a haptic interaction recorded under realistic conditions: the haptic and Kinect sensor data were both recorded in live operation, and the average penetration was 10% of the haptic tool's volume.
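A topology-aware inside/outside test can be sketched as a breadth-first flood fill over the sphere graph: colliding spheres act as a wall, every non-colliding sphere reachable from a known outside sphere without crossing that wall is outside, and the remaining non-colliding spheres are inside. This is an illustrative assumption rather than the thesis's leaf-graph algorithm; the `classify_inside` name and the requirement that the caller supply at least one known-outside seed per outside region are hypothetical.

```python
from collections import deque
from typing import Deque, Dict, Iterable, List, Set


def classify_inside(
    graph: Dict[int, List[int]],
    colliding: Iterable[int],
    outside_seeds: Iterable[int],
) -> Set[int]:
    """Return the non-colliding spheres enclosed by the colliding ones.

    Assumes every outside region of the graph contains at least one seed;
    unreachable non-colliding spheres are reported as inside.
    """
    wall = set(colliding)
    outside: Set[int] = set()
    queue: Deque[int] = deque()
    for s in outside_seeds:
        if s not in wall and s not in outside:
            outside.add(s)
            queue.append(s)
    # Breadth-first flood fill that never crosses a colliding sphere.
    while queue:
        u = queue.popleft()
        for v in graph[u]:
            if v not in wall and v not in outside:
                outside.add(v)
                queue.append(v)
    return {i for i in graph if i not in wall and i not in outside}
```

The fill visits each sphere and graph edge at most once and never queries the point cloud, which is the main difference from a global per-sphere search.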

The performance analysis shows that all three approaches stay within an average frame time of 1 ms, as required for the desired haptic update rate of 1 kHz. The leaf-graph approach performs best at all tested sphere-packing sizes.

The synthetic benchmarks are deliberately designed so that their results are predictable. We generated a synthetic haptic interaction that moves the virtual tool linearly towards the point cloud, which is synthetically generated to approximate the inside of a sphere.
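A synthetic environment of this kind could be generated as below: points spread roughly uniformly over a sphere with a Fibonacci (golden-angle) lattice, each paired with a normal pointing towards the center, where the tool moves freely. The function name `hollow_sphere_cloud`, the lattice choice, and the inward-normal convention are assumptions; the source does not state how its synthetic cloud was sampled.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def hollow_sphere_cloud(
    radius: float, count: int, center: Vec3 = (0.0, 0.0, 0.0)
) -> List[Tuple[Vec3, Vec3]]:
    """(point, normal) pairs on a sphere surface, normals pointing inward."""
    golden_angle = math.pi * (3.0 - math.sqrt(5.0))
    cloud: List[Tuple[Vec3, Vec3]] = []
    for i in range(count):
        z = 1.0 - 2.0 * (i + 0.5) / count  # evenly spaced heights in (-1, 1)
        ring = math.sqrt(1.0 - z * z)        # radius of the latitude circle
        phi = golden_angle * i
        u = (ring * math.cos(phi), ring * math.sin(phi), z)  # unit direction
        point = (center[0] + radius * u[0],
                 center[1] + radius * u[1],
                 center[2] + radius * u[2])
        # Inward normal: the free space is inside the hollow sphere.
        normal = (-u[0], -u[1], -u[2])
        cloud.append((point, normal))
    return cloud
```

Because the wall geometry is known exactly, the penetration measured while the tool moves along a straight line can be compared against the expected values, which is what makes such a benchmark predictable.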

The computed penetration depths follow curves that closely match the cross-sections of the respective virtual tools along their main axes. Overall, the developed solution performs well in most situations and enables real-time haptic rendering of streaming point clouds.

Files

Full version of the master's thesis

Corresponding publication at the IEEE World Haptics Conference 2017

Here is a movie that shows the method in action:

License

This original work is copyright of the University of Bremen.

Any software of this work is covered by the European Union Public Licence v1.2. To view a copy of this license, visit eur-lex.europa.eu.

The thesis provided above (as a PDF file) is licensed under the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.

Any other assets (3D models, movies, documents, etc.) are covered by the Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License. To view a copy of this license, visit creativecommons.org.

If you use any of the assets or software to produce a publication, you must give credit and include a reference in your publication.

If you would like to use our software in proprietary software, you can obtain an exception from the above license (a.k.a. dual licensing). Please contact zach at cs.uni-bremen dot de.