Tracking of Nonrigid Objects by Runtime Multi-Domain Cluster Adaptation

This thesis develops and investigates a new approach for tracking general objects. It combines data from multiple sensors by clustering the results of weak classifiers and uses the resulting clusters to classify the input data.

Description

A fundamental task in computer vision is the detection of objects.

This thesis therefore develops and investigates a new approach to detecting general objects.

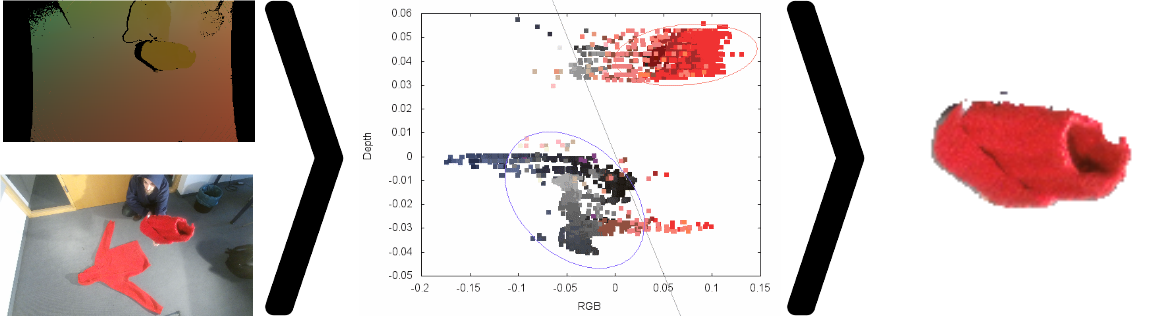

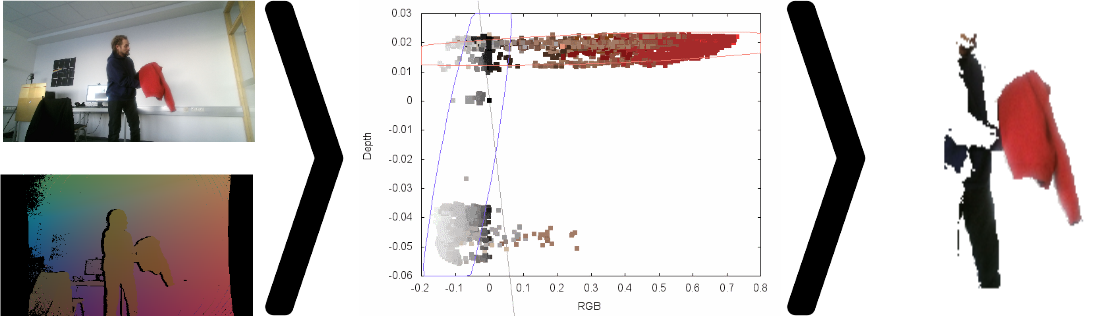

This approach classifies input data as either object or surroundings and tracks the object over time.

It combines an input image with data from multiple different sensors to compensate for uncertainty or failures of individual sensors.

In addition, the algorithm is designed so that its runtime benefits strongly from parallelization.

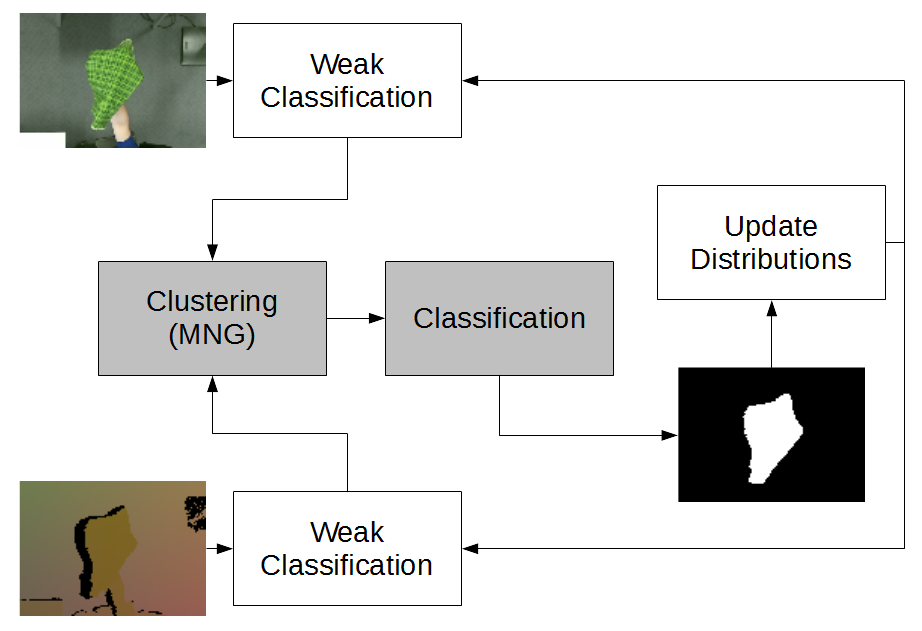

In order to compensate for the problem of comparing different measurement units from the different sensors, weak classifiers are applied independently to each input space.

For each input vector of each input space, these weak classifiers estimate its class; the estimates express congruence as well as uncertainty.
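As an illustration of such a weak classifier, here is a minimal sketch for a single one-dimensional input space. It assumes that the object and its surroundings are each modeled by a normalized histogram; this form, the function name `weak_estimate`, and the example values are assumptions made for illustration and are not taken from the thesis.

```python
from bisect import bisect_right

def weak_estimate(value, bin_edges, object_hist, surround_hist, eps=1e-9):
    """Return an estimate in [0, 1] that `value` belongs to the object.

    Assumption: both classes are modeled as normalized histograms
    over the same bins (hypothetical model, not from the thesis).
    """
    # Locate the histogram bin of the value, clamped to the valid range.
    idx = bisect_right(bin_edges, value) - 1
    idx = max(0, min(idx, len(object_hist) - 1))
    p_obj = object_hist[idx]
    p_sur = surround_hist[idx]
    # 1.0 = clearly object, 0.0 = clearly surroundings, 0.5 = undecided.
    return (p_obj + eps) / (p_obj + p_sur + 2 * eps)

# Hypothetical histograms over three bins of one input space.
edges = [0.0, 1.0, 2.0, 3.0]
obj = [0.8, 0.2, 0.0]
sur = [0.0, 0.2, 0.8]
estimates = [weak_estimate(v, edges, obj, sur) for v in (0.5, 1.5, 2.5)]
```

Because the output is a unitless value between 0 and 1, estimates from sensors with different measurement units become comparable.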

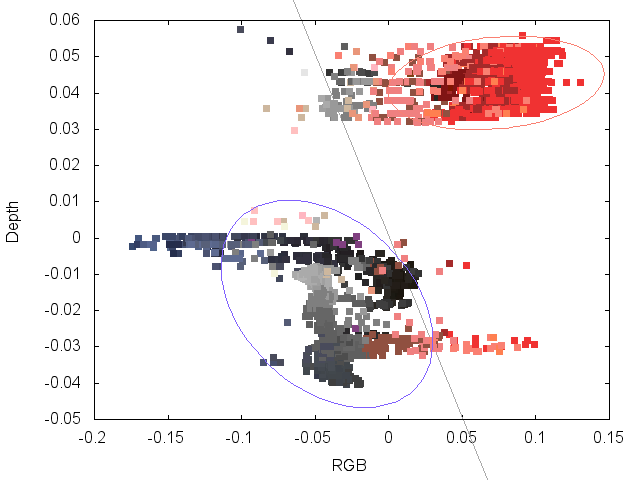

By combining the estimates of corresponding input vectors into estimation vectors, an estimation space is created.
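The combination step can be sketched as a simple transposition: each weak classifier delivers one estimate per element (for example, per pixel), and the estimates belonging to the same element form one estimation vector. The function name `build_estimation_space` and the two example input spaces are hypothetical.

```python
def build_estimation_space(estimates_per_space):
    """Combine per-space estimates into one estimation vector per element.

    estimates_per_space: list of lists; entry k holds the estimates of
    weak classifier k, and index i refers to the same element in every list.
    """
    lengths = {len(e) for e in estimates_per_space}
    if len(lengths) != 1:
        raise ValueError("all input spaces must cover the same elements")
    # zip(*...) transposes the lists: one tuple of estimates per element.
    return [tuple(vec) for vec in zip(*estimates_per_space)]

color_est = [0.9, 0.2, 0.6]  # hypothetical color-based estimates
depth_est = [0.8, 0.1, 0.5]  # hypothetical depth-based estimates
space = build_estimation_space([color_est, depth_est])
```

Each resulting vector has one dimension per weak classifier, so adding a sensor adds a dimension to the estimation space.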

This estimation space is clustered using Matrix Neural Gas (MNG) to combine the sensor data and compensate for inherent uncertainty.

Adapting the results from previous steps, the system tracks the object and creates two clusters representing the tracked object and its surroundings, which are then used to classify the data.
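The clustering and classification step can be sketched as follows. Note that this sketch uses plain batch neural gas with Euclidean distance as a simplified stand-in: it omits the per-prototype matrix adaptation that distinguishes MNG. Seeding the two prototypes with the result of the previous iteration is one assumed reading of "adapting the results from previous steps"; all names and values are hypothetical.

```python
import math

def _sqdist(x, p):
    return sum((a - b) ** 2 for a, b in zip(x, p))

def batch_neural_gas(data, prototypes, epochs=20, lam_start=1.0, lam_end=0.01):
    """Plain batch neural gas (Euclidean), a simplification of MNG."""
    protos = [list(p) for p in prototypes]
    dim = len(protos[0])
    for epoch in range(epochs):
        # Anneal the neighborhood range from lam_start down to lam_end.
        t = epoch / max(1, epochs - 1)
        lam = lam_start * (lam_end / lam_start) ** t
        num = [[0.0] * dim for _ in protos]
        den = [0.0] * len(protos)
        for x in data:
            # Rank prototypes by distance to x (rank 0 = closest).
            order = sorted(range(len(protos)), key=lambda i: _sqdist(x, protos[i]))
            for rank, i in enumerate(order):
                h = math.exp(-rank / lam)
                den[i] += h
                for d in range(dim):
                    num[i][d] += h * x[d]
        # Each prototype moves to the rank-weighted mean of the data.
        protos = [[n / den[i] for n in num[i]] for i in range(len(protos))]
    return protos

def assign(data, protos):
    """Index of the nearest prototype for every estimation vector."""
    return [min(range(len(protos)), key=lambda i: _sqdist(x, protos[i]))
            for x in data]

# Hypothetical estimation vectors: three object-like, three surroundings-like.
data = [(0.9, 0.8), (0.8, 0.9), (0.85, 0.95),
        (0.1, 0.2), (0.2, 0.1), (0.15, 0.05)]
# Prototype 0 = object, prototype 1 = surroundings (e.g. from the last frame).
protos = batch_neural_gas(data, [(0.6, 0.6), (0.4, 0.4)])
labels = assign(data, protos)  # 0 = object, 1 = surroundings
```

Keeping a fixed prototype order (object first, surroundings second) is what lets the cluster index double as the class label in this sketch.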

Based on the final classification, the input space distributions representing the object and its surroundings are updated and used as the basis for the weak classifiers of the next iteration.
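One way to picture this update is an exponential blend of the old distribution with the distribution of the newly classified data. The sketch below assumes histogram models and a fixed blending rate; the function name `update_histogram`, the `rate` parameter, and the example values are illustrative assumptions rather than the update rule used in the thesis.

```python
from bisect import bisect_right

def update_histogram(old_hist, values, bin_edges, rate=0.2):
    """Blend a normalized histogram of newly classified values into old_hist."""
    counts = [0.0] * len(old_hist)
    for v in values:
        # Locate the bin of the value, clamped to the valid range.
        idx = bisect_right(bin_edges, v) - 1
        idx = max(0, min(idx, len(counts) - 1))
        counts[idx] += 1.0
    total = sum(counts)
    if total == 0:
        # Nothing was classified into this class; keep the old model.
        return list(old_hist)
    new_hist = [c / total for c in counts]
    return [(1 - rate) * o + rate * n for o, n in zip(old_hist, new_hist)]

edges = [0.0, 1.0, 2.0, 3.0]
object_hist = [0.8, 0.2, 0.0]
# Hypothetical values that the final classification labeled as object.
object_hist = update_histogram(object_hist, [0.4, 1.2, 1.7, 1.9], edges)
```

A small `rate` makes the model change slowly, which limits the influence of a single misclassified frame but also slows adaptation to genuine changes in appearance.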

Results

Timing tests showed an average runtime of 200 ms.

This time marks an upper bound, since the algorithm itself allows further optimizations regarding its runtime, especially in terms of parallelization.

The tests also showed that the concept of tracking an object by clustering the output of multiple weak classifiers processing different types of sensor data can be used to compensate for inherent uncertainties.

However, only 13 of 35 sequences (about 37%) were classified correctly.

This is attributed to the strong inhomogeneity of the surroundings, which arises because all data not classified as part of the tracked object is gathered in the surroundings.

This leads to large distances between input vectors of the surroundings, which in turn result in large gaps in the estimation space.

Furthermore, small sets of misclassified input vectors can affect the relatively small set of input vectors classified as object, which leads to further misclassification.

Using the spatial domain of the Kinect amplifies these failures, since in this domain the object is indistinguishable from entities connected to it, e.g. persons holding the object. This is hard to avoid.

Files

Full version of the master's thesis (German only)

Here is a movie that shows a successful classification:

License

This original work is copyrighted by the University of Bremen.

Any software of this work is covered by the European Union Public Licence v1.2.

To view a copy of this license, visit eur-lex.europa.eu.

The thesis provided above (as a PDF file) is licensed under Attribution-NonCommercial-NoDerivatives 4.0 International.

Any other assets (3D models, movies, documents, etc.) are covered by the Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.

To view a copy of this license, visit creativecommons.org.

If you use any of the assets or software to produce a publication, then you must give credit and put a reference in your publication.

If you would like to use our software in proprietary software, you can obtain an exception from the above license (also known as dual licensing).

Please contact zach at cs.uni-bremen dot de.