Sculpting

As mentioned in the introduction, the hardware setup consists of the following devices:

- zSpace

- PHANTOM Omni

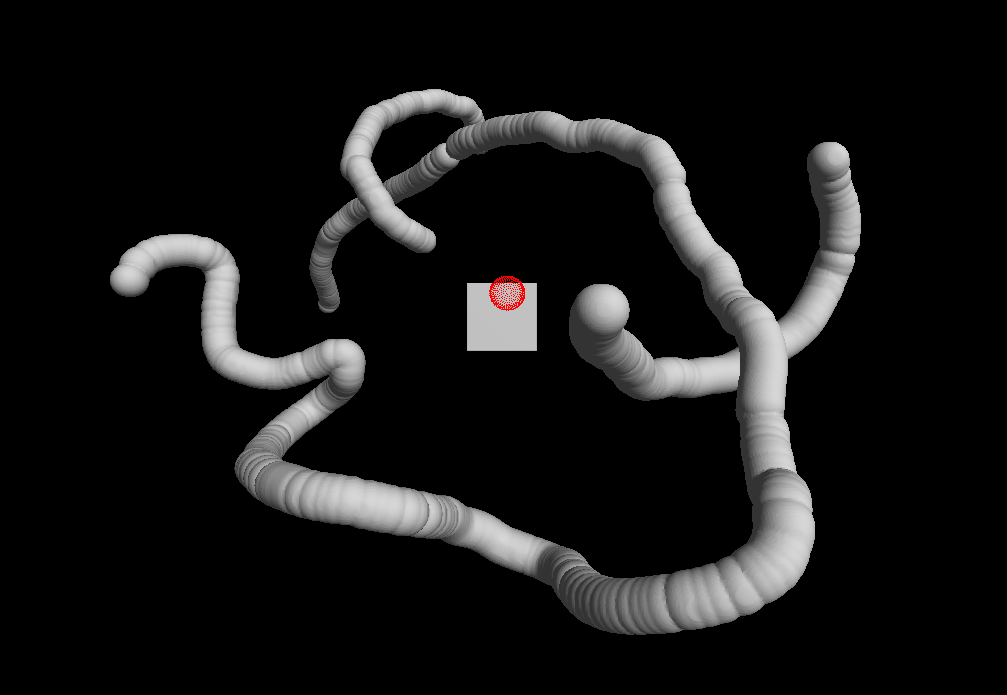

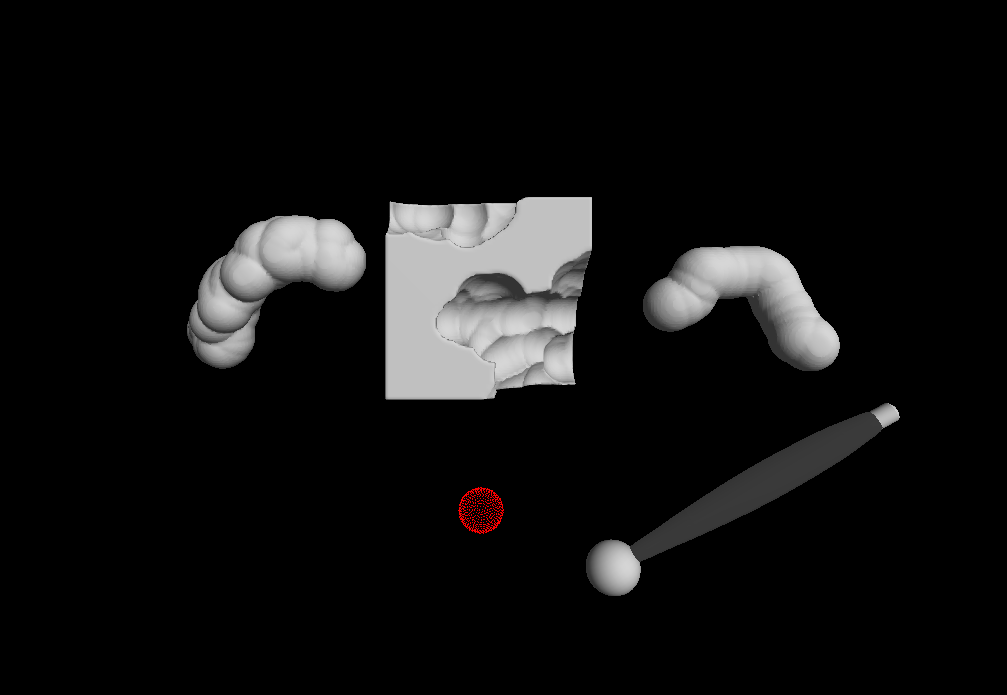

On the software side, several algorithms and libraries were combined to build a prototype of a sculpting game. The basic framework of the game is built on a third-party library called voxelTerrain. This library makes it easy to create a voxel terrain and is highly parallelized, so it takes advantage of a large number of CPU cores. It implements the well-known marching cubes algorithm to extract an iso-surface from the voxel data, Transvoxel for level of detail (LOD), paging, network synchronization, a physics wrapper and a graphics wrapper for Ogre3D. Transvoxel is used to close the gaps (cracks) that appear where two different levels of detail meet.

Since the zSpace offers a stereoscopic display, we wanted to implement (off-axis) stereo rendering and head tracking as well. While researching this area we found that many stereo solutions are not entirely correct and often implement toe-in stereo. In toe-in stereo, each eye's camera uses a fixed, symmetric frustum and is rotated inward towards a common convergence point, whereas correct (off-axis) stereo keeps both camera axes parallel and gives each eye an asymmetric perspective frustum. As a side effect, the toe-in method introduces vertical parallax, which increases discomfort and may cause dizziness. We therefore compute the asymmetric camera frustum mathematically, which gives correct stereo and also supports head tracking: every time the user moves their head, the view frustum is recalculated.

In our game the sighted player designs 3D sculptures. The blind player then has to find out what the sculpture looks like by using non-visual input devices such as the haptic device, and gets direct feedback by touching the virtual object. This requires collision detection between the haptic handle and the sculpture. The haptic handle is represented in the virtual environment by a sphere, and we used a method called regular placement (regular equidistribution) to distribute points evenly over its surface; these surface points are used for collision detection.
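
The regular-placement step can be illustrated with a short Python sketch of the scheme as it is commonly formulated: the sphere is cut into bands of constant polar angle, and each band receives a number of points proportional to its circumference, so that every point covers roughly the same area. The function name `regular_placement` and its parameters are illustrative assumptions, not the prototype's code, and the prototype may choose its point count differently.

```python
import math


def regular_placement(n_target, radius=1.0, center=(0.0, 0.0, 0.0)):
    """Return roughly n_target points spread evenly over a sphere surface.

    Hypothetical helper illustrating regular placement: the sphere is split
    into bands of constant polar angle theta, and each band gets a number of
    points proportional to its circumference.
    """
    cx, cy, cz = center
    area = 4.0 * math.pi / n_target          # area per point on the unit sphere
    d = math.sqrt(area)                      # target spacing between points
    m_theta = max(1, round(math.pi / d))     # number of latitude bands
    d_theta = math.pi / m_theta
    d_phi = area / d_theta                   # target spacing along a band
    points = []
    for m in range(m_theta):
        theta = math.pi * (m + 0.5) / m_theta
        # points in this band scale with the band's circumference 2*pi*sin(theta)
        m_phi = max(1, round(2.0 * math.pi * math.sin(theta) / d_phi))
        for k in range(m_phi):
            phi = 2.0 * math.pi * k / m_phi
            points.append((
                cx + radius * math.sin(theta) * math.cos(phi),
                cy + radius * math.sin(theta) * math.sin(phi),
                cz + radius * math.cos(theta),
            ))
    return points


# Example: sample points on a haptic proxy sphere of radius 0.5 at (1, 2, 3).
probe_points = regular_placement(200, radius=0.5, center=(1.0, 2.0, 3.0))
```

Because the points depend only on the sphere's radius, they could be computed once in local coordinates and offset by the current handle position on every haptic frame.
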
To calculate the penetration depth of the haptic handle, we take the vector from the center of the sphere to each of its surface points and approximate where it intersects the surface of the object stored in the given voxel container. The surface points that lie inside the object are used to calculate an averaged force vector, which also gives the direction of the force.
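
The penetration-depth and force computation can be sketched in Python as well. This is a hypothetical illustration under stated assumptions, not the prototype's implementation: the voxel container is abstracted as a density function `density(p)` that is greater than an iso-level inside the object, the intersection point along each centre-to-surface-point segment is approximated by bisection on that function, and each inside point contributes a spring force proportional to its penetration depth (`stiffness` is an assumed constant).

```python
import math


def lerp(a, b, t):
    """Point at parameter t on the segment from a to b."""
    return tuple(ai + t * (bi - ai) for ai, bi in zip(a, b))


def contact_force(center, surface_points, density, iso=0.0, stiffness=1.0, steps=20):
    """Average spring force from the handle's surface points inside the object.

    Assumptions (hypothetical, for illustration):
      * density(p) > iso means p is inside the object;
      * the handle centre is outside the object, so each segment
        centre -> surface point crosses the surface once. If the centre is
        inside too, the bisection converges towards the centre, which
        yields the maximal depth (the sphere radius).
    """
    total = [0.0, 0.0, 0.0]
    n_inside = 0
    for p in surface_points:
        if density(p) <= iso:
            continue                     # no contact at this point
        lo, hi = 0.0, 1.0                # lo: outside side, hi: inside side
        for _ in range(steps):           # bisection for the surface crossing
            mid = 0.5 * (lo + hi)
            if density(lerp(center, p, mid)) > iso:
                hi = mid
            else:
                lo = mid
        q = lerp(center, p, 0.5 * (lo + hi))   # approximated intersection point
        # q - p points from the penetrating point back out of the object;
        # its length is the local penetration depth.
        for i in range(3):
            total[i] += stiffness * (q[i] - p[i])
        n_inside += 1
    if n_inside == 0:
        return (0.0, 0.0, 0.0)
    return tuple(c / n_inside for c in total)


def unit_ball(p):
    """Example density: positive inside a unit sphere at the origin."""
    return 1.0 - math.sqrt(p[0] ** 2 + p[1] ** 2 + p[2] ** 2)


# Handle of radius 0.2 touching the ball from above; six probe points for brevity.
handle_center, handle_radius = (0.0, 0.0, 1.1), 0.2
axes = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
probe = [tuple(c + handle_radius * d for c, d in zip(handle_center, axis)) for axis in axes]
force = contact_force(handle_center, probe, unit_ball)
```

Averaging rather than summing keeps the force magnitude independent of how many surface points happen to be inside the object; only the mean penetration depth matters.
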

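The asymmetric (off-axis) frustum from the stereo discussion above can be sketched in Python. The sketch assumes, hypothetically, that the tracked head position is already expressed in a display-aligned frame (origin at the screen centre, x right, y up, z towards the viewer, in the same units as the screen size) and that the eyes are separated along the screen's x axis; a tilted display such as the zSpace would first need its tracking data transformed into that frame. The matrix follows the classic OpenGL `glFrustum` layout; the sketch does not use the Ogre3D or zSpace SDK APIs, and all names are illustrative.

```python
from dataclasses import dataclass


@dataclass
class Frustum:
    left: float
    right: float
    bottom: float
    top: float
    near: float
    far: float


def off_axis_frustum(eye, screen_w, screen_h, near, far):
    """Asymmetric frustum for one eye looking at a physical screen.

    Assumed (hypothetical) frame: origin at the screen centre, x right,
    y up, z towards the viewer; eye = (x, y, z) with z > 0, in the same
    units as screen_w and screen_h.
    """
    ex, ey, ez = eye
    if ez <= 0.0:
        raise ValueError("the eye must be in front of the screen (z > 0)")
    scale = near / ez   # similar triangles: screen edges projected onto the near plane
    return Frustum(
        left=(-screen_w / 2 - ex) * scale,
        right=(screen_w / 2 - ex) * scale,
        bottom=(-screen_h / 2 - ey) * scale,
        top=(screen_h / 2 - ey) * scale,
        near=near,
        far=far,
    )


def projection_matrix(f):
    """Row-major projection matrix in the OpenGL glFrustum convention."""
    l, r, b, t, n, fa = f.left, f.right, f.bottom, f.top, f.near, f.far
    return [
        [2 * n / (r - l), 0.0, (r + l) / (r - l), 0.0],
        [0.0, 2 * n / (t - b), (t + b) / (t - b), 0.0],
        [0.0, 0.0, -(fa + n) / (fa - n), -2 * fa * n / (fa - n)],
        [0.0, 0.0, -1.0, 0.0],
    ]


def stereo_frusta(head, ipd, screen_w, screen_h, near, far):
    """Left/right frusta for a tracked head; eyes offset by +-ipd/2 along x.

    Both eye cameras keep the same orientation (looking along -z, no toe-in);
    each view matrix is just a translation by minus the eye position.
    """
    hx, hy, hz = head
    left_eye = (hx - ipd / 2, hy, hz)
    right_eye = (hx + ipd / 2, hy, hz)
    return (off_axis_frustum(left_eye, screen_w, screen_h, near, far),
            off_axis_frustum(right_eye, screen_w, screen_h, near, far))


# Example values only (not zSpace specifications): head 0.6 units in front of
# a 0.52 x 0.29 screen, slightly right of centre, eye separation 0.064.
left, right = stereo_frusta((0.05, 0.0, 0.6), 0.064, 0.52, 0.29, 0.1, 10.0)
```

With head tracking, only `stereo_frusta` has to be re-run with the new head position each frame; the cameras are moved, never rotated towards each other.

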
To make the game more flexible, we also implemented a Voxelizer, so that pre-modeled objects, e.g. exported from Blender, can be imported into the game. Such objects could be a good starting point for a modified "Find the error" game in which there are no errors, only differences. The sighted player adds something to the model or removes something from it, and the blind player has to find all differences by using the haptic device. At the beginning of the game, before anything has been modified, the blind player can explore the original object to make the differences easier to find. In addition, the blind player can switch between the original and the modified version of the object. In this game, two teams of two players each would compete against each other. The goal is to make as few modifications as possible while still allowing the teammate to recognize the differences. Note that this game mode was not implemented within the project.

Returning to the technical side, the Voxelizer uses a simple algorithm to determine which voxels lie inside the object or on its surface. First, all triangles are inserted into an R-tree, a tree data structure for spatial queries. The main idea of the algorithm is to cast a ray and collect all voxels it passes through. For each of these voxels, the even-odd rule then decides whether it lies completely inside the object: it does if the number of surface intersections between the ray origin (outside the object) and the voxel is odd. For voxels located outside the object, the density is interpolated based on the distance to the nearest surface point, which is queried efficiently from the R-tree.
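
The ray-cast and even-odd steps can be illustrated with a Python sketch that voxelizes one row of voxels along the x axis and classifies each voxel by its centre. It is a hypothetical illustration, not the Voxelizer's code: it tests the ray against every triangle with the Moller-Trumbore ray/triangle intersection instead of querying an R-tree, and it assumes the ray starts outside the mesh and does not pass exactly through an edge or vertex (which would count one crossing twice).

```python
from bisect import bisect_left


def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def ray_triangle(origin, direction, v0, v1, v2, eps=1e-12):
    """Moller-Trumbore test: ray parameter t of the hit, or None."""
    e1, e2 = sub(v1, v0), sub(v2, v0)
    pvec = cross(direction, e2)
    det = dot(e1, pvec)
    if abs(det) < eps:                  # ray parallel to the triangle plane
        return None
    inv_det = 1.0 / det
    tvec = sub(origin, v0)
    u = dot(tvec, pvec) * inv_det
    if u < 0.0 or u > 1.0:
        return None
    qvec = cross(tvec, e1)
    v = dot(direction, qvec) * inv_det
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(e2, qvec) * inv_det
    return t if t >= 0.0 else None


def voxelize_row(triangles, y, z, x0, dx, nx):
    """Inside flags for nx voxels with centres at x0 + (i + 0.5) * dx."""
    origin, direction = (x0, y, z), (1.0, 0.0, 0.0)
    hits = []
    for tri in triangles:               # brute force instead of an R-tree query
        t = ray_triangle(origin, direction, *tri)
        if t is not None:
            hits.append(t)
    hits.sort()
    inside = []
    for i in range(nx):
        t_center = (i + 0.5) * dx       # direction is unit length, so t == distance
        crossings = bisect_left(hits, t_center)   # surface hits before the centre
        inside.append(crossings % 2 == 1)         # even-odd rule
    return inside
```

A full voxelizer would run this for every (y, z) row of the grid; with an R-tree, only triangles whose bounding boxes overlap the row would need to be tested.
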

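The density interpolation for voxels outside the object can be sketched in the same way. The sketch is hypothetical: it finds the nearest surface point by brute force over all triangles (where the Voxelizer queries its R-tree), uses the standard Voronoi-region closest-point-on-triangle routine from computational geometry, and assumes a density convention in which 1.0 is solid, 0.5 is the iso-level and the density falls off linearly to 0 over a distance `falloff` (for example one voxel). The actual Voxelizer's convention may differ.

```python
import math


def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def along(a, d, s):
    """Point a + s * d."""
    return (a[0] + s * d[0], a[1] + s * d[1], a[2] + s * d[2])


def closest_point_on_triangle(p, a, b, c):
    """Closest point to p on triangle abc, found via its Voronoi regions."""
    ab, ac, ap = sub(b, a), sub(c, a), sub(p, a)
    d1, d2 = dot(ab, ap), dot(ac, ap)
    if d1 <= 0.0 and d2 <= 0.0:
        return a                                   # vertex region A
    bp = sub(p, b)
    d3, d4 = dot(ab, bp), dot(ac, bp)
    if d3 >= 0.0 and d4 <= d3:
        return b                                   # vertex region B
    vc = d1 * d4 - d3 * d2
    if vc <= 0.0 and d1 >= 0.0 and d3 <= 0.0:
        return along(a, ab, d1 / (d1 - d3))        # edge region AB
    cp = sub(p, c)
    d5, d6 = dot(ab, cp), dot(ac, cp)
    if d6 >= 0.0 and d5 <= d6:
        return c                                   # vertex region C
    vb = d5 * d2 - d1 * d6
    if vb <= 0.0 and d2 >= 0.0 and d6 <= 0.0:
        return along(a, ac, d2 / (d2 - d6))        # edge region AC
    va = d3 * d6 - d5 * d4
    if va <= 0.0 and (d4 - d3) >= 0.0 and (d5 - d6) >= 0.0:
        w = (d4 - d3) / ((d4 - d3) + (d5 - d6))
        return along(b, sub(c, b), w)              # edge region BC
    denom = 1.0 / (va + vb + vc)                   # face region
    return along(along(a, ab, vb * denom), ac, vc * denom)


def outside_density(p, triangles, iso=0.5, falloff=1.0):
    """Density of a voxel centre p outside the object (hypothetical convention)."""
    dist = min(math.dist(p, closest_point_on_triangle(p, *tri)) for tri in triangles)
    return iso * max(0.0, 1.0 - dist / falloff)
```

In this convention, voxels classified as inside by the even-odd rule would simply keep the solid value 1.0, so the iso-surface at 0.5 lies close to the original mesh.

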
In summary, our prototype implements the following features:

- Voxel Terrain

- Stereo

- Head Tracking

- Haptic

- Voxelizer

Additional Dependencies:

- zSpace SDK v3.0.0.318

- Force Dimension SDK v3.5

- Chai3D v2.0.0

- Boost v1.57.0

- Ogre3D v1.10

- Assimp v3.1.1

- VoxelTerrain library v1.2

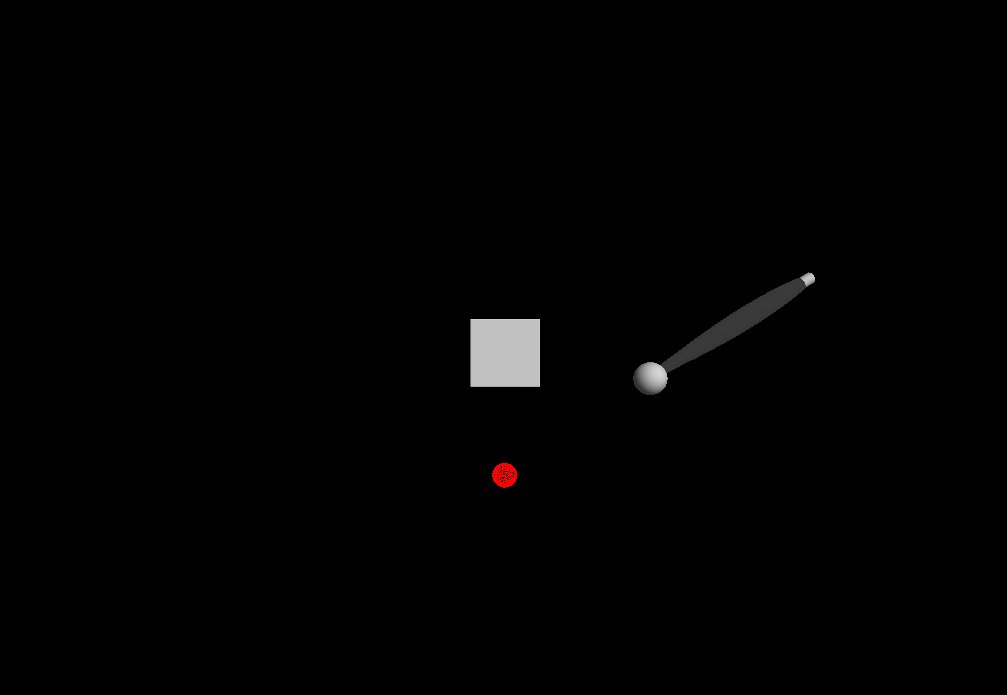

Screenshots: