Theses

On this page, you can find a number of topics for which we are looking for a student who is interested in working on them as part of their thesis (bachelor or master). The list is sorted in reverse chronological order; that means, the further a topic is towards the bottom, the more likely it is already taken (or no longer relevant to us).

However, this list is by no means exhaustive! In fact, we always have many more topics available. So, please also check out our research projects; in all projects, there are lots of opportunities for doing a thesis. In addition, there are quite a few "free floating" topics, which are not listed here nor are they connected with research projects; those are ideas we would like to try out or get familiar with.

If you are interested in one of the topics, please send me (or the respective contact person) an email with your transcript of records and 1-2 sentences of motivation.

If you would like to talk to us about thesis topics, just make an appointment with one of the project members or researchers of my group. You can also come to my office hours (Mondays 6pm - 8pm, no appointment needed). Please make sure to send me or the researchers your transcript of records.

Ethics

Unlike 20 years ago, a lot of computer science research can and will have a huge impact on our society and the way we live. That impact can be good, but today, our research could also have a considerable negative impact.

I encourage you to consider the potential impact, both good and bad, of your work. If there is a negative impact, I also encourage you to try to think about ways to mitigate that.

As a matter of course, I expect you to follow ACM's Code of Ethics and Professional Conduct.

I think we all should go a step further and change the scientific peer-reviewing system, not only for paper submissions but also for grant proposal submissions, before we start a thesis, a new product development, etc. Here is an interview with Brent Hecht, who has a point with his radical proposal, I think.

This article (in German) explains quite well, I think, how agile software development can include ethical considerations ("Ethik in der agilen Software-Entwicklung", August 2021, Informatik Spektrum der Gesellschaft für Informatik).

Doing Your Thesis Abroad

If you are interested in doing your thesis abroad, please talk to us; we might be able to help with establishing a contact. You also might want to look for financial aid, such as this DAAD stipend.

Doing Your Thesis with a Company

If you are interested in doing your thesis at a company, we might be able to help establish a contact, for instance, with Kizmo, Kuka (robot developer), Icido (VR software), Volkswagen (VR), Dassault Systèmes 3DEXCITE (rendering and visualization), ARRI (camera systems), Maxon (maker of Cinema4D), etc.

Doing Your Thesis in the Context of a Research Project

We always have a number of research projects going on, and in the context of those, there are always a number of topics for potential master's or bachelor's theses. If you are interested in such an "embedded" thesis topic, please pick one of those research projects, then talk to the contact given there or talk to me.

Formalities

If you feel comfortable with writing in English (or if you want to become more fluent in English writing), I encourage you to write your thesis in English.

I recommend writing your thesis using LaTeX!

There are no typographic requirements regarding your thesis: just make it comfortable to read; I suggest you put some effort into making it typographically pleasing. A good starting point is the Classic Thesis Template by André Miede (archived version 4.6). But feel free to use some other style.

Regarding the structure of your thesis, just look at some of the examples in our collection of finished theses.

Referencing / citation: with the natbib LaTeX package, this should be relatively straightforward; just pick one of the predefined citation/referencing styles. If you are interested in variants, here is the Ultimate Citation Cheat Sheet that contains examples of the three most prevalent styles. I suggest following the MLA style.

(Source)

Recommendations While Doing the Actual Work

- When you start working on your thesis, keep a kind of diary or log book in which you record your ideas and keep track of what you have done. Examples:

- Max's notes on his tablet (thanks Max!)

- My notes as a stack of papers in a folder when I did my master's thesis (called "Diplomarbeit" at the time)

- Lab notebooks by Hahn and Bell

- Lab notebooks by other famous people: Leonardo da Vinci, Graham Bell, Thomas Edison 1, 2, 3. (Source)

- Good Laboratory Notebook Practices (Source)

- Have your laptop/computer make a backup every day automatically! (I only narrowly escaped a total disaster: one week after I had left the research institution where I did my thesis, the hard disk of the big machine (SGI Onyx) that contained all my data crashed completely, and that was one week before my deadline!)

- Research the scientific literature! When you put a lot of effort and work into something, it is very annoying to find out only later that the problem was already solved. Good tools online for doing literature research are Google Scholar, Consensus, and ScienceOS.

- The Missing Semester of Your CS Education: lessons on how to master the command line, use a powerful text editor, use fancy features of version control systems, and much more! Video recordings of the lectures are available on YouTube.

Recommendations for Writing Up

- Write in active voice, not passive voice, whenever you describe what you have done or when you have made choices. This is also recommended in the APA style, and you can read more about when to use active voice and when to use passive voice. Also, see these suggestions and explanations by Oxford University.

- Whenever you describe methods, algorithms, or software that others have developed, say so, i.e., "give credit where credit is due". (There is nothing wrong with using ideas, software, etc., from others, so long as you give credit.)

- Motivate your decisions and choices. You can do so by reasoning, by citing previous work, by running experiments, etc.

- Evaluate your algorithms and methods. If you have developed an algorithm, the evaluation consists of experiments about its performance (quantitative and/or qualitative); ideally, you can also provide a theoretical analysis using big-O notation. If you have developed a user interaction method, the evaluation consists of user studies.

- When you describe the prior work in Section 2 of your thesis (a.k.a. state-of-the-art), also try to assess their good features and their limitations. (Usually, one sentence is enough.)

- In your bibliography (list of references), there should be no more than 10% of references to web pages! (I.e., blog posts, tutorials, manuals, etc.) Also, instead of citing papers on arXiv.org, try to find the official publication (in a journal or conference).

- In your chapter "Conclusions", try to summarize what you have done, describe for which cases your new method performs well and by what factor it performs better than the state-of-the-art; also, describe the limitations of your new method.

- When you describe your algorithms, please use pseudo-code (and equations, if there are any). Never use Blueprints or flow graphs. Real code (and Blueprints) goes into an appendix. If you want to look at some good examples, you can look at the following theses: Hermann Meißenhelter's, Roland Fischer's, my own. (This list is, of course, by no means exhaustive!)

- Look at some of the examples on our Finished Theses page.

- Before you turn in your thesis, ask your advisor to have a quick look at it.

- When you turn in your thesis, please send me a PDF via mail.
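For the quantitative performance experiments mentioned in the list above, one simple approach is to time the algorithm on inputs of growing size and estimate the growth exponent from a log-log fit, which you can then compare against your big-O analysis. The following is only a minimal sketch: the function names (`measure`, `growth_exponent`) and the quadratic toy algorithm `count_close_pairs` are made up for illustration and are not part of any required tooling.

```python
import math
import time


def count_close_pairs(values, eps=0.5):
    """Toy O(n^2) algorithm under test: count pairs closer than eps."""
    n = len(values)
    return sum(1 for i in range(n) for j in range(i + 1, n)
               if abs(values[i] - values[j]) < eps)


def measure(algorithm, make_input, sizes, repeats=3):
    """Return the best-of-`repeats` wall-clock time (seconds) per input size."""
    timings = []
    for n in sizes:
        data = make_input(n)
        best = math.inf
        for _ in range(repeats):
            start = time.perf_counter()
            algorithm(data)
            best = min(best, time.perf_counter() - start)
        timings.append(best)
    return timings


def growth_exponent(sizes, timings):
    """Least-squares slope of log(time) over log(n); about k for O(n^k)."""
    xs = [math.log(n) for n in sizes]
    ys = [math.log(t) for t in timings]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    num = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    den = sum((x - mean_x) ** 2 for x in xs)
    return num / den
```

A typical use would be `growth_exponent(sizes, measure(count_close_pairs, make_input, sizes))` with a handful of sizes that double each time; measured exponents are noisy, so report them together with the raw timings.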

Guidelines for Type(s) of Chart to use in your Thesis

At some point in your work, you probably will generate some charts to present your results. Some charts are better at showing specific facets than other charts. In the following table, you can find an overview of which chart is useful in communicating which properties of the data [B. Saket, A. Endert, and Ç. Demiralp: "Task-Based Effectiveness of Basic Visualizations", IEEE Transactions on Visualization and Computer Graphics, vol. 25, no. 7, pp. 2505–2512, July 2019].

How to use the table: first, pick the purpose of your visualization of your data; for example, let's assume you want to find correlations. So, you go to the "Correlations" row. Next, pick your top criterion; in our example, let's assume you strive to maximize user preference. So, you go to the cell under the "User preference" column. Finally, pick one of the chart types on the left-hand side in that cell (they are ranked by score regarding the respective criterion you picked). In our example, you should probably use the line chart; if that does not fit your purposes (for whatever other reasons), then you probably want to pick the bar chart instead. The arrows symbolize "performs better than" relationships between chart types (inside that cell).

Criteria we Use When Grading Your Thesis

When assessing a master's or bachelor's thesis, we use the following criteria:

- Knowledge and skills (what does the student bring to the table?)

- Systematic and scientific approach (is the student able to work scientifically?)

- Initiative, commitment, perseverance (how does the student go about the work? how well do they tolerate frustration? do they have ideas of their own and pursue them with energy? or do they merely "work to rule"?)

- Quality of the results (what did the work actually produce?)

- Presentation of the results (can the student report on their work precisely and comprehensibly? this concerns both the written thesis and the talk)

Recommendations for Your Presentation During Your Defense (Colloquium)

- Length: for master's theses, your talk should not exceed 20 minutes; for bachelor's theses, you should err towards 15 minutes.

- You can omit the slide "Structure of the talk" (contrary to what you probably learnt). Reason: the structure is always the same, i.e., motivation, problem/task, related/prior work, concept/architecture/algorithms, implementation, evaluation, conclusions, future work.

- In your introduction, try to motivate your work; to do so, try to answer three questions: 1) what is the "big picture" where your work fits in? 2) what is the exact problem you are trying to solve? 3) in which way do existing solutions / scientific works fall short?

- Focus on the "meat", i.e., your algorithms, your user study, or your software architecture; basically, any- and everything that is hard computer science.

- Towards the end, show plots, show pictures, show videos.

- Draw conclusions: what is now possible with your new method? Also, point out limitations that still exist.

- Also good practice: show a video at the end.

- Bad practice: too much text, no diagrams.

- Do not use online slide presentation tools (Google Docs et al.).

- Practice your talk! You can practice in front of your friends or your partner, or record yourself. (I know it might hurt, but it is helpful.)

- Don't forget to invite your advisor/supervisor(s) to your defense!

Links

For printing your thesis, you might want to consider Druck-Deine-Diplomarbeit. We have heard from other students that they have had good experiences with them (and I have seen nice examples of their print products).

Also, there is a friendly copy shop, Haus der Dokumente, at Wiener Str. 7, right on the campus.

The List

Master Thesis: Geometric Analysis and Invariant Identification in Neural Network Feature Space Manifolds

Subject

How does a neural network truly "see" data? While we often use tools like t-SNE or UMAP to visualize feature spaces, these methods often lose the underlying geometric structure. This thesis explores "Onion Peeling", an iterative geometric technique, to deconstruct the feature manifolds of trained deep learning models.

This thesis will develop methods to systematically analyze feature space geometry by iteratively computing convex hulls, removing boundary points (i.e. "peeling the hull points away"), and examining the resulting layered structure. The goal is to identify geometric invariants that persist across different feature spaces, whether from different object classes, network architectures, or training objectives.
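To make the peeling idea concrete, here is a minimal 2D sketch. It uses Andrew's monotone chain algorithm as a stand-in for an N-dimensional hull routine such as Quickhull, and the function names (`convex_hull`, `onion_peel`) are chosen for illustration only; real feature spaces are high-dimensional and will need a different hull implementation.

```python
def cross(o, a, b):
    """z-component of (a - o) x (b - o); positive for a left turn."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def convex_hull(points):
    """Hull vertices of 2D points (tuples), in counter-clockwise order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    # Drop the last point of each chain; it repeats the other chain's start.
    return lower[:-1] + upper[:-1]


def onion_peel(points):
    """Repeatedly remove the hull vertices; return the list of layers."""
    remaining = set(points)
    layers = []
    while remaining:
        hull = convex_hull(list(remaining))
        layers.append(hull)
        remaining -= set(hull)
    return layers
```

For two nested squares plus a centre point, `onion_peel` returns three layers: the outer square, the inner square, and the centre. Points that lie on a hull edge without being a vertex end up in a later layer in this sketch; whether that is desirable is one of the design decisions to make.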

Research Questions

- How do the "layers" of feature space manifolds differ structurally?

- Can "Onion Peeling" reveal consistent geometric signatures across feature spaces?

- What do these invariants tell us about learned representations?

Your Tasks/Challenges:

- Research and implement robust algorithms for N-dimensional (convex) hull identification, e.g. via Quickhull or the CGAL library, or approximate methods for high dimensions.

- Develop a pipeline to "peel" feature spaces and visualize the resulting density geometry

- Quantify the similarity between "peeled" manifolds to identify universal features and/or invariants

Requirements:

- Strong background in machine learning and deep learning.

- Solid background in (or the willingness to learn about) dimensionality reduction methods (e.g. PCA, t-SNE, UMAP).

- Solid background in Python / C++.

- Solid background in (or the willingness to learn about) Computer Graphics

- Knowledge of Unreal Engine 5 or a similar engine is a plus.

Contact:

Thomas Hudcovic, hudo at uni-bremen.de

Prof. Dr. Gabriel Zachmann, zach at informatik.uni-bremen.de

Bachelor/Master Thesis: Live 3D Scene Reconstruction Using Drone-Based Camera Systems and Gaussian Splatting

Subject

Recent advances in 3D Gaussian Splatting (3DGS) have reshaped the field of computer graphics by enabling high-fidelity static scene reconstruction from image collections with real-time rendering performance. For dynamic scenes, methods such as GPS-Gaussian+ (building upon 3DGS) demonstrate that real-time, RGB-only 3D reconstruction is feasible. However, these approaches rely on densely distributed, stationary camera arrays, which limits scalability, deployment flexibility, and applicability in real-world environments.

This thesis proposes a robotics-driven alternative: a mobile system composed of two lightweight drones, each equipped with an onboard camera, to provide complementary viewpoints of a target scene. These drones should move with a 3D View that displays the content. Their live video streams will be integrated into a Gaussian-Splatting-based pipeline to achieve real-time 3D reconstruction that adapts to drone motion and supports interactive virtual viewpoint control. The implementation may build directly on the open-source GPS-Gaussian+ codebase: https://yaourtb.github.io/GPS-Gaussian+

Depending on the focus you choose for your thesis, you may use real-world drones, but you may also choose to build a Proof-of-Concept in a virtual environment such as the Unreal Engine 5.

Requirements:

- Solid background in computer graphics.

- Solid background in communication via networks.

- Solid background in Python / C++.

- When working with real drones: Good knowledge of robotics, including camera calibration and pose estimation.

- When implementing this in a virtual environment: Knowledge of Unreal Engine 5.

Contact:

This thesis will be supervised by Thomas Hudcovic and Andre Muehlenbrock. If you're interested, please contact us!

Thomas Hudcovic, hudo at uni-bremen.de

Andre Muehlenbrock, muehlenb at uni-bremen.de

Prof. Dr. Gabriel Zachmann, zach at informatik.uni-bremen.de

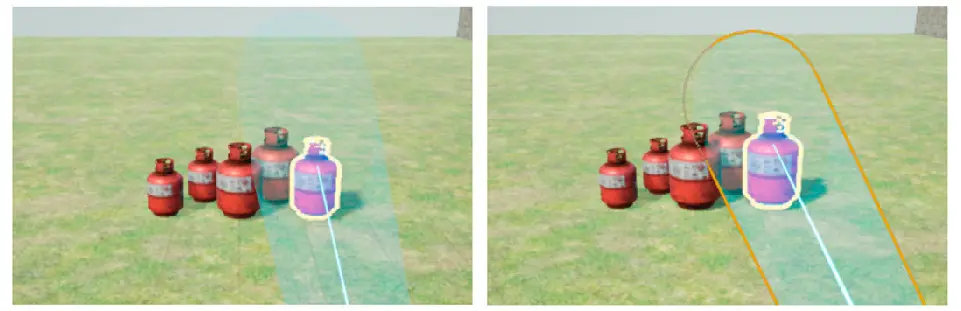

Master's Thesis: 3D Selection Technique in VR with Machine Learning Prediction

Subject

Recently, we have published a new 3D object selection technique called LenSelect. It works by increasing target sizes dynamically.

We think that this technique could greatly benefit from a reliable way to predict the potential target (or a set of targets) that the user will likely try to hit, especially in cluttered environments or when the target is very distant.

In order to do so, leveraging machine learning seems to be a promising approach, see for instance: Predicting ray pointer landing poses in VR using multimodal LSTM-based neural networks, in IEEE Conf. on Virtual Reality and 3D User Interfaces 2025.

The idea of this thesis is to use a target prediction, dynamically increase the target size like in LenSelect, and do this during the whole target acquisition process of the user.

Your Tasks/Challenges:

- Get the target prediction from the paper to work, or implement your own, using eye gaze tracking, hand tracking, head tracking data. (I know one of the authors, maybe he will provide the code to me.)

- Get our old code of LenSelect to work (Unreal project).

- Make the target prediction work fast enough to be used at runtime.

- Evaluate the new technique w.r.t. user error rate, task completion time, and user satisfaction.

- Make it so that targets don't expand and shrink back and forth (temporal filtering?).
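One conceivable form of the temporal filtering mentioned in the last task is to smooth the per-frame prediction with an exponential moving average and to switch between the normal and the expanded size with hysteresis (two different thresholds). The sketch below is only an illustration under these assumptions; the class name, the thresholds, and the scale factor are invented here and are not taken from LenSelect or from the cited paper.

```python
class TargetScaleFilter:
    """Smooths a per-frame target probability and applies hysteresis."""

    def __init__(self, alpha=0.2, grow_at=0.7, shrink_at=0.4,
                 expanded_scale=2.0):
        self.alpha = alpha                  # smoothing factor in (0, 1]
        self.grow_at = grow_at              # expand above this value
        self.shrink_at = shrink_at          # shrink below this value
        self.expanded_scale = expanded_scale
        self.smoothed = 0.0
        self.expanded = False

    def update(self, probability):
        """Feed one prediction in [0, 1]; return the scale to render."""
        # Exponential moving average damps frame-to-frame jitter.
        self.smoothed += self.alpha * (probability - self.smoothed)
        # Separate thresholds prevent rapid toggling near one cut-off.
        if not self.expanded and self.smoothed >= self.grow_at:
            self.expanded = True
        elif self.expanded and self.smoothed <= self.shrink_at:
            self.expanded = False
        return self.expanded_scale if self.expanded else 1.0
```

You would keep one filter per candidate target and call `update` once per frame with the predictor's output. The price of the smoothing is added latency, which would have to be weighed against task completion time in the evaluation.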

Requirements:

- Some experience in VR.

- Experience with machine learning, e.g., CNNs and Transformers.

- Ideally, some experience with Unreal.

Contact:

Prof. Dr. Gabriel Zachmann, zach at informatik.uni-bremen.de

Master/Bachelor Thesis: Real-Time Reconstruction and Visualization of Environments as 3D Gaussian Splats on the Apple Vision Pro

Subject

3D Gaussian Splats (3DGS) allow for fast visualization of point clouds with very high quality. The input consists of images or photos of a real environment, from which the splats are then reconstructed to represent the geometry and colors. There is a lot of source code available to do all this, and there is even code available to render such 3DGS directly on the Apple Vision Pro.

The idea of this thesis is to take all this code and implement an app on the Apple Vision Pro that would allow the user to walk around in their real environments while the app would reconstruct that environment in real time as 3DGS and render those to the user.

Your Tasks/Challenges:

- Make this code work on our Apple Vision Pro.

- Test it with some given or pre-processed 3DGS sets.

- Take the images of the Apple Vision Pro cameras, and feed them into existing code for reconstructing 3DGS.

- Merge the output of that into the existing set of 3DGS and render them on the Vision Pro.

- Optional: develop and add an interaction technique to interact with the 3DGS.

- Optional: integrate point cloud segmentation algorithms.

- Optional: optimize the reconstruction (e.g., by integrating some of the latest 3DGS reconstruction methods).

Requirements:

- Experience in computer graphics, e.g., by attending the "Einfuehrung in Computergraphik" course.

- Experience with programming.

- Ideally, some experience with programming on Mac.

Contact:

Prof. Dr. Gabriel Zachmann, zach at informatik.uni-bremen.de

Master Thesis: Fast Heuristic Force-Feedback for Arbitrary Shapes Using Machine Learning

Subject

For many applications, force feedback does not necessarily have to be physically correct; usually, plausible forces (i.e., approximate magnitude, approximate direction) serve the purpose just as well.

So, the idea is to train a neural network to generate forces for a pair of objects whenever they overlap. Probably, this would mean that for each pair of objects a specific network needs to be trained. But maybe, it is even possible to train networks that generalize so well that they can be trained for a larger set of objects.

Your Tasks/Challenges:

- Research the use of neural networks for physics engines.

- Get familiar with graph neural networks.

- Think about the question in which representation the graphical objects should be presented to the network (mesh, point cloud, distance field, Gaussian splats?).

- Develop a training set.

- Run experiments to evaluate the quality of the forces and the time performance.

Requirements:

- Experience with neural networks.

- Ideally, you have already attended Advanced Computer Graphics and/or Virtual Reality and Physically-Based Simulation.

- Good ability to work self-driven.

Contact:

Prof. Dr. Gabriel Zachmann, zach at informatik.uni-bremen.de

Bachelor Thesis: Analysis of Differentiable Physics Simulators

Subject

The goal of this thesis is to extend our study of two differentiable physics simulators for a reasoning problem. An example problem scenario is answering the question: "Could a pot on the floor have plausibly fallen from a nearby stove?" We formulate this as a trajectory optimization problem, which is solved using a gradient-based solver that receives its gradients from a differentiable physics engine. To be more precise, we utilize an in-house, CPU-based rigid body simulator (using sphere packings and forward-mode automatic differentiation) and compare it with NVIDIA's Warp, a GPU-based simulator that relies on signed distance fields and reverse-mode differentiation. Problem scenarios can be formulated in an XML format and supplied to both simulators for evaluation.
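To illustrate the general idea of gradient-based trajectory optimization with forward-mode automatic differentiation, here is a toy sketch. It is neither the in-house simulator nor Warp: the "simulator" is a single point mass that leaves a stove edge horizontally, the `Dual` class is a hand-written dual number, and all names and constants (stove height, step size, learning rate) are assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass
class Dual:
    """Number val + dot*eps with eps^2 = 0; `dot` carries the derivative."""
    val: float
    dot: float = 0.0

    def __add__(self, other):
        other = lift(other)
        return Dual(self.val + other.val, self.dot + other.dot)

    __radd__ = __add__

    def __sub__(self, other):
        other = lift(other)
        return Dual(self.val - other.val, self.dot - other.dot)

    def __mul__(self, other):
        other = lift(other)
        return Dual(self.val * other.val,
                    self.val * other.dot + self.dot * other.val)

    __rmul__ = __mul__


def lift(x):
    """Wrap plain numbers as constants (derivative zero)."""
    return x if isinstance(x, Dual) else Dual(float(x))


def landing_x(vx, height=0.9, dt=1e-3, g=9.81):
    """Toy simulator: horizontal position where the falling point mass
    reaches the floor (semi-implicit Euler integration)."""
    x, y, vy = lift(0.0), height, 0.0
    while y > 0.0:
        x = x + vx * dt
        vy -= g * dt
        y += vy * dt
    return x


def fit_initial_velocity(target_x, vx=0.0, lr=1.0, iterations=100):
    """Gradient descent on the squared landing error w.r.t. vx."""
    for _ in range(iterations):
        x = landing_x(Dual(vx, 1.0))        # seed d(vx)/d(vx) = 1
        loss = (x - target_x) * (x - target_x)
        vx -= lr * loss.dot                 # loss.dot is d(loss)/d(vx)
    return vx
```

Calling `fit_initial_velocity(0.5)` searches for the horizontal velocity with which the mass lands 0.5 m from the stove; if such a velocity is plausible, the answer to "could it have fallen from there?" is yes in this toy setting. Forward mode needs one simulation pass per optimized parameter, which is one reason why reverse mode is attractive when there are many parameters.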

Your Tasks/Challenges:

- Familiarize yourself with the existing in-house software and the NVIDIA Warp simulator.

- Set up and evaluate multiple complex scenarios, comparing different simulators and solvers.

- Provide qualitative results (e.g., videos and side-by-side images) to visually compare the optimized trajectories.

- Find a way to make the current loss comparable between the simulators.

Requirements:

- Good C++ skills

- Good ability to work self-driven.

- (Optional) Some experience with Unreal Engine for visualization.

Contact:

Hermann Meißenhelter, meissenhelter at uni-bremen.de

Prof. Dr. Gabriel Zachmann, zach at informatik.uni-bremen.de

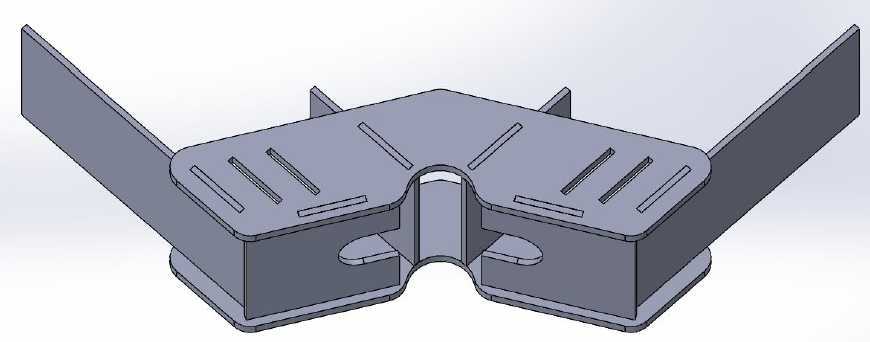

Master/Bachelor Thesis: Dense Surface-Oriented Packing for 3D Containers and Its Analysis

Subject

Packing problems occur in many different forms and real-world applications. In this project, we consider a variable set of objects and a single arbitrary container in 3D space. We provide AutoPacking, a software that packs arbitrary 3D objects into a 3D container. The first goal of this thesis is to develop a fast physics-based algorithm that packs objects densely at the surface, while also maintaining an inhomogeneous distribution. The idea is to shuffle objects locally and then utilize a gravitational force for compact placement near the surface. The second goal is to develop a new metric, i.e., to estimate the density near the container's outer surface. While working on this project, you should be prepared for potential challenges, such as high memory consumption, rendering problems, or long runtimes.

Your Tasks/Challenges:

- Get comfortable with a large and non-trivial codebase/software, 'AutoPacking', and our in-house collision detection library.

- Develop an efficient physics-based algorithm to pack objects densely near the surface. Actually, AutoPacking already utilizes a rigid body simulator, but without gravitational forces.

- Develop a metric to quantitatively evaluate the packing quality near the surface.

Requirements:

- Good C++ skills

- Good ability to work self-driven.

Contact:

Hermann Meißenhelter, meissenhelter at uni-bremen.de

Prof. Dr. Gabriel Zachmann, zach at informatik.uni-bremen.de

Bachelor/Master Thesis: Comparative Study of Dynamic Point Cloud Rendering in VR

Research Question:

Which Rendering Technique for Live Human Point Clouds Feels Most Realistic and Immersive in Virtual Reality?

Description:

In this thesis, you will explore which rendering technique for live point clouds of people is perceived as the highest quality and most immersive by VR users. The study will be conducted in a virtual reality environment using an existing framework that already includes several real-time rendering methods. The visual output will be displayed in VR using Unreal Engine.

Background:

People can be captured in real time using depth sensors such as the Microsoft Azure Kinect. These sensors generate a live point cloud of the person, but the data is often noisy. There are various techniques to render these point clouds—some of which are already implemented in the framework, including a custom-developed method.

Possible Extensions (especially for a Master’s thesis):

- Develop your own rendering technique, such as a custom version of Gaussian Splatting or real-time Neural Radiance Fields (NeRFs). Experience with OpenGL and neural networks would be helpful.

- Add screen-space shadows and run performance comparisons between all rendering techniques.

What You’ll Work With:

- A live point cloud capture system (e.g. Azure Kinect)

- An existing rendering framework

- Unreal Engine for VR visualization

Requirements:

- Experience with Unreal Engine

- Designing and conducting user studies (especially in VR)

- Optional but beneficial: Strong C++ skills and basic OpenGL knowledge

Framework you would work with: https://github.com/muehlenb/BlendPCR

Contact:

Andre Mühlenbrock, muehlenb at uni-bremen.de

Prof. Dr. Gabriel Zachmann, zach at informatik.uni-bremen.de

Master Thesis: Collision Detection using Neural Networks for Sequential Data

Subject

Neural networks encode a lot of valuable information in their latent space. However, utilizing that in geometric computing is a challenge. One example is collision detection, which is the problem of deciding whether or not two meshes intersect with each other.

Collisions of objects in a space usually happen while objects are moving around, i.e. have a path. Paths of objects can be interpreted as (continuous) sequences of transformations. The task of this thesis is to train a neural network suitable for sequential data (e.g., Transformers or State Space Models) and assess whether it is capable of not only deciding (or even predicting!) whether a collision has happened (or will happen), but also whether it can predict the size of the intersection volume. Maybe a whole scene with many objects and their paths can be encoded and the output could be the same scene but with intersection volumes?

Ancillary questions will be the performance of the method (assuming it is possible), both in terms of runtime and in terms of accuracy.
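As an illustration of what sequential training data with intersection volumes could look like, the following sketch generates one labelled sequence for the simplest possible case: two spheres moving along straight paths, for which the intersection volume has a closed form. Real thesis data would involve meshes and general transformations; the function names and the linear paths are assumptions made for this example.

```python
import math


def sphere_overlap_volume(r1, r2, d):
    """Exact intersection volume of two spheres at centre distance d."""
    if d >= r1 + r2:                      # disjoint or just touching
        return 0.0
    if d <= abs(r1 - r2):                 # one sphere inside the other
        return 4.0 / 3.0 * math.pi * min(r1, r2) ** 3
    # Lens volume formed by two spherical caps.
    return (math.pi * (r1 + r2 - d) ** 2
            * (d * d + 2 * d * (r1 + r2) - 3 * (r1 - r2) ** 2)) / (12 * d)


def lerp(a, b, t):
    """Linear interpolation between two 3D points."""
    return tuple(x + t * (y - x) for x, y in zip(a, b))


def make_sequence(start_a, end_a, start_b, end_b, r_a, r_b, steps=16):
    """Return a list of (pos_a, pos_b, overlap_volume), one per time step."""
    samples = []
    for k in range(steps):
        t = k / (steps - 1)
        pos_a = lerp(start_a, end_a, t)
        pos_b = lerp(start_b, end_b, t)
        d = math.dist(pos_a, pos_b)
        samples.append((pos_a, pos_b, sphere_overlap_volume(r_a, r_b, d)))
    return samples
```

Each sample pairs the network input (the two positions at a time step) with a regression target (the volume); a collision label follows from `volume > 0`.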

Your Tasks/Challenges:

- Investigate other research on geometric computing using neural networks

- Find a promising architecture for the collision detection problem

- Train and evaluate the networks

Requirements:

- Good understanding of machine learning and deep learning concepts, familiarity with neural network architectures

- Some proficiency in programming and familiarity with one of the popular deep learning frameworks (Pytorch is a plus)

- Some knowledge of optimization problems (the training of neural networks is an optimization problem)

- Familiarity with GPGPU programming and basics of CUDA are a plus

Contact:

Prof. Dr. Gabriel Zachmann, zach at informatik.uni-bremen.de

Dr. Rene Weller, weller at informatik.uni-bremen.de

Master Thesis: Study - Human Gaze Data vs. NN Feature Data

Subject

Neural networks have proven themselves to be a valuable tool for solving certain problems in a wide variety of application domains. The inner workings of neural networks still remain a mystery, especially if and how they relate to the actual input domain (e.g. pixel space). The goal of this thesis is to design and conduct a research study to collect gaze data from participants via eye-tracking in VR and compare this data with feature data from neural networks that perform the same task on the same dataset, e.g. identifying certain shapes, which is akin to semantic segmentation. The dataset as well as the training data (labels) are already provided.

Your Tasks/Challenges:

- Develop and implement a VR-App to collect human gaze data via eye-tracking in Unreal Engine

- Design and conduct a research study using this VR-App and analyze the data

- Compare the gaze data with feature map data of neural networks, using the same task (CNNs and ViTs)

Requirements:

- Understanding (or willingness to learn) of machine learning and deep learning concepts, familiarity with basic encoder-decoder (e.g. U-Net) neural networks

- Experience in programming and familiarity with one of the popular deep learning frameworks can help

- Experience in designing and conducting a user/research study (or willingness to learn)

- Experience with VR and Unreal Engine (or willingness to learn)

Contact:

Thomas Hudcovic, hudo at uni-bremen.de

Prof. Dr. Gabriel Zachmann, zach at informatik.uni-bremen.de

Master Thesis: Real-time Manipulation of Stereoscopic 3D Rendering in VR using Shader Programming

Subject

In stereoscopic cinema, various technical tricks are employed to influence the perceived 3D depth by the audience and to direct attention to specific areas of a scene. These techniques include, for example, varying the interpupillary distance (IPD) for different objects within a scene and subsequently combining them. Such manipulations can be used to make objects appear larger or smaller, alter the perception of distances, or guide the viewer's focus to particular elements. Virtual Reality (VR), with its direct control over stereoscopic rendering, offers promising possibilities to utilize these effects interactively and in real-time. Shader programming provides a flexible and performant way to control image generation in this context.
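To see why a per-object IPD changes perceived depth, consider the simple pinhole model of stereo viewing: for an eye separation e and a projection (zero-parallax) plane at distance D, a point at depth z has the screen parallax e * (z - D) / z. The sketch below applies this formula with a per-object scale on the eye separation. It is a simplified geometric model, and the function names and all numeric values (in metres) are assumptions made for illustration.

```python
def screen_parallax(depth, eye_separation=0.064, screen_distance=2.0):
    """Horizontal parallax on the projection plane for a point at `depth`.

    Positive: behind the plane; zero: on the plane; negative: in front.
    """
    if depth <= 0.0:
        raise ValueError("depth must be positive")
    return eye_separation * (depth - screen_distance) / depth


def scene_parallaxes(objects, base_ipd=0.064, screen_distance=2.0):
    """objects: iterable of (name, depth, ipd_scale) tuples.

    Each object is rendered with its own eye separation base_ipd * ipd_scale.
    """
    return {name: screen_parallax(depth, base_ipd * scale, screen_distance)
            for name, depth, scale in objects}
```

Two objects at the same depth but with different `ipd_scale` values get different parallaxes, so the viewer perceives them at different distances from the projection plane even though their geometry coincides; this is the effect the film techniques exploit.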

Your Tasks

The goal of this Master's thesis is to investigate and develop methods for real-time manipulation of stereoscopic 3D rendering in VR scenes using shader programming. Techniques used in stereoscopic film production will be analyzed and adapted for application in VR. Potential tasks include:

- Studying the fundamentals of stereoscopic 3D rendering, depth perception, and shader programming.

- Analyzing and categorizing depth manipulation techniques in stereoscopic film.

- Developing and implementing shader-based methods to replicate selected depth manipulation techniques in VR.

- Investigating the effects of the implemented techniques on depth perception and user experience in VR.

- Evaluating the performance and real-time capability of the developed methods.

- Developing application examples or an interactive demo application to showcase the results.

Requirements

- Strong knowledge of computer graphics and 3D geometry

- Experience in programming with C++

- Knowledge of shader programming (e.g., GLSL)

- Knowledge in the field of Virtual Reality is an advantage

- Independent and structured work ethic

Contact:

Rene Weller, weller at informatik.uni-bremen.de

Prof. Dr. Gabriel Zachmann, zach at informatik.uni-bremen.de

Master Thesis: Accelerating Sphere-Based Bounding Volume Hierarchies (BVHs) for Continuous Collision Detection (CCD) with Raytracing Hardware

Subject

Continuous Collision Detection (CCD) is a crucial technique in computer graphics and simulation. Unlike discrete collision detection, which only checks for object overlap at specific time steps, CCD calculates the precise moment of a collision between moving objects. This is essential to prevent objects from passing through each other during fast movements or large time steps. A challenge in collision detection arises because the movement of a vertex between two frames forms a line. Therefore, CCD is closely related to ray tests and thus raytracing. Bounding Volume Hierarchies (BVHs), a hierarchical data structure that encloses objects with simple volumes (e.g., spheres or boxes), are frequently used for efficient collision queries, similar to their application in raytracing.
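The connection between CCD and ray tests can be sketched with the most basic case: a point that moves linearly from `p0` to `p1` during one frame, tested against a static sphere. Finding the time of impact is then a ray-sphere intersection restricted to the parameter range [0, 1]. The code is a plain CPU illustration with invented function names, not an implementation on raytracing hardware.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def time_of_impact(p0, p1, center, radius):
    """Earliest t in [0, 1] at which the point p0 + t*(p1 - p0) touches
    the sphere, or None if there is no contact during the frame."""
    d = [b - a for a, b in zip(p0, p1)]        # motion within the frame
    m = [a - c for a, c in zip(p0, center)]    # start relative to centre
    a = dot(d, d)
    b = 2.0 * dot(m, d)
    c = dot(m, m) - radius * radius
    if c <= 0.0:
        return 0.0                             # already touching or inside
    if a == 0.0:
        return None                            # no motion, still outside
    disc = b * b - 4.0 * a * c
    if disc < 0.0:
        return None                            # the line misses the sphere
    t = (-b - math.sqrt(disc)) / (2.0 * a)     # smaller root = entry time
    return t if 0.0 <= t <= 1.0 else None
```

A moving sphere of radius r_a against a static sphere of radius r_b reduces to the same test with `radius = r_a + r_b`, which is what makes sphere-based bounding volumes a natural fit for ray queries. Note that a discrete check at t = 0 and t = 1 alone would miss a fast point that passes completely through the sphere within one frame.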

Your Tasks

This Master's thesis aims to investigate how the raytracing hardware of modern GPUs can be used to accelerate sphere-based BVHs for CCD. Modern GPUs have specialized hardware for raytracing, optimized for efficient ray-intersection calculations. This hardware will be leveraged to speed up the computationally intensive calculations in CCD with sphere-based BVHs. Potential tasks include:

- Studying the fundamentals of continuous collision detection, Bounding Volume Hierarchies, and raytracing.

- Developing an algorithm for performing CCD with sphere-based BVHs using raytracing hardware.

- Implementing the algorithm on a modern GPU.

- Evaluating the algorithm's performance compared to traditional CCD methods.

- Analyzing the accuracy and robustness of the algorithm.

Requirements

- Strong knowledge of computer graphics and 3D geometry

- Experience in programming with C++

- Knowledge of GPU programming (e.g., CUDA or Vulkan) is an advantage

- Independent and structured work ethic

Contact:

Rene Weller, weller at informatik.uni-bremen.de

Prof. Dr. Gabriel Zachmann, zach at informatik.uni-bremen.de

Bachelor Thesis: Generating 2D character animations with deep generative models

Subject

This thesis aims to investigate and find the best approach for generating 2D characters and sprite sheet animations for that character. Creation of 2D characters now is a task that can quickly be done with deep generative models, but we aim to go a step further and make a sprite sheet for that character with these models. Sprite sheets are images that contain different states of the character for each frame, and if you play frames one after each other, you have an animation. Making a sprite sheet animation is time-consuming because the artist has to draw or adjust the character components for each frame; this thesis aims to find the best approach for generating this animation with deep generative models.
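As a small illustration of how a sprite sheet is played back, the following sketch maps a frame index to its rectangle inside the sheet and picks the frame to show at a given time. It assumes a regular grid layout with equally sized frames stored row by row; the function names and the looping behaviour are hypothetical choices, not a fixed format.

```python
def frame_rect(index, columns, frame_w, frame_h):
    """Pixel rectangle (x, y, width, height) of frame `index` in a sheet
    whose frames are stored row by row in a regular grid."""
    row, col = divmod(index, columns)
    return (col * frame_w, row * frame_h, frame_w, frame_h)

def frame_at(time_s, fps, frame_count):
    """Index of the frame to display at time `time_s` for a looping animation."""
    return int(time_s * fps) % frame_count
```

A generated sprite sheet can be checked with the same logic: cut it into frames with `frame_rect` and play them in order to judge the temporal consistency of the animation.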

Your Tasks

Your task is to familiarize yourself with generative models, investigate the state of the art in this field, and determine which generative models can generate a character's sprite sheet. There are various approaches to achieving this goal; you should try as many as you can, compare how complex they are to use, and compare the quality of the generated images and animations.

Requirements

We are seeking an enthusiastic and talented student with:

- Experience in Python.

- Nice to have: Experience in Unity.

Contact:

Danial Barazandeh, barazand at uni-bremen.de

Prof. Dr. Gabriel Zachmann, zach at informatik.uni-bremen.de

Master Thesis: CGAL-ifying some of CGVR's code

Subject

CGAL (www.cgal.org) is a mature and well-known library for all kinds of computational geometry algorithms, written in C++, and widely used in industry and academia.

At the CGVR lab, we have developed a number of geometric algorithms, such as software for computing and using sphere packings to answer various geometric queries (for instance, intersection queries on 3D models). Another software performs collision detection extremely fast, using a special type of bounding volume (k-DOPs). Exactly which part of CGVR's code base is to be ported to CGAL within the scope of this thesis will be discussed at the beginning, taking into account your preferences and pre-existing knowledge of geometric computing.
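For readers unfamiliar with k-DOPs, here is a minimal sketch of the idea, assuming a 14-DOP built from seven fixed directions. It is not our collision detection code, and the direction set and function names are chosen purely for illustration: a k-DOP stores, for each direction, the interval covered by the object's projection, and two k-DOPs can only overlap if all their intervals overlap.

```python
# Seven directions (with their negatives) give a 14-DOP: the three
# coordinate axes plus four cube diagonals. Left unnormalized for simplicity.
DIRECTIONS = [(1, 0, 0), (0, 1, 0), (0, 0, 1),
              (1, 1, 1), (1, -1, 1), (1, 1, -1), (1, -1, -1)]

def build_kdop(points):
    """Return one (min, max) interval per direction for a set of 3D points."""
    slabs = []
    for d in DIRECTIONS:
        proj = [p[0] * d[0] + p[1] * d[1] + p[2] * d[2] for p in points]
        slabs.append((min(proj), max(proj)))
    return slabs

def kdops_overlap(a, b):
    """Conservative test: False means the objects are certainly disjoint,
    True means they may intersect and need a more precise test."""
    return all(lo_a <= hi_b and lo_b <= hi_a
               for (lo_a, hi_a), (lo_b, hi_b) in zip(a, b))
```

The diagonal directions are what make a k-DOP tighter than an axis-aligned box: two objects whose boxes overlap can still be separated along one of the diagonals.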

With this thesis, we would like to explore the possibilities and effort of porting our code to the CGAL library, such that our code would then be available to the wider public, and hopefully can be maintained much longer.

Ideally, the code will get included in CGAL by the end of your thesis, but this is not a prerequisite for a successful completion of the work. If you would like to attend one of the regular developer meetings of the CGAL community, we can arrange that and also finance it.

Your Tasks

- Get familiar with the mostly-templates-based framework of CGAL

- Get familiar with our code and the underlying algorithms, for instance, the sphere packing or the k-DOP code

- Port our code to CGAL

- Develop performance tests

- Evaluate the robustness of our code

Requirements

We are seeking an enthusiastic and talented student with:

- Good command of C++

- Some familiarity with geometric computing, such as is taught in computational geometry or computer graphics

- Pleasure in developing production code

- Pleasure in understanding geometric algorithms

- Knowledge in software engineering is a plus

Contact:

Prof. Dr. Gabriel Zachmann, zach at informatik.uni-bremen.de

Master/Bachelor Thesis: Point Cloud Rendering

Subject

With the advent of cutting-edge scanning technologies and 3D data capture, point cloud datasets have grown to unprecedented sizes, containing billions of data points. The conventional rendering approaches simply fall short in handling such colossal volumes, leading to reduced performance, compromised visual fidelity, and frustrating user experiences.

Your Tasks

Your task will be to innovate and engineer a novel technique that cleverly optimizes memory usage and computational efficiency, while preserving the intricate details and accuracy of the original point cloud data. In case of a bachelor's thesis, making existing techniques and tools usable for the application in the Institute for Protecting Maritime Infrastructures is a suitable task, too.

Requirements

We are seeking an enthusiastic and talented student with:

- Proficiency in computer graphics and 3D rendering techniques,

- Programming skills, and

- A passion for pushing the boundaries of what's possible in the realm of visualization.

Contact:

Prof. Dr. Gabriel Zachmann, zach at informatik.uni-bremen.de

Dr.-Ing. Jannis Stoppe,

Institut für den Schutz maritimer Infrastrukturen,

Bremerhaven,

phone: +49 471 924199 43,

email: Jannis.Stoppe at dlr.de,

DLR.de/mi

Master/Bachelor Thesis: Point Cloud Segmentation

Subject

Traditional approaches for point cloud segmentation using machine learning techniques often require vast amounts of annotated data, making their application cumbersome and time-consuming or downright impossible.

Your Tasks/Challenges:

Your task will be to explore innovative machine learning techniques, including few-shot-approaches, designing algorithms that can leverage prior knowledge from a small set of labeled point clouds to accurately segment new, previously unseen data.

Requirements

We are seeking an enthusiastic and talented student with:

- Proficiency in computer graphics and 3D rendering techniques,

- Programming skills, and

- A passion for pushing the boundaries of what's possible in the realm of visualization.

Contact:

Prof. Dr. Gabriel Zachmann, zach at informatik.uni-bremen.de

Dr.-Ing. Jannis Stoppe,

Institut für den Schutz maritimer Infrastrukturen,

Bremerhaven,

phone: +49 471 924199 43,

email: Jannis.Stoppe at dlr.de,

DLR.de/mi

Master/Bachelor Thesis: Point Cloud Labeling

Subject

Point cloud data lies at the core of modern technologies such as self-driving cars, augmented reality, and 3D mapping. However, annotating the training data sets for these technologies is still a time-consuming and tedious process, often resulting in only sparse data being available, especially for niche applications. One reason is that traditional labeling approaches usually rely on 2-dimensional projections, meaning that the selection of points is often a multi-step-process that makes even the selection of simple shapes a complex process.

Your Tasks/Challenges:

Your challenge will be to work on the immense potential of immersive 3D point cloud data labeling. By operating directly in 3D space, projection issues might be overcome, simplifying and speeding up the labeling steps in the process. By leveraging VR technologies, users could potentially create vast amounts of proper baseline data sets for their algorithms more easily. So, the task is to design, implement, and evaluate a novel 3D point selection technique that operates directly in VR. Thus, you could unlock the next level of precision and efficiency in data annotation.
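As a baseline to compare a novel technique against, the simplest selection that operates directly in 3D is a spherical "brush" attached to the VR controller. The sketch below assumes the point cloud is a plain list of (x, y, z) tuples and that the controller position is given in the same coordinate frame; all names are hypothetical.

```python
def select_in_sphere(points, center, radius):
    """Indices of all points that lie inside the spherical brush."""
    r2 = radius * radius
    return [i for i, p in enumerate(points)
            if sum((a - b) ** 2 for a, b in zip(p, center)) <= r2]

def apply_label(labels, indices, label):
    """Assign `label` to the selected points; `labels` maps index -> label."""
    for i in indices:
        labels[i] = label
    return labels
```

This brute-force version visits every point. For the data set sizes mentioned above, a spatial data structure would be needed to keep the selection interactive.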

Requirements

We are seeking an enthusiastic and talented student with:

- Proficiency in computer graphics and 3D rendering techniques,

- Programming skills, and

- A passion for pushing the boundaries of what's possible in the realm of visualization.

Contact:

Prof. Dr. Gabriel Zachmann, zach at informatik.uni-bremen.de

Dr.-Ing. Jannis Stoppe,

Institut für den Schutz maritimer Infrastrukturen,

Bremerhaven,

phone: +49 471 924199 43,

email: Jannis.Stoppe at dlr.de,

DLR.de/mi

Master Thesis: Collision Detection using the Latent Space of Neural Networks

Subject

Neural networks encode a lot of valuable information in their latent space. However, utilizing that in geometric computing is a challenge. One example is collision detection, which is the problem of deciding whether or not two meshes intersect with each other.

It should be possible to train a neural network (e.g., a graph neural network, or, maybe, a transformer) such that it encodes somehow the shape of a given mesh in its latent space. The central question of this thesis is: is it possible to utilize the latent space of two networks (encoding two different shapes) in order to decide whether or not they collide, given their positions (and orientations) in space?

Ancillary questions will be the performance of the method (assuming it is possible), both in terms of runtime and in terms of accuracy.

One approach to solve this could be to train one encoder/decoder network for each shape, then freeze their latent spaces and use those, together with the poses of the meshes, at runtime as input to another network, which will be trained to decide the collision problem.

Your Tasks/Challenges:

- Investigate other research on geometric computing using neural networks

- Find a promising architecture for the collision detection problem

- Train and evaluate the networks

Requirements:

- Good understanding of machine learning and deep learning concepts, familiarity with neural network architectures

- Some proficiency in programming and familiarity with one of the popular deep learning frameworks (PyTorch is a plus)

- Some knowledge of optimization problems (the training of neural networks is an optimization problem)

- Familiarity with GPGPU programming and basics of CUDA are a plus

Contact:

Prof. Dr. Gabriel Zachmann, zach at informatik.uni-bremen.de

Dr. Rene Weller, weller at informatik.uni-bremen.de

Master Thesis: Investigating Methods for a Semantic Abstraction Schedule of Data to Aid Neural Networks During Training

Subject

Neural networks have proven themselves to be a valuable tool for solving certain problems in a wide variety of application domains. However, every neural network model starts the same way: with the acquisition of suitable data and the subsequent training of the model. Training itself is a huge area of research of its own, including optimization techniques for training time and gradient stability. The goal of this thesis is to investigate whether training can be accelerated and/or stabilized with a "semantic abstraction schedule" for data samples in training batches. More specifically, the task will be to investigate what effects the (epoch-dependent) scheduled deblurring of sample images might have on the training of encoder-decoder networks, and whether another form of parameterized semantic abstraction of the sample information exists or is even more suitable.
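One possible reading of an epoch-dependent deblurring schedule is sketched below: the standard deviation of a Gaussian blur starts at some maximum and decays to zero over the course of training, so that early epochs see abstracted images and late epochs see the original ones. The linear decay, the default `sigma_start`, and the 3-sigma kernel radius are assumptions made for this example; finding a good schedule is part of the thesis.

```python
import math

def blur_sigma(epoch, total_epochs, sigma_start=4.0):
    """Blur strength for a given epoch: sigma_start at epoch 0,
    decaying linearly to 0 at the last epoch."""
    if total_epochs <= 1:
        return 0.0
    frac = min(max(epoch / (total_epochs - 1), 0.0), 1.0)
    return sigma_start * (1.0 - frac)

def gaussian_kernel_1d(sigma):
    """Normalized 1D Gaussian kernel; applying it along rows and then
    columns blurs an image. sigma <= 0 yields the identity kernel."""
    if sigma <= 0.0:
        return [1.0]
    radius = max(1, math.ceil(3.0 * sigma))
    weights = [math.exp(-(x * x) / (2.0 * sigma * sigma))
               for x in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]
```

In a training loop, the kernel for the current epoch would be applied to every image of a batch before it is fed to the network.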

Your Tasks/Challenges:

- Develop and investigate methods to get visual abstractions of images, without damaging essential information for the network, using PyTorch

- Investigate how and if such methods can act as a tool for regularization

- Investigate if there is another way of achieving the same effect by "acting" on the network itself, rather than on the data. Maybe there is a way of adapting EMA (Exponential Moving Average) models to achieve a similar effect during training?

- Test and evaluate the methods on different encoder-decoder networks or classifiers
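For reference, the EMA mentioned in the tasks above keeps a slowly moving copy of the network weights. The sketch below shows the standard update rule on a flat list of parameters; the decay value is a typical placeholder rather than a recommendation, and how (or whether) this can be adapted to mimic a data-side abstraction schedule is an open question of the thesis.

```python
def ema_update(ema_params, params, decay=0.99):
    """One EMA step: the averaged weights move a small fraction
    (1 - decay) towards the current weights."""
    return [decay * e + (1.0 - decay) * p for e, p in zip(ema_params, params)]
```

A larger `decay` gives a smoother, more slowly changing model, which is loosely analogous to a stronger abstraction of the training signal.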

Requirements:

- Good understanding of machine learning and deep learning concepts, familiarity with basic classifier and encoder-decoder neural networks

- Intermediate proficiency in programming and familiarity with one of the popular deep learning frameworks (PyTorch is a plus)

- Understanding of optimization problems (the training of neural networks is an optimization problem) and their rudimentary structure

- Familiarity with GPGPU-programming and basics of CUDA are a plus

Contact:

Thomas Hudcovic,

hudo at uni-bremen dot de

Prof. Dr. Gabriel Zachmann, zach at informatik.uni-bremen.de

Master Thesis: Exploring the Impact of Self-Shadows in Multi-User VR Applications

Objective:

This thesis will investigate the influence of shadows in both single-user and multi-user virtual reality (VR) settings. Current VR systems, constrained by limited tracking to only the user's head and hands, often fail to render self-shadows accurately. The goal is to determine how the presence of realistic shadows affects user interaction and perception in VR, examining both beneficial outcomes (like enhanced depth perception and interaction accuracy) and potential drawbacks.

Research Tasks:

The candidate will design experiments to test the effects of self-shadows on user performance in various tasks within VR environments and implement them into a game engine. Additionally, the research will explore the psychological impact of shadows in multi-user scenarios (perhaps a little in the style of "Peter Schlemihl's Wondrous Tale").

Technical Approach:

To facilitate this research, a VR application will be developed where an invisible 3D avatar, replicating the participant’s movements including hands and feet, will cast a shadow. This will involve:

- Employing a game engine (such as Unreal Engine) to handle shadow rendering.

- Utilizing Vive trackers for precise tracking of the body's joints, animating the avatar through inverse kinematics.

- Optionally using motion capture systems like OptiTrack for detailed movement replication or depth sensors (e.g., Microsoft Azure Kinect) to reconstruct physical interactions more accurately.

Required Skills:

- Design studies / structured experiments.

- Proficiency with game engines like Unreal Engine.

- Basic knowledge in animation techniques or point cloud processing.

- Strong programming skills.

Contact:

Andre Mühlenbrock, muehlenb at uni-bremen.de

Prof. Dr. Gabriel Zachmann, zach at informatik.uni-bremen.de

Master Thesis: Immersive Visualization in VR

Subject

Immersive visualization tries to combine scientific visualization (e.g., visualization of weather simulations) with immersive technologies (e.g., VR headsets and 3D interaction). In this thesis, you are to implement visualization techniques in the game engine Unreal Engine 5, such that standard Excel or CSV tables can be read by your system and visualized in VR. Also, some standard 3D interaction techniques are to be implemented, such as navigation around the data, selecting regions of the data, etc. Depending on availability, we would like to visualize data on the spread of the recent pandemic, and data from specific ocean measurements.

Your Tasks/Challenges:

- Get comfortable with Unreal Engine 5, immersive visualization techniques, data sources

- Implement existing immersive visualization techniques in Unreal

- Develop techniques to deal with temporal data in VR (e.g., define time slices)
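To illustrate what "time slices" could mean for tabular input, the following sketch groups the rows of a CSV table into slices of fixed duration. It is written in Python for brevity, whereas the thesis implementation would live in Unreal; the column name and the slice width are hypothetical parameters.

```python
import csv
import io
from collections import defaultdict

def time_slices(csv_text, time_column, slice_width):
    """Group CSV rows by time: slice k holds all rows whose time value
    lies in [k * slice_width, (k + 1) * slice_width)."""
    slices = defaultdict(list)
    for row in csv.DictReader(io.StringIO(csv_text)):
        t = float(row[time_column])
        slices[int(t // slice_width)].append(row)
    return dict(slices)
```

Each slice could then be shown as one step of an animation in VR, or several slices could be laid out side by side in space.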

Requirements:

- C++

- Good ability to work self-driven.

Contact:

Prof. Dr. Gabriel Zachmann, zach at informatik.uni-bremen.de

Thesis: Machine Learning for Uncertainty Analysis in Rigid Body Dynamics

Subject

In scenarios involving nonlinear functions that adjust uncertain parameters, whether correlated or independent, the predominant approach for propagating uncertainty involves sampling techniques derived from the Monte Carlo method. However, when dealing with large-scale data or costly functions, this error propagation can become prohibitively expensive. Under such circumstances, implementing a surrogate model or leveraging parallel computing strategies may prove essential.

The rigid body is approximated with a set of spheres. Intersecting spheres are used to compute a force. The task would be to learn the outgoing force uncertainty (Covariance or the Eigendecomposition) for a set of colliding spheres (input).
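The sampling baseline that a learned model would be compared against can be sketched as follows. We assume, purely for illustration, a linear penalty force proportional to the penetration depth of two spheres and isotropic Gaussian noise on the position of one sphere; the actual force model and the uncertain parameters will differ.

```python
import math
import random

def penalty_force(c_a, r_a, c_b, r_b, stiffness=1.0):
    """Force on sphere A from an overlap with sphere B (assumed model:
    stiffness * penetration depth, along the line between the centers)."""
    d = [a - b for a, b in zip(c_a, c_b)]
    dist = math.sqrt(sum(x * x for x in d))
    depth = r_a + r_b - dist
    if depth <= 0.0 or dist == 0.0:
        return [0.0, 0.0, 0.0]
    return [stiffness * depth * x / dist for x in d]

def force_statistics(c_a, r_a, c_b, r_b, pos_sigma, n_samples=5000, seed=0):
    """Monte Carlo estimate of the mean and 3x3 covariance of the force
    when the center of sphere A is perturbed by Gaussian noise."""
    rng = random.Random(seed)
    samples = []
    for _ in range(n_samples):
        noisy = [c + rng.gauss(0.0, pos_sigma) for c in c_a]
        samples.append(penalty_force(noisy, r_a, c_b, r_b, 1.0))
    mean = [sum(s[i] for s in samples) / n_samples for i in range(3)]
    cov = [[sum((s[i] - mean[i]) * (s[j] - mean[j]) for s in samples)
            / (n_samples - 1) for j in range(3)] for i in range(3)]
    return mean, cov
```

A surrogate model would take the sphere configuration and the input uncertainty as input and predict `cov` (or its eigendecomposition) directly, without drawing thousands of samples.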

Your Tasks/Challenges:

- Generate a large enough dataset

- Train a network: MLP, KAN (Kolmogorov Arnold Network), BNN (Bayesian Neural Network) or Gaussian Process?

- Comparison to sampling (ground truth)

Requirements:

- C++, Math, Machine Learning (PyTorch)

- Good ability to work self-driven.

Contact:

Hermann Meißenhelter, meissenhelter at uni-bremen.de

Prof. Dr. Gabriel Zachmann, zach at informatik.uni-bremen.de

Thesis: Probabilistic Collision Detection

Subject

In many applications, such as robotics, virtual environments, and dynamic simulation, obtaining exact representations of objects is often difficult. Instead, the representations of objects are described using probability distribution functions. This is because the data from the environment is captured using sensors, and only partial observations are available. Additionally, the primitives captured or extracted using sensors tend to be noisy. In this scenario, the objective is to calculate the probability of collision between two or more objects when the representations of one or more objects (such as positions, orientations, etc.) are expressed in terms of probability distributions.

A significant part has already been done. We use sphere packings to approximate the objects and compute the collision probability between two spheres (isotropic Gaussian) analytically with a tight upper bound (also for rigid bodies). However, we are missing a comparison/benchmark between different methods.

Your Tasks/Challenges:

- Compute ground truth collision probability by sampling

- Set up objects at given absolute distances from each other and measure collision probability and computation time

- Nice to have: Implement some other method(s) for comparison

- Optional: Include rotational uncertainty in collision probability computation
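The first task, computing a ground truth by sampling, can be sketched for the elementary case of two spheres. We assume here that only the relative position is uncertain, modelled as isotropic Gaussian noise on the center of sphere A; the function name, the default sample count, and the fixed seed are illustrative choices.

```python
import random

def collision_probability(mean_a, r_a, mean_b, r_b, sigma,
                          n_samples=20000, seed=0):
    """Monte Carlo estimate of the probability that two spheres overlap
    when the center of sphere A is Gaussian-distributed around mean_a."""
    rng = random.Random(seed)
    limit2 = (r_a + r_b) ** 2
    hits = 0
    for _ in range(n_samples):
        a = [m + rng.gauss(0.0, sigma) for m in mean_a]
        d2 = sum((x - y) ** 2 for x, y in zip(a, mean_b))
        if d2 <= limit2:
            hits += 1
    return hits / n_samples
```

Sweeping the distance between `mean_a` and `mean_b` and recording both the estimate and the run time yields the kind of benchmark curve against which the analytic upper bound can be compared.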

Requirements:

- C++ and some math

- Good ability to work self-driven.

Contact:

Hermann Meißenhelter, meissenhelter at uni-bremen.de

Prof. Dr. Gabriel Zachmann, zach at informatik.uni-bremen.de

Thesis: Treatment of Reflex Syncopes Using VR

Subject

There has been a lot of research on the treatment of phobias using VR, and the effectiveness has been shown numerous times for a number of concrete phobias (e.g., fear of heights). Reflex syncopes (for instance, brief loss of consciousness when seeing blood) are different in that they directly affect blood pressure and heart rate.

The question of this thesis is whether such conditions can be treated using VR or AR, perhaps similar to the exposure therapy for phobias.

Your Tasks/Challenges:

This thesis is special because it faces a number of challenges:

- You need to find a clinical expert for syncopes for collaboration;

- You need to find enough participants that exhibit syncopes when exposed to the same type of trigger (e.g., blood);

- Determine whether VR or AR are better suited for a potential treatment;

- You need to implement a high-quality rendition of the trigger that can robustly trigger the syncope.

- Conduct the user study.

On the other hand, this thesis treads on totally uncharted ground, so your reward could be immense (even a scientific paper at a conference, with our help, could be possible).

Requirements:

- Some experience with game engines (either Unreal or Unity should work);

- Good ability to work self-driven.

Contact:

Prof. Dr. Gabriel Zachmann, zach at informatik.uni-bremen.de

Thesis: Original or fake? WOLS (Alfred Otto Wolfgang Schulze, 1913–1951)

Subject

The artist Alfred Otto Wolfgang Schulze, alias Wols (Berlin 1913 - Paris 1951), is considered one of the most important pioneers of informal art in Europe. He left Germany in 1932 and moved to Paris, where he initially worked mainly as an advertising and portrait photographer. When he was interned in 1939 due to France's entry into the war, he shifted his artistic focus and created numerous watercolours and pen and ink drawings inspired by Surrealism. After his release from the Les Milles camp, he increasingly devoted himself to the graphic depiction of dissolution processes as well as sculptural and linear structures. He created his first completely abstract pictures. When he then created around forty paintings for the René Drouin Gallery in Paris in the mid-1940s, his pictures came to symbolise what was henceforth known in Europe as Art Informel or Tachisme, and which developed at the same time in the USA, with Jackson Pollock, under the name Abstract Expressionism.

The Wols Archive, run by the Karin and Uwe Hollweg Foundation and founded by Ewald and Sylvia Rathke, has recorded the majority of WOLS' works to date and will publish a catalogue raisonné of his drawings and watercolours in a few years' time.

Wols' posthumous success had and still has a very negative downside: Wols' works have been forged very frequently since the end of the 1950s. Thanks to the connoisseurship of Wols expert Ewald Rathke, it has been possible to identify the majority of forgeries since the 1970s; however, new genuine and fake drawings and watercolours continue to emerge.

Your Tasks/Challenges:

A program is to be developed which, on the basis of hundreds of genuine and secured fake works (all of which are digitally available at the Karin and Uwe Hollweg Foundation), will provide automated assistance in the future identification of genuine and false works.

Requirements:

Some experience with machine learning, in particular neural networks, and/or random forests, etc. Also, a bit of programming experience with Python. Ideally, a bit of experience with PyTorch or TensorFlow.

Contact:

Prof. Dr. Gabriel Zachmann, zach at informatik.uni-bremen.de

Bachelor Thesis: Influence of Hand Models on Hand Pose Estimation

Subject

Pose estimation of hands (in combination with objects) is an important foundation for both VR and robotics applications. Methods based on deep learning usually outperform classical methods by a wide margin. However, they require annotated training images. The annotation process of real images with hands is cumbersome and prone to errors. Therefore, synthetic data is an important tool to train pose estimators.

In this thesis you measure the influence of hand models with different quality (see image) on hand pose estimation.

Your Tasks/Challenges:

- Use our data generation tools and create training data for different existing hand models

- Use an existing hand pose estimator to investigate the influence of these models

Requirements:

- Experience in Python

Contact:

Janis Roßkamp, j.rosskamp at cs.uni-bremen.de

Prof. Dr. Gabriel Zachmann, zach at informatik.uni-bremen.de

Thesis: A Hybrid Approach to Pose Estimation of Hands using Deep Learning

Subject

Hand Pose Estimation is important for both virtual reality applications and motion capturing (mocap) for games and movies. Current methods often use RGB images (top image) or marker-based strategies. However, RGB images typically fail to provide high-precision pose estimation, whereas marker-based motion capturing requires numerous markers to accurately track the hand requiring the use of an intrusive glove (bottom image). Moreover, this marker-based approach results in the loss of all hand information, such as shape and color.

In this thesis, you develop a novel hybrid method that combines the strengths of mocap and RGB images to enhance the accuracy of hand pose estimation. Using only a few markers, e.g., on the fingertips, we can improve current methods on RGB images.

Your Tasks/Challenges:

- Generate synthetic training data suitable for the hybrid hand pose estimation method. You can use our existing tools for the synthetic data generation

- Various projects with code exist for Hand Pose Estimation. You can choose a project which you find most suitable and modify its underlying neural network so that it can incorporate precise motion capturing data.

Requirements:

- Experience in Python

- Basic knowledge in Deep Learning

- Optional: Experience in PyTorch

Contact:

Janis Roßkamp, j.rosskamp at cs.uni-bremen.de

Prof. Dr. Gabriel Zachmann, zach at informatik.uni-bremen.de

Master Thesis: Real-Time Rendering of Dynamic Point Clouds

Subject

Rendering 3D point clouds, captured using depth or LiDAR sensors, in real-time is a fundamental and challenging area in computer graphics. A point cloud consists of numerous 3D points that hold spatial position and color information. The primary goal of point cloud rendering is to display this collection of points (which might be a simple array of 3D vectors) in such a way that they are perceived as an opaque surface on a screen.

The current methods in point cloud rendering are diverse. Some approaches visualize the points directly as circular "splats" on the screen. Other techniques transform the points into a 3D grid and reconstruct a mesh, which is then rendered. Recent techniques utilize different types of deep neural networks.
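To show why naive point rendering does not yet yield an opaque surface, here is a minimal CPU sketch that projects each point to a single pixel with a depth test. It assumes points given in camera space (looking down +z) and a simple pinhole camera with the principal point at the image center; none of this reflects how the PCRFramework is implemented.

```python
def render_points(points, width, height, focal):
    """Project (x, y, z, color) points to pixels, keeping the nearest
    point per pixel. Pixels hit by no point stay None (holes)."""
    depth = [[float("inf")] * width for _ in range(height)]
    image = [[None] * width for _ in range(height)]
    for x, y, z, color in points:
        if z <= 0.0:
            continue                      # behind the camera
        u = int(round(focal * x / z + width / 2))
        v = int(round(focal * y / z + height / 2))
        if 0 <= u < width and 0 <= v < height and z < depth[v][u]:
            depth[v][u] = z               # depth test: nearest point wins
            image[v][u] = color
    return image
```

The remaining `None` pixels are exactly the holes that splats, mesh reconstruction, or neural approaches are meant to close.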

Your Task:

Depending on your preferences, your task may vary and may be:

- to develop/conceive your own novel rendering technique.

- to make improvements to existing rendering techniques.

- to reimplement a part of a highly advanced rendering technique from the literature (see next paragraph).

Highly advanced techniques such as Fusion4D (Microsoft) or Function4D (among others, by Google), which achieve high-quality results, typically do not release their source code. Therefore, re-implementing parts of these techniques would be very interesting for a large research community in the context of a master thesis.

Working Environment:

Regardless of your chosen path (whether developing a new technique, improving an existing one, or partially re-implementing a very advanced unpublished technique), your technique should finally be integrated into our Point Cloud Rendering Framework (PCRFramework). Our PCRFramework is a stable, lightweight and easy-to-expand framework implemented in modern C++ and offers an ImGUI frontend, that already supports different basic kinds of point cloud rendering techniques (Splat Rendering, Mesh Rendering, TSDF with Real-time Marching Cubes). It already possesses numerous functions to load point clouds from an Azure Kinect, Microsoft Kinect v2, or from a recorded file. The framework integrates CUDA and already offers some useful functions to access the loaded point cloud in CUDA as well as from the CPU. Should you wish to use neural networks, the PCRFramework is also capable of loading and inferring neural networks trained in PyTorch via LibTorch. In this case, your implementation would primarily be in Python and PyTorch.

Requirements:

- Solid knowledge and experience in 3D rendering techniques (CG1 / CG2).

- Programming skills (C++ and either OpenGL / GLSL or CUDA).

- Optional: Python and PyTorch (when you want to utilize neural networks).

Contact:

Andre Mühlenbrock, muehlenb at uni-bremen.de

Prof. Dr. Gabriel Zachmann,

email: zach at informatik.uni-bremen.de

Master Thesis: Redirected Walking in Shared Real and Virtual Spaces

Subject

Redirected walking (RDW) enables users to walk in a VR space that is larger than the real space. This works by shifting and rotating the virtual space ever so slightly, ideally below the user's just-noticeable threshold. There has been a lot of research on RDW techniques and the just-noticeable thresholds. However, how do you redirect multiple users in a shared virtual environment when the users also share the same real space, e.g., a big lab or a huge indoor court?

The setup is a number of users wearing untethered HMDs moving around in a large, common, tracked space (for instance, using optical tracking and WiFi HMDs). This setup is not quite consumer grade (yet), but we can imagine a future where such arcades are possible.

An approach to solving the RDW problem could be a kind of trajectory optimization, where users' trajectories in real space are predicted, and the optimization goal is to minimize the total deviation from all the trajectories of all users.
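Two building blocks of such a system can be sketched in a few lines. The first applies a rotation gain to a user's real head rotation, clamped to a range that is meant to stay unnoticeable; the bounds used here are placeholders, not measured thresholds. The second is one possible way to write the "total deviation" objective, assuming it is measured as the sum of squared 2D distances between predicted and redirected positions.

```python
def redirected_yaw(virtual_yaw, real_yaw_delta, gain,
                   min_gain=0.8, max_gain=1.2):
    """Advance the virtual yaw by the real rotation scaled with a gain
    that is clamped to [min_gain, max_gain] (placeholder bounds)."""
    g = min(max(gain, min_gain), max_gain)
    return virtual_yaw + g * real_yaw_delta

def total_deviation(predicted, redirected):
    """Sum of squared distances over all users and time steps. Both
    arguments hold one list of (x, y) positions per user."""
    return sum((px - rx) ** 2 + (py - ry) ** 2
               for user_p, user_r in zip(predicted, redirected)
               for (px, py), (rx, ry) in zip(user_p, user_r))
```

An optimizer would search for per-user gains that keep everyone inside the tracked space and away from each other while keeping `total_deviation` small.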

Your Tasks/Challenges:

Research the literature on multi-user RDW. Formalize the optimization problem as a mathematical non-linear optimization problem. Identify a suitable math library for solving the problem in real-time. Implement the system. Test and evaluate it with a number of users.

Requirements:

- Passion for the topic

- Programming skills

- Ideally, a bit of experience with the Unreal Engine (can be learned rather quickly)

- Ideally, some experience with mathematical optimization methods; you will not need to develop optimization codes or algorithms, but you should feel comfortable with applying existing optimization methods (and use respective libraries)

Contact:

Prof. Dr. Gabriel Zachmann,

email: zach at informatik.uni-bremen.de

Master Thesis: Inverse Reinforcement Learning and Affordances

Subject

People could program powerful chess computers before they could program a robot to walk on two legs, and many of the tasks we find easy as human beings, such as daily activities involved in preparing meals or cleaning up, turn out to be difficult to specify in detail. Thus, if we want robots to be competent helpers in the home, it would be better if we could teach them by showing what needs to be done, and for them to learn from watching us. Several techniques are being researched to enable such learning. One of these techniques is IRL—inverse reinforcement learning [1]—where the goal is to discover, by watching an "expert," the reward function that this expert is maximizing. This is more effective than simple imitation of the expert's actions. Consider the proverbial monkey shown how to wash dishes. The monkey may go through the motions of wiping, but if it did not understand that the dishes should be clean afterwards, then it won't do a good job. However, IRL is an ill-posed problem: there can be an infinity of reward functions that the expert may be demonstrating. To even make an educated guess would often require considering enormous search spaces—there are many parameters that go into characterizing even the simplest manipulation action! Additionally, the environments in which human beings perform tasks, and the tasks themselves, are in principle of unbounded complexity: if a human knows how to stack three plates on top of each other, they also know how to stack four or ten.

Your Tasks/Challenges:

The subject of this thesis is to develop an IRL system that combines existing research into relational IRL[2], modular IRL[3], and explicitly represented knowledge to enable a simulated agent to learn, from demonstrations performed in a simulated environment, how to perform tasks such as stacking various items, putting objects in and taking them out of containers, and how to cover containers. While the project can start with published techniques, it also raises research questions to investigate. Relational IRL is a technique to learn rewards that generalize and describe tasks for environments of, in principle, arbitrary complexity. However, the choice of logical formulas in the relational descriptions has a significant influence on the quality of the learned rewards—how can the logical language of these descriptions be well-chosen for the tasks we have in mind, such as stacking and container use? Furthermore, because IRL is mathematically ill-posed, many reward functions are learnable. [2], cited below, shows an example of an unstacking task, where both a reward for "there are 4, 5, or 6 blocks on the floor" and a reward for "there are no stacked blocks" are learnable from the same data, but it is only the second one that captures the intended level of generality. How can the learning process be influenced to prefer the more generalizable rewards? How can we encode which parameters of the demonstration count "as-is" and which are allowed to vary arbitrarily? The manipulations involved in stacking or container use are complex. Can these be split into several phases, allowing for independent learning for each phase and thus simplifying the search space for the IRL problem?
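The ambiguity in the unstacking example can be made tangible with a toy encoding. Below, a state is a list of stacks and each stack is a list of block names from bottom to top. This encoding and both reward functions are our own simplification for illustration and are not taken from [2].

```python
def blocks_on_floor(stacks):
    """Number of blocks that rest directly on the floor."""
    return sum(1 for s in stacks if s)

def reward_count(stacks):
    """Overly specific reward: 'there are 4, 5, or 6 blocks on the floor'."""
    return 1.0 if blocks_on_floor(stacks) in (4, 5, 6) else 0.0

def reward_no_stacks(stacks):
    """General reward: 'there are no stacked blocks'."""
    return 1.0 if all(len(s) <= 1 for s in stacks) else 0.0

# Goal state of a demonstration with five blocks: both rewards agree.
demo_goal = [["a"], ["b"], ["c"], ["d"], ["e"]]
# A larger scene with eight unstacked blocks: only the general reward fires.
bigger_goal = [[name] for name in "abcdefgh"]
# Four blocks on the floor, but one stack remains: only the specific reward fires.
still_stacked = [["a", "b", "c"], ["d"], ["e"], ["f"]]
```

Since both rewards explain `demo_goal` equally well, the demonstrations alone cannot tell them apart; the learner needs an additional bias towards the more general description.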

Requirements:

- Motivation

- Programming skills (Python, PyTorch, OpenAI Gym).

- Unreal Engine (Virtual Reality or OptiTrack)

Contact:

Prof. Dr. Gabriel Zachmann,

email: zach at informatik.uni-bremen.de

Master Theses at DLR/CGVR: Point Clouds in VR

Subject

The Department of Maritime Security Technologies at the Institute for the Protection of Maritime Infrastructures is dedicated to solving a variety of technological issues necessary for the implementation and testing of innovative system concepts to protect maritime infrastructures. This includes the development of visualization methods for maritime infrastructures, including vast point cloud data sets. The Computer Graphics and Virtual Reality Research Lab (CGVR) at the University of Bremen carries out fundamental and applied research in visual computing, which comprises computer graphics as well as computer vision. In addition, we have a long history in research in virtual reality, which draws on methods from computer graphics, HCI, and computer vision. These two research groups offer the opportunity for joint master theses, allowing students to get the best of both worlds of academic and applied science.

Potential Topics:

- Point Cloud Labeling: Your mission will be to create an intuitive and interactive VR application, revolutionizing the way we annotate and process vast amounts of point cloud data. Point cloud data lies at the core of modern technologies such as self-driving cars, augmented reality, and 3D mapping. Your challenge will be to bridge the gap between traditional 2D labeling methods and the immense potential of 3D point cloud data. Through your expertise and creativity, you will unlock the next level of precision and efficiency in data annotation.

- Point Cloud Rendering: With the advent of cutting-edge scanning technologies and 3D data capture, point cloud datasets have grown to unprecedented sizes, containing billions of data points. The conventional rendering approaches simply fall short in handling such colossal volumes, leading to reduced performance, compromised visual fidelity, and frustrating user experiences. Your task will be to innovate and engineer a novel technique that cleverly optimizes memory usage and computational efficiency, while preserving the intricate details and accuracy of the original point cloud data.

- Point Cloud Segmentation: Traditional segmentation approaches often require vast amounts of annotated data, making them cumbersome and time-consuming. Your task will be to explore innovative learning techniques, including few-shot-approaches, designing algorithms that can leverage prior knowledge from a small set of labeled point clouds to accurately segment new, previously unseen data.

Note that you do not need to work on all of the topics; they are meant as ideas for what you could work on and what is of interest to us. The specific details of your topic will be discussed once you decide that you want to work in this area.

There is also the option of receiving some funding when working on one of these topics.

Requirements:

- Proficiency in computer graphics and 3D rendering techniques.

- Strong programming skills (C++, Python, or related languages).

- A plus, but not strictly necessary: familiarity with GPU programming (CUDA) and a good understanding of hashing, image matching, and feature detection on images.

- A passion for pushing the boundaries of what’s possible in the realm of virtual environments and visualization.

Contact:

Prof. Dr. Gabriel Zachmann,

email: zach at informatik.uni-bremen.de

Master thesis: Identifying the Re-Use of Printing Matrices

Subject

Even before Gutenberg invented the printing of texts, images were printed using matrices, either carved woodblocks or engraved copperplates. Because they were expensive to produce, these matrices were often re-used even after many years or sold to other printers. Since there was no copyright, some printers simply had successful illustrations copied (with greater or lesser accuracy) for their own use.

In recent years, several million book illustrations have been digitised, naturally including many re-uses of printing matrices. However, the resulting photographs do not look exactly the same: matrices may become worn or damaged over time, the printing process may have been handled slightly differently, pages can become dirty or torn, and, lastly, the photos were taken with different camera systems and from different angles.

This thesis aims to investigate possible methods to match images to the printing matrices used, in order to track possible re-use, with the intention of incorporating the developed methods into real-world usage.

One idea could be to utilize geometric hashing on either extracted feature points (see our Massively Parallel Algorithms lecture) or on features extracted from a trained classifier network.

Your Tasks/Challenges:

- There are already systems that analyse a closed corpus of such images through direct comparison between them (https://www.robots.ox.ac.uk/~vgg/software/vise/). However, here, a procedure is sought that can work with an image database, to which new material is added constantly.

- Two aspects of the material may differ from many other tasks of analysing images:

- Firstly, many examples contain large numbers of lines, not least because light and shade are normally shown by hatching. Hence, finding feature points could be somewhat challenging.

- Secondly, one will not be able to make new photographs of the images under standardised conditions but use the images that are publicly available in repositories such as this: https://www.digitale-sammlungen.de/de/.

- Familiarize yourself with the concepts of spatial hashing (geometric hashing) and implement it so that it can take advantage of the parallelization capabilities of the GPU.

- Just throwing ORB or another feature detector at the images may not be enough to prevent false negative and false positive matches. You might need to incorporate deep learning features, and maybe even other attributes of the images, and think of suitable data structures for that.
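To give a rough idea of geometric hashing on feature points, here is a minimal CPU sketch in Python. It assumes 2D feature points stored as complex numbers (x + yj), pairwise distinct points, and a similarity transform (translation, rotation, uniform scale) between prints. The function names, the cell size, and the toy data are illustrative assumptions; a real system would iterate over several candidate bases, cope with noisy or missing points, and move the voting to the GPU.

```python
from collections import Counter, defaultdict
from itertools import permutations

def invariant_key(p, b0, b1, cell):
    """Quantized coordinates of p in the frame spanned by the basis pair (b0, b1)."""
    z = (p - b0) / (b1 - b0)  # unchanged by translation, rotation, and uniform scaling
    return (round(z.real / cell), round(z.imag / cell))

def build_table(models, cell=0.25):
    """Offline stage: hash every model point under every ordered basis pair."""
    table = defaultdict(set)
    for name, pts in models.items():
        for i, j in permutations(range(len(pts)), 2):
            for k, p in enumerate(pts):
                if k not in (i, j):
                    table[invariant_key(p, pts[i], pts[j], cell)].add((name, i, j))
    return table

def vote(table, scene, i, j, cell=0.25):
    """Online stage: take scene points i and j as basis and count matching entries."""
    keys = {invariant_key(p, scene[i], scene[j], cell)
            for k, p in enumerate(scene) if k not in (i, j)}
    votes = Counter()
    for key in keys:
        votes.update(table.get(key, ()))
    return votes

# Toy data: two hypothetical "matrices" described by a handful of feature points.
models = {
    "A": [0j, 4 + 0j, 1 + 2j, 3 + 5j, -2 + 1j],
    "B": [0j, 1 + 0j, 1j],
}
table = build_table(models)

# Scene: model A rotated by 90 degrees, scaled by 2, and shifted.
scene = [2j * p + (3 + 4j) for p in models["A"]]
votes = vote(table, scene, 0, 1)
(best_model, basis_i, basis_j), count = votes.most_common(1)[0]
print(best_model, count)
```

Each (model, basis) entry collects one vote per scene point that falls into a cell it occupies, so the transformed copy of model A is recognized although no point coordinates are compared directly. New material can be added to such a table incrementally, without recomputing the existing entries.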

Requirements:

- Solid machine learning and deep learning skills, familiarity with basic classifier neural networks

- Familiarity with the concepts of features and feature extraction w.r.t (convolutional) neural networks

- Familiarity with GPU programming (CUDA) and a good understanding of hashing and image matching and feature detection on images

- Openness for working with images from other time-periods

Contact:

Thomas Hudcovic,

hudo at uni-bremen dot de

Prof. Dr. Gabriel Zachmann, zach at informatik.uni-bremen.de

Master Thesis: Gravity Modeling and Stable Diffusion

Subject

Current and future small-body missions, such as the ESA Hera mission or the JAXA MMX mission, demand good knowledge of the gravitational field of the targeted celestial bodies. This knowledge is needed not only to ensure precise spacecraft operations around the body but also for landing maneuvers, surface (rover) operations, and science, including surface gravimetry. Different methods exist to model the gravitation of irregularly shaped bodies.

Recently (latent) stable diffusion has gained popularity as a deep learning approach. Usually, the systems work in the image space. However, this thesis should investigate how the method can be used to model a gravity field (3D space). With the polyhedral method, we can compute the gravity field of 3D shape files as ground truth data.

Your Tasks/Challenges:

- Generate a lot of ground truth data with the polyhedral method

- Find a more compact way to represent the gravity field (latent space, gravitational potential)

- Predict the gravity field of new objects

- Another output could be the density distribution inside a body (inverse problem)

Requirements:

- Excellent machine learning skills

- Basic knowledge of stable diffusion (AutoEncoder, U-Net)

- Motivation

Contact:

Prof. Dr. Gabriel Zachmann, zach at informatik.uni-bremen.de

Master Thesis: Sphere Packing Problems

Subject

Sphere packings offer a way to approximate a shape volume. They can be used in many applications. The most common usage is collision detection since it is fast and trivial to test spheres for an intersection. Another application is modeling gravitational fields or applications in medical environments with force feedback.
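The reason sphere packings are attractive for collision detection can be seen in a minimal sketch: two spheres intersect exactly when the distance between their centers is at most the sum of their radii. The function names and the brute-force pairwise loop below are illustrative assumptions only; a practical implementation would add an acceleration structure on top of the packing.

```python
def spheres_intersect(c1, r1, c2, r2):
    """True if two spheres overlap or touch; compares squared distances to avoid a sqrt."""
    d2 = sum((a - b) ** 2 for a, b in zip(c1, c2))
    return d2 <= (r1 + r2) ** 2

def packings_collide(packing_a, packing_b):
    """Brute-force test between two packings given as lists of (center, radius)."""
    return any(spheres_intersect(ca, ra, cb, rb)
               for ca, ra in packing_a
               for cb, rb in packing_b)

# Two tiny hypothetical packings approximating two objects.
object_a = [((0.0, 0.0, 0.0), 1.0), ((1.5, 0.0, 0.0), 0.5)]
object_b = [((4.0, 0.0, 0.0), 1.0), ((2.4, 0.0, 0.0), 0.5)]
print(packings_collide(object_a, object_b))
```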

Also, an important quality criterion is packing density, which is closely related to the fractal dimension. An exact determination of the fractal dimension is still an open problem.

The practical side is well understood. We use the Protosphere algorithm for triangular meshes to generate sphere packings, which approximate Apollonian diagrams. Yet, the theoretical side needs more exploration.

We are considering multiple areas where you can study single or multiple topics in a thesis.

Your Tasks/Challenges:

- Determining the fractal dimension

- Packing density (theoretical limit for approximation error)

- The precision or effect of prototypes (a symmetric object does not lead to a completely symmetric sphere packing)

Requirements:

- Joy in math and geometry (Computational Geometry)

- Motivation

Contact:

Prof. Dr. Gabriel Zachmann, zach at informatik.uni-bremen.de

Master thesis: Natural hand-object manipulations in VR using Optimization

Subject

One of the long-standing research challenges in VR is to allow users to manipulate virtual objects the same way they would in the real world, i.e., grasp them, twiddle and twirl them, etc.

One approach could be physically-based simulation, calculating the forces acting on the object and the fingers, and then integrating both hand and object positions.

Another approach, to be explored in this thesis, is to use optimization. The idea is to calculate hand-object penetrations, or minimal distances in case there are no penetrations, and then determine a new pose for both the hand (and fingers) and the object such that these penetrations are minimized (or distances are maximized).

Software for computing penetrations has been developed in the CGVR lab and is readily available. Also, many software packages for fast non-linear optimization are publicly available (e.g., pagmo).
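As a very reduced illustration of the optimization idea, the following sketch treats one fingertip and the object as spheres and moves only the object position so that the penetration shrinks. Everything in it is an assumption made for illustration: the sphere proxies, the objective (squared penetration depths plus a small penalty for moving the object), and the plain gradient descent with finite differences, which merely stands in for a proper optimizer such as pagmo and for the lab's penetration computation software.

```python
import math

def penetration(center_a, radius_a, center_b, radius_b):
    """Penetration depth of two spheres (0 if they do not overlap)."""
    dist = math.sqrt(sum((a - b) ** 2 for a, b in zip(center_a, center_b)))
    return max(0.0, radius_a + radius_b - dist)

def objective(obj_pos, obj_radius, fingers, start_pos, weight=0.01):
    """Sum of squared penetration depths plus a small penalty for moving the object."""
    pen = sum(penetration(obj_pos, obj_radius, c, r) ** 2 for c, r in fingers)
    move = sum((p - s) ** 2 for p, s in zip(obj_pos, start_pos))
    return pen + weight * move

def resolve(obj_pos, obj_radius, fingers, steps=200, rate=0.1, h=1e-6):
    """Gradient descent with central finite differences on the object position."""
    start_pos = list(obj_pos)
    pos = list(obj_pos)
    for _ in range(steps):
        grad = []
        for i in range(len(pos)):
            plus = pos[:i] + [pos[i] + h] + pos[i + 1:]
            minus = pos[:i] + [pos[i] - h] + pos[i + 1:]
            grad.append((objective(plus, obj_radius, fingers, start_pos)
                         - objective(minus, obj_radius, fingers, start_pos)) / (2 * h))
        pos = [p - rate * g for p, g in zip(pos, grad)]
    return pos

# One fingertip sphere at the origin pushing into an object sphere of radius 1.
fingers = [((0.0, 0.0, 0.0), 1.0)]
start = [1.5, 0.0, 0.0]
end = resolve(start, 1.0, fingers)
print(penetration(start, 1.0, *fingers[0]), penetration(end, 1.0, *fingers[0]))
```

The penalty term keeps the object close to where it was, so the optimizer pushes it just far enough to almost remove the overlap. Choosing this trade-off, and extending the unknowns to full hand and object poses, is part of the open question about the best objective function.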

Your Tasks/Challenges:

- Work out the details of the method, for instance, what exactly could be the best objective function for the optimization?

- Determine the best optimization software package, get familiar with our penetration computation software.

- Implement the method in C/C++.

- Perform a small user study.

Requirements:

- Programming skills in C/C++ (at least basic knowledge)

- Mathematical thinking (no theorem proving will be needed)

Contact:

Prof. Dr. Gabriel Zachmann: zach at informatik.uni-bremen.de

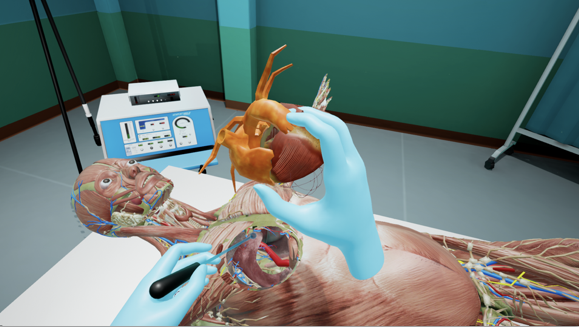

Master thesis: Mixed Reality Telepresence: Extending a Collaborative VR Telepresence System by Augmented Reality

Subject

Shared virtual reality (VR) and augmented reality (AR) systems with personalized avatars have great potential for collaborative work between remote users. Studies indicate that these technologies provide great benefits for telepresence applications, as they tend to increase the overall immersion, social presence, and spatial communication in virtual collaborative tasks. In our current project, remote doctors can meet and interact with each other in a shared virtual environment using VR headsets and are able to view live-streamed and 3D-visualized operations (based on RGB-D data) to assist the local doctor in the operating room. The local doctor is also able to join using VR.